This may seem a bit weird, but the pandemic brought a fair number of on-premises services into my daily computing life. Some would call it a “homelab”, but it’s more of a set of (mostly) web-based tools I run on the boxes in my server closet.

I’ve been meaning to write about it for months but never got around to it, and I guess today’s as good a time as any.

Where

Hardware-wise, now that I’ve given away my ageing Raspberry Pi 2 Kubernetes cluster, the entire setup is neither cool nor much to look at, and consists of:

- An i7 KVM box (my former hackintosh) with a relatively puny 16GB of RAM, running a mix of LXD containers and actual VMs (including Windows) across a few consumer SSDs (yeah, I know, not really datacenter-grade, but it works).
- A couple of Z83iis acting as a media server and a development box, running docker-compose with very strict CPU, RAM and swap limits.
- A bunch of containerized applications on my Synology, managed via its built-in Docker UI.
- My home automation setup on a Pi 4, which runs a few dozen “micro” services managed by piku.

…all run in a closet away from my office, wired up via unmanaged, zero-hassle Gigabit switches.

It’s all surprisingly compact: if I were to measure it in rack units, it would probably take up 4U solely due to the height of the Synology, but less than a quarter of a typical rack’s depth.

So it compares favorably to 1U in overall volume, if you will, and is nothing like the massive setups some of my friends have at home.

Why

I started setting up various applications on these as a complement to the Synology built-in services, which include Time Machine, our home photo archive and an IMAP server where I keep a randomly updated copy of my personal e-mail.

They have sort of taken on a life of their own since: I use some of them daily for managing my media, general tinkering, prototyping stuff and occasional troubleshooting, and some have become a sort of bridge between my Windows work machines (where I keep zero personal stuff) and my Macs and iOS devices.

What

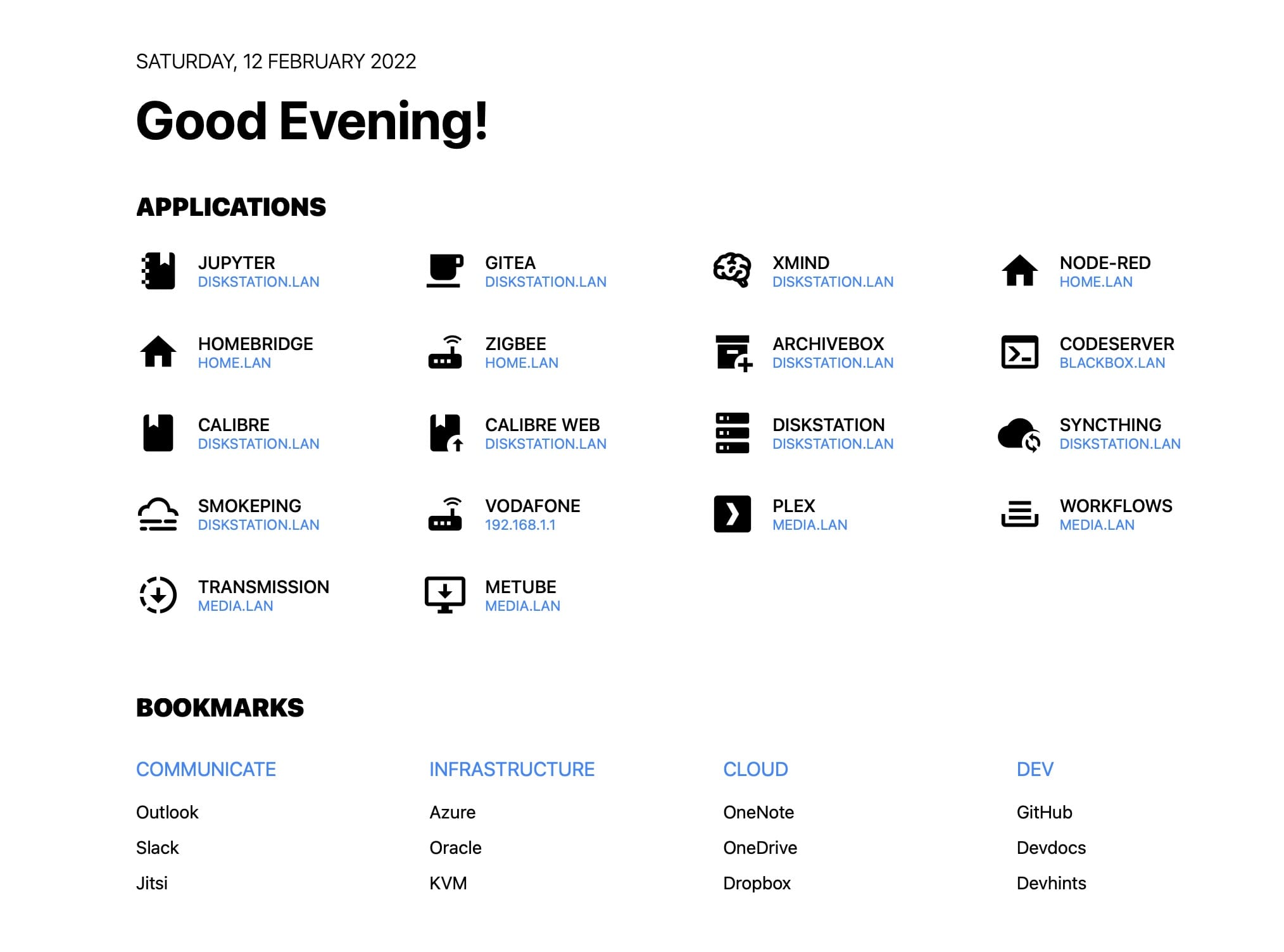

All the apps are accessible from an ultra-lightweight, custom home page I based off a popular “start page” layout (SUI), after removing 100% of the back-end, 99% of all the JavaScript and 80% of the CSS crud. It’s still recognizable, but believe me, it’s less than 5% the original size:

Without further ado, and in no particular order, here’s a quick run-down of the above (and a few other doodads):

Jupyter

I have a notebook server running 24/7, which I originally fired up to do some data science/ML (everything from an early version of my COVID dashboard to RSS feed classification) and kept because it turned out to be an excellent notebook/scrapbook/sandbox.

If I’m working, it’s a great way to quickly play around with APIs of various kinds, and when I’m not, it’s usually the first thing I reach for when I want to try out an idea.

Gitea

I keep a local Gitea instance for private projects that I won’t (or am not yet ready to) make public, local mirrors of most of my GitHub repositories, and local mirrors of some other repositories I would like to have access to in case they’re wiped off the face of the Internet. I love it: it is almost as good as GitHub for a solo developer (or a small team), and has pretty much all the features you’d need to actually run a small startup.

My wake-up call for setting up my own git infrastructure was the youtube-dl drama a while back, when its main repository was suspended due to a DMCA request, plus a few other similar incidents involving much less controversial projects.

Besides making sure I would always have access to my own projects, I soon realized there are quite a few other interesting and obscure tools and libraries I want to keep tabs on and that I would hate to lose, like the firmware for my 3D printer, a bunch of ESP32 stuff that migrated from GitHub to GitLab (and has become impossible to find as a consequence), some of the ancient Plan9 repos, etc.

So I have multiple “organizations” set up in Gitea: some mirror my stuff daily, and others hold repositories that are synced anywhere from weekly to every other month or so. The whole thing is backed up to Azure automatically via the Synology.
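If you want to replicate per-organization sync schedules like these, here is a minimal sketch that builds request bodies for Gitea’s repository migration endpoint (`POST /api/v1/repos/migrate`) with pull mirroring enabled. It only assembles the JSON payloads and makes no network calls. The organization names, tiers and intervals are hypothetical. The field names (`clone_addr`, `repo_name`, `repo_owner`, `mirror`, `mirror_interval`) follow Gitea’s `MigrateRepoOptions` as I understand it, so check them against your instance’s `/api/swagger` page before sending anything.

```python
import json
from urllib.parse import urlparse

# Hypothetical sync tiers: Gitea organization name -> mirror interval.
# Intervals use Go duration syntax; these values are assumptions.
SYNC_TIERS = {
    "mirrors-daily": "24h0m0s",
    "mirrors-weekly": "168h0m0s",
    "mirrors-every-other-month": "1440h0m0s",  # roughly every 60 days
}


def repo_name_from_url(clone_url: str) -> str:
    """Derive a repository name from a clone URL, dropping any .git suffix."""
    path = urlparse(clone_url).path.rstrip("/")
    name = path.rsplit("/", 1)[-1]
    return name[:-4] if name.endswith(".git") else name


def mirror_payload(clone_url: str, tier: str, private: bool = True) -> dict:
    """Build a JSON body for POST /api/v1/repos/migrate that creates a pull mirror."""
    if tier not in SYNC_TIERS:
        raise ValueError(f"unknown sync tier: {tier}")
    return {
        "clone_addr": clone_url,
        "repo_name": repo_name_from_url(clone_url),
        "repo_owner": tier,  # the (hypothetical) organization that owns the mirror
        "mirror": True,
        "mirror_interval": SYNC_TIERS[tier],
        "private": private,
    }


if __name__ == "__main__":
    body = mirror_payload("https://github.com/ytdl-org/youtube-dl.git", "mirrors-weekly")
    print(json.dumps(body, indent=2))
```

To send the request, POST the JSON with an `Authorization: token …` header. Gitea also enforces a configurable minimum mirror interval, so very short values may be rejected.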

These days my kids also keep their Mac and iOS projects there (complete with trouble tickets, roadmaps and releases) and I’ve been playing with Drone to do basic CI, but it’s useless for Swift so I haven’t put a lot of effort into it.

ArchiveBox

I have given up on Instapaper, Pocket, and various other web clipping/Read Later services because all of them inevitably tried to find new ways to keep the data I stored there away from me and/or were unsuitable for long-term archiving.

I tried using Evernote and OneNote for keeping snippets around as well, but in the end I realized that I didn’t need sophisticated search or having everything at my fingertips. What I really needed was a way to archive and re-read, in a decent format, things that had fallen off the Internet, and ArchiveBox does that well enough (the search is utterly abysmal, but it’s nice to have full-text and PDF renderings of some things on tap).

It isn’t perfect (in particular, it goes through submitted URLs synchronously, which is just bad back-end design, and is still broken on the iPad), but it works and I can still access all of the content if I decide to stop using it.
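To make the back-end complaint concrete: instead of processing each submitted URL while the caller waits, a submission endpoint can simply enqueue the URL and return immediately, leaving a worker to do the slow archiving. This is a minimal, stdlib-only sketch of that pattern. It is not ArchiveBox’s code, and `archive_url` is a hypothetical stand-in for the actual fetching and rendering.

```python
import queue
import threading
import time
from typing import List


def archive_url(url: str) -> str:
    """Hypothetical stand-in for the slow part (fetching, rendering PDFs, etc.)."""
    time.sleep(0.01)
    return f"archived:{url}"


class ArchiveQueue:
    """Accept URLs immediately and archive them on a background worker thread."""

    def __init__(self) -> None:
        self._pending: "queue.Queue[str]" = queue.Queue()
        self._lock = threading.Lock()
        self.results: List[str] = []
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def submit(self, url: str) -> None:
        # Returns right away; the caller never waits for archiving to finish.
        self._pending.put(url)

    def wait(self) -> None:
        # Block until every submitted URL has been processed.
        self._pending.join()

    def _run(self) -> None:
        while True:
            url = self._pending.get()
            try:
                result = archive_url(url)
                with self._lock:
                    self.results.append(result)
            finally:
                self._pending.task_done()


if __name__ == "__main__":
    q = ArchiveQueue()
    for u in ["https://example.com/a", "https://example.com/b"]:
        q.submit(u)
    q.wait()
    print(q.results)
```

With a single worker, URLs are archived in submission order. Adding more worker threads would speed things up at the cost of ordering.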

Calibre

Like many other folk, I have been using Calibre for years to “defang” and strip DRM from e-books, which I have stored as EPUB since I decided to adopt it as my master format (I also keep copies of my Kindle purchases, but what I really value are the EPUB versions).

And since it can (sort of) read PDF metadata, I’ve also been amassing a sizable library of papers and presentations over the years.

One reason I keep it running in a container (and forgo being able to plug my Kindle into it) is its scheduled news download feature, which scrapes and mails me a “Saturday newspaper” every week, wherever I may be.

I’ve been doing this for so long that I had a number of ugly hacks to run it as a service back in my Linode days, but these days it’s trivial to get running, and the nice thing about the particular image I use is that it runs Guacamole to give me GUI access via the browser, plus RDP and Calibre’s own built-in HTTP server.

So I can actually read some reference books via a browser if I want to, which has come in handy on occasion.

XMind

I’ve been using several flavors of mind-mapping for decades (I’m currently hopping between MindNode and XMind on personal and work machines), but I recently had the need to re-open old .xmind files without automatically upgrading them, and since I didn’t want to install the software anywhere permanently but wanted to have it ready to go, I ended up building a container to run it on Linux.

I stole portions of the Calibre container setup to make it available as a GUI app via Guacamole, and somewhat to my surprise, I ended up leaving it up on my NAS as a sort of cross-platform, sandboxed brainstorming notebook.

Syncthing

In short, I have my master replica running on my NAS as a Docker container, and all my personal machines sync to it. Everything is backed up to Azure from there, and it’s been almost completely hands-off except for the occasional upgrade.

Plex

I’ve always liked the idea of having my media centrally managed at home. A few years after fiddling with the SliMP3 and a couple of WDTVs, and given the increasingly obtuse restrictions Apple imposed on Home Sharing, Plex became the place where I stored the kids’ DVDs, YouTube documentaries I wanted to keep and my personal music collection, which is composed of ancient CDs you can’t really find anywhere these days.

I am still manually syncing some of it with Apple’s iTunes Match service (while it lasts, and because I just don’t like using the Plex app for music on my iPhone), but I’ve taken to using Plexamp on my desktop machines and I love it.

And I’m not even one of those FLAC fanatics. My music collection isn’t that big; it’s just old, and sometimes I still find files at 128kbps, dig out the relevant CD and re-rip it to 320kbps, curating the ID3 tags with Picard.
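You can partly automate finding those 128kbps stragglers. The sketch below skips any ID3v2 tag, locates the first MPEG-1 Layer III frame header and reads its bitrate field. It assumes constant-bitrate MPEG-1 Layer III files: for VBR files the first frame’s bitrate is not representative, and other MPEG versions are not recognized at all. Treat it as a rough first pass, not a replacement for a proper tagging tool like Picard. The `~/Music` path in the usage block is a hypothetical example.

```python
from pathlib import Path
from typing import List, Optional

# MPEG-1 Layer III bitrates in kbps, indexed by the 4-bit bitrate field.
# Index 0 ("free") and 15 ("bad") are unusable and map to None.
MPEG1_L3_KBPS = [None, 32, 40, 48, 56, 64, 80, 96, 112, 128,
                 160, 192, 224, 256, 320, None]


def id3v2_size(data: bytes) -> int:
    """Return the total size of a leading ID3v2 tag, or 0 if there is none."""
    if len(data) < 10 or data[:3] != b"ID3":
        return 0
    # The tag size is stored as four "syncsafe" bytes of 7 bits each.
    size = 0
    for b in data[6:10]:
        size = (size << 7) | (b & 0x7F)
    footer = 10 if data[5] & 0x10 else 0
    return 10 + size + footer


def first_frame_kbps(data: bytes) -> Optional[int]:
    """Find the first plausible MPEG-1 Layer III frame header and return its bitrate."""
    i = id3v2_size(data)
    while i + 4 <= len(data):
        b1, b2 = data[i + 1], data[i + 2]
        # 11 sync bits set, version bits 11 (MPEG-1), layer bits 01 (Layer III).
        if data[i] == 0xFF and (b1 & 0xFE) == 0xFA:
            kbps = MPEG1_L3_KBPS[b2 >> 4]
            # Reject false syncs: unusable bitrate or reserved sample-rate index.
            if kbps is not None and ((b2 >> 2) & 0x3) != 0x3:
                return kbps
        i += 1
    return None


def file_kbps(path: Path) -> Optional[int]:
    """Read just enough of a file to report its first frame's bitrate."""
    with path.open("rb") as f:
        f.seek(id3v2_size(f.read(10)))
        return first_frame_kbps(f.read(64 * 1024))


def low_bitrate_files(root: Path, threshold_kbps: int = 320) -> List[Path]:
    """List MP3s under root whose first frame is below threshold_kbps."""
    hits = []
    for path in sorted(root.rglob("*.mp3")):
        kbps = file_kbps(path)
        if kbps is not None and kbps < threshold_kbps:
            hits.append(path)
    return hits


if __name__ == "__main__":
    for p in low_bitrate_files(Path.home() / "Music"):
        print(p)
```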

But if you are an audiophile and want to use highly contrived formats and streaming setups, I’d say Plex is definitely your jam. It also works with Alexa (something I’m trying to get rid of).

The only thing that annoys me on a daily basis is that I can’t stream from it directly to a headless Raspberry Pi Zero W. There is an ARM client, but it is outdated and broken. I’m keeping an eye on a forum thread about it, but there’s nothing I can use yet.

MeTube

This is the newest addition to the bunch, which I set up explicitly because I got fed up with YouTube advertisements while trying to watch the excellent six-part Power On documentary that came out during the holidays.

I may or may not use it again, but it’s so light when idle that I’ll be keeping it around.

Transmission

I hate running BitTorrent but it is often the only sane way to download Ubuntu ISO files and Raspberry Pi images, so I keep it sandboxed and available from all my devices (it downloads into an SMB share I can get to from my iPad). Remarkably, it is one of the few containers I’m running that uses way less than 128MB of RAM.

Node-RED

I have three different instances up, one devoted to home automation and homebridge/zigbee2mqtt glue, another for various e-mail and data munging workflows and a “scratch” one for testing. Given that I hate developing in JavaScript, that should tell you how useful it actually is.

code-server

I’ve tried to use this for a good while, but the various hoops you need to jump through to get it working properly across browsers have been a major turn-off. These days I am mostly back to RDP or ssh and vim to work on an isolated desktop container on my KVM host, and I might actually tear it down this weekend (Update: I did exactly that, and haven’t missed it in months).

Smokeping

This is an oldie but a goodie, and I’ve kept an instance of it around for years in various forms because it has proven invaluable in diagnosing sporadic network issues (especially when I was stuck on MEO DSL and fiber, which had weird variations every other month).

These days I have a few choice probes set up for some of my services, but it’s mostly used to keep track of local carrier interconnects and other stuff from my ISP days.
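For context, Smokeping sends a batch of pings per probe round (20 by default, if I remember its defaults correctly), then graphs the median round-trip time along with packet loss, and draws the spread of the remaining samples as the “smoke”. Here is a minimal sketch of that per-round summary. It assumes lost pings are recorded as `None`, and it is an illustration of the idea rather than Smokeping’s own code.

```python
from statistics import median
from typing import Optional, Sequence


def summarize_round(rtts_ms: Sequence[Optional[float]]) -> dict:
    """Summarize one probe round: median RTT, loss percentage and min/max spread."""
    if not rtts_ms:
        raise ValueError("a probe round needs at least one ping")
    replies = sorted(r for r in rtts_ms if r is not None)
    loss_pct = 100.0 * (len(rtts_ms) - len(replies)) / len(rtts_ms)
    if not replies:
        # Total loss: there is no latency to report.
        return {"median_ms": None, "loss_pct": loss_pct, "min_ms": None, "max_ms": None}
    return {
        "median_ms": median(replies),
        "loss_pct": loss_pct,
        # The spread around the median is what Smokeping shades as "smoke".
        "min_ms": replies[0],
        "max_ms": replies[-1],
    }


if __name__ == "__main__":
    print(summarize_round([10.0, 12.0, None, 11.0, None]))
```

The median is used instead of the mean so that a couple of outlier pings do not drag the latency line around. Sporadic trouble then shows up as widening smoke or rising loss.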

Jitsi

When the pandemic started I refused to use Zoom and my friends refused to use Teams, so I set up a Jitsi instance on Azure as “neutral territory” in which we host some informal get-togethers every week or so, and that I update every couple of months.

This is probably the only “private cloud” thing I use regularly that doesn’t run in my closet, and it’s served us well so far: even though I run unstable builds, it’s been remarkably useful for hosting our weekly and monthly gatherings.

Next Steps

I don’t really think there is much more to add to this setup, or a lot of improvements to make. I might revamp my KVM box soon and install something like Proxmox, since I would like it to run Windows 11 and want to add a 1TB SSD, but I don’t really need to do it now, nor do I feel the need to ride herd on a lot of apps.

Maintenance-wise, this gives me zero trouble. Most of the updates are automatic anyway, and on that topic I’ve been considering moving some of my external-facing Node-RED automations to Office 365 and Power Automate, but I spend so much time in Office 365 at work that my heart really isn’t set on it.

Updates

I had a couple of pings about this post (especially after my other post on running a Fedora environment), so here are a few more notes:

First of all, I use watchtower for (relatively) painless container updates. It can’t really be set up via the Synology GUI because that doesn’t let you map the Docker Unix socket, so the trick is to create a docker-compose.yml file, SSH in, and run sudo docker-compose up -d from the folder it’s in.

Here’s mine:

```yaml
version: "3"
services:
  watchtower:
    image: containrrr/watchtower
    container_name: watchtower
    hostname: diskstation
    restart: always
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
    network_mode: host
    environment:
      - TZ=Europe/Lisbon
      - WATCHTOWER_CLEANUP=true
      - WATCHTOWER_SCHEDULE=0 30 3 * * *
      - WATCHTOWER_ROLLING_RESTART=true
      - WATCHTOWER_TIMEOUT=30s
      - WATCHTOWER_INCLUDE_STOPPED=true
      - WATCHTOWER_REVIVE_STOPPED=false
      - WATCHTOWER_NOTIFICATIONS=shoutrrr
      - WATCHTOWER_NOTIFICATION_URL=pushover://shoutrrr:${PUSHOVER_API_KEY}@${PUSHOVER_USER_KEY}/
    cpu_count: 1
    cpu_percent: 50
    mem_limit: 64m
```
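A note on `WATCHTOWER_SCHEDULE`: Watchtower takes a six-field cron expression with a leading seconds field, so `0 30 3 * * *` means 03:30:00 every day, evaluated in the container’s `TZ`. The sketch below spells that out for the simple daily case only (fixed second, minute and hour, with `*` everywhere else) and rejects anything fancier instead of guessing. `next_daily_run` is an illustrative helper, not part of Watchtower.

```python
from datetime import datetime, timedelta


def next_daily_run(spec: str, now: datetime) -> datetime:
    """Return the next firing time of a daily 6-field cron spec (sec min hour * * *)."""
    fields = spec.split()
    if len(fields) != 6:
        raise ValueError("expected 6 fields: sec min hour day month weekday")
    sec, minute, hour, *rest = fields
    if any(f != "*" for f in rest):
        raise ValueError("only daily schedules (day/month/weekday = *) are handled")
    # Naive datetimes, assumed to be in the container's local time zone.
    candidate = now.replace(hour=int(hour), minute=int(minute), second=int(sec), microsecond=0)
    # If today's slot has already passed (or is right now), fire tomorrow.
    if candidate <= now:
        candidate += timedelta(days=1)
    return candidate


if __name__ == "__main__":
    print(next_daily_run("0 30 3 * * *", datetime(2022, 1, 10, 12, 0)))  # 2022-01-11 03:30:00
```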

I use Pushover for notifications these days, and I set PUSHOVER_API_KEY and PUSHOVER_USER_KEY via an .env file so I don’t end up committing them to source control by mistake.
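For reference, docker-compose reads a `.env` file from the project directory and substitutes `${VAR}` references in docker-compose.yml with its values. The sketch below approximates that for plain `KEY=VALUE` lines and `${VAR}` references only (Compose itself also handles quoting, `$VAR`, defaults like `${VAR:-default}` and more). It can help sanity-check the notification URL locally. The key values in the usage block are made up.

```python
import re
from typing import Dict

VAR_REF = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]*)\}")


def parse_env(text: str) -> Dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        values[key.strip()] = value.strip()
    return values


def interpolate(template: str, values: Dict[str, str]) -> str:
    """Replace ${VAR} references. Unknown variables raise KeyError, which is
    stricter than Compose (it substitutes an empty string and warns)."""
    return VAR_REF.sub(lambda m: values[m.group(1)], template)


if __name__ == "__main__":
    env = parse_env("# never committed\nPUSHOVER_API_KEY=apitoken123\nPUSHOVER_USER_KEY=userkey456\n")
    url = "pushover://shoutrrr:${PUSHOVER_API_KEY}@${PUSHOVER_USER_KEY}/"
    print(interpolate(url, env))  # pushover://shoutrrr:apitoken123@userkey456/
```

Failing loudly on a missing key is a deliberate choice here: a silently blank token would just produce notifications that never arrive.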