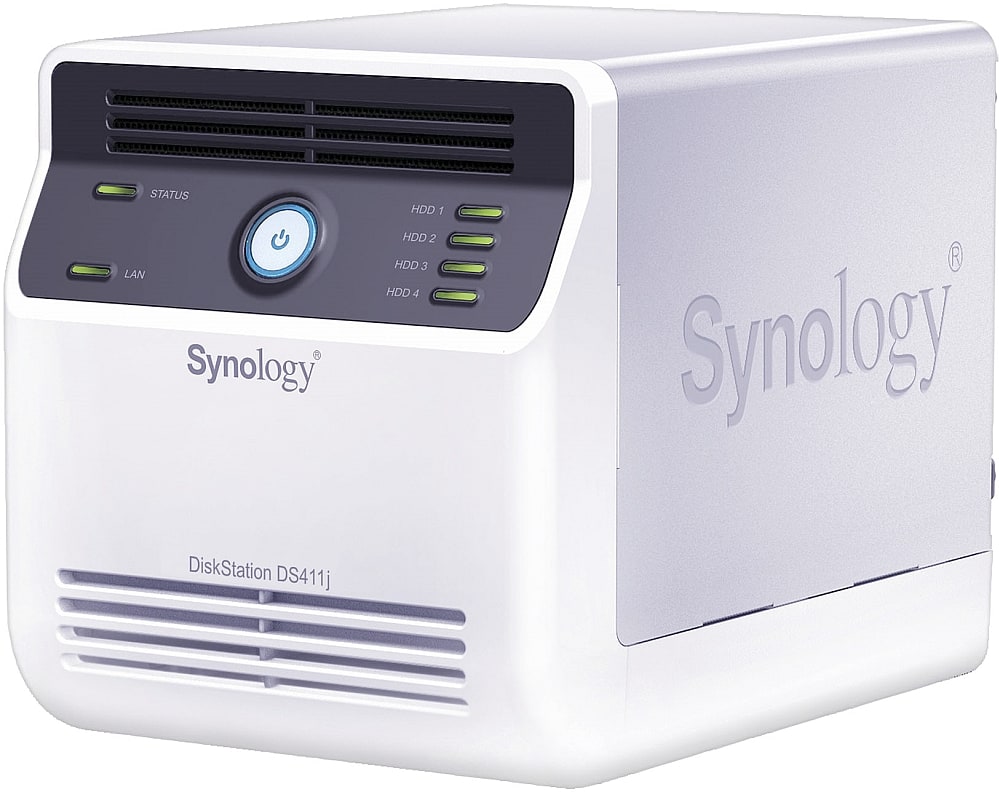

After nine years of faithful service, I decided to replace my Synology DS411j with a brand new DS1019+. This actually came to pass a few days before last Christmas, but for multiple reasons I never had the time to write about it–until now, of course.

A Short Retrospective

The DS411j served us well, especially considering that its ageing ARM CPU (a Marvell Kirkwood mv6281) is barely more capable than the original Raspberry Pi model B.

Both are single-core CPUs, with the DS411j clocked at 1.2GHz against the Pi’s 900MHz but with a paltry 116MB of RAM instead of 512MB.

The fact that something as resource constrained as this lasted nine years while still remaining useful in this age of essentially disposable hardware rates it as nothing less than an engineering marvel (pun intended).

And it’s fun to reminisce and consider that at the time of purchase it was a great upgrade from the G4 Mac mini I was using as a NAS.

That had in turn replaced a couple of 266MHz/32MB NSLU2 boxes, the history of which is interesting enough (keeping them running was one of the reasons I got deeply into ARM hardware in the first place).

Storage Inflation

One of the original reasons for getting the DS411j was providing more reliable storage for my photo collection as it expanded into the tens of gigabytes (now multiple hundreds) as well as doing Time Machine backups.

Over time, all the media in the house gradually found its way into it as I ripped the kids’ DVDs, and then mine, and then several conflicting batches of unsorted photos, VM images… the works.

It slurped everything without a qualm, and I was able to run various services on it despite its RAM constraints. But over the years it became outclassed, slow and pokey, as well as too small for our needs.

Largely thanks to my 1TB iMac (but also my 512GB MacBook Pro and other Macs in the house), Time Machine backups ballooned to a point where they were nearly two thirds of the 5TB I had available, and doing backups (or handling large volumes of files) became a major chore as we hit the DS411j’s CPU and throughput limits.

It soon got to a point where the iMac had so much trouble completing backups to it that I started using external USB drives again.

Old and Pokey

Then came the hardware troubles. Last summer, one of the fans failed while we were on vacation–it was a temporary failure, but I only figured that out after returning home. In the meantime I shut it down remotely and ordered a rare (and pricey) replacement, which was a tip-off to the fact that there weren’t many spare parts around and that it would likely be quite hard to recover from a more serious failure.

Miraculously, none of the hard disks failed (yet). But around November, things came to a head–we started running out of space, and everything I tried to do on it took hours (even logging into the otherwise great Synology web UI took several minutes).

This had been getting progressively worse over the years as Synology’s software became more and more complex, but I decided I’d waited long enough to upgrade–and that I would be doing so to something with a beefier CPU.

Upgrading to the DS1019+

I had been doing some research for a while, so it wasn’t hard to pick the DS1019+:

Like other current models, it has a quad-core Intel Celeron J3455 clocked at 1.5GHz (burstable to 2.3GHz), but unlike some of its peers it ships with 8GB of RAM (a massive improvement over the piddling 116MB of its predecessor).

The reasons I picked it were (among others):

- An extra bay (for more redundancy/storage)

- The ability to do hardware video transcoding (which is useful for Plex).

- The built-in RAM (which makes it possible to run various services as Docker containers)

- Two vacant slots for SSD caches (which will be a nice way to boost performance down the line)

I also had the good fortune to catch a promotion on 4TB WD Red disk drives, so I got five of those shipped as well (for around 15TB of usable storage).

Right now that is way more than I need, but that was also true of the DS411j when I got it.
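As a rough sanity check on the "around 15TB" figure, the arithmetic can be sketched as below. This assumes a layout with single-disk redundancy (RAID 5 or Synology's SHR-1), which the post does not actually state, and it ignores filesystem overhead. The function name is made up for illustration.

```python
def usable_capacity_tib(disks: int, disk_tb: float, parity_disks: int = 1) -> float:
    """Approximate usable space in TiB for a set of equally sized disks.

    ASSUMPTION: `parity_disks` worth of capacity goes to redundancy
    (1 for RAID 5 / SHR-1, 2 for RAID 6 / SHR-2). Filesystem and
    system partition overhead are ignored.
    """
    if disks <= parity_disks:
        raise ValueError("need more disks than parity disks")
    # Drive vendors quote decimal terabytes (10**12 bytes)...
    usable_bytes = (disks - parity_disks) * disk_tb * 10**12
    # ...while most operating systems report binary tebibytes (2**40 bytes).
    return usable_bytes / 2**40


if __name__ == "__main__":
    print(f"{usable_capacity_tib(5, 4):.2f} TiB usable from five 4TB disks")
```

Under those assumptions, five 4TB disks come out at a little over 14.5 TiB, which is in line with the rounded figure above.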

Moving things across was fairly trivial, and hardly worth mentioning–I just pointed the DS1019+ to the DS411j, set up the shares that made sense, cleaned up some stuff, and left them to copy files across for a few days. Performance over both SMB and AppleTalk was (as expected) great, and the bottleneck was clearly on the DS411j’s side.

I could have taken a more sophisticated approach like setting up data replication, etc., but for only half a dozen file shares and five users, doing a manual setup and clearing out some junk was much more effective.

Local vs Cloud Storage

Why not just “use the cloud”, Backblaze, Arq, etc., I hear you ask?

Well, I could do it for backups, sure, but I also have all my photos, music and media to think about, and those bring with them a few extra requirements:

- Latency. Storage needs to be as local as possible for media playback, but also for Mac backups and general file access–even simple stuff like sorting through a new batch of photos was a gruelling chore on the DS411j, and doing it on the DS1019+ over Gigabit Ethernet is a breeze.

- Control. I would rather have a local, single, centralised way to manage backups, encryption, and what goes (or doesn’t go) to the cloud than set up weird software with its own authentication tokens on each machine. This way even my Windows machines can back up locally with zero extra software.

- Cost. 5TB of hot storage would cost me around €85/month (or half that if I could move all of it to “cool” storage), and over a year that is roughly what I paid for my whole new NAS (which has roughly 15TB capacity).

Overall, the first two won out. And cost was roughly equivalent at such a small scale. Even considering redundancy (which I get for “free” in cloud storage) and the drawbacks of having to “maintain” the NAS (including having a UPS, having another box to keep tabs on, etc.), local storage was still slightly cheaper for a handful of terabytes.
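The figures in the cost item can be put side by side with a few lines of arithmetic. This is only a sketch using the numbers quoted above. The NAS price is not given exactly, so it is assumed here to equal one year of hot storage ("roughly what I paid"), and it leaves out electricity, the UPS and replacement disks.

```python
HOT_EUR_PER_MONTH = 85.0                     # quoted above for 5TB of hot storage
COOL_EUR_PER_MONTH = HOT_EUR_PER_MONTH / 2   # "half that" for cool storage
CLOUD_TB = 5
NAS_TB = 15


def yearly(monthly_eur: float) -> float:
    """Cost of twelve months at a flat monthly rate."""
    return monthly_eur * 12


def per_tb(total_eur: float, terabytes: float) -> float:
    """Cost per terabyte for a given total."""
    return total_eur / terabytes


hot_year = yearly(HOT_EUR_PER_MONTH)
cool_year = yearly(COOL_EUR_PER_MONTH)
# ASSUMPTION: one-off NAS price taken as equal to a year of hot storage.
nas_price = hot_year

if __name__ == "__main__":
    print(f"hot:  EUR {hot_year:.0f}/year, EUR {per_tb(hot_year, CLOUD_TB):.0f} per TB per year")
    print(f"cool: EUR {cool_year:.0f}/year, EUR {per_tb(cool_year, CLOUD_TB):.0f} per TB per year")
    print(f"NAS:  EUR {nas_price:.0f} one-off, EUR {per_tb(nas_price, NAS_TB):.0f} per TB of capacity")
```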

Truth be told, I also have Dropbox, OneDrive and iCloud (a topic well worth another post, especially since I have been trying to get rid of Dropbox for a while now but can’t until the OneDrive clients for both Mac and iOS improve markedly).

But having a home server is something I’ve grown used to (in various ways), and the DS1019+ is an opportunity to simplify some things.

Software

One of the reasons I like Synology hardware is that it comes with great software–not just for NAS features, but also for various media management and small office tasks.

Synology has kept adding dozens of single-click install applications over the years, plus an incredible amount of third-party software. But right now I’m running only a few apps:

- Mail Plus, where I keep some ancient mail archives I wanted off my Macs plus a mirror/backup of some of my personal accounts (which I access either via its webmail interface or Thunderbird)

- Moments, Synology’s modern photo management app, which has a nice web interface and some useful features I’m still trying out.

- Plex, which is there on a trial basis until I figure out if I prefer to keep running its Docker version or not.

- A set of custom Docker containers with various services like Node-RED and Calibre, to which I hope to add homeassistant some day.

I intend to move some of my HomeKit stuff across in the fullness of time (right now I still run homebridge on my ODROID), but with all that’s been happening I just haven’t had the time.

Some of the Synology software was a bit underwhelming, though:

Photos

Moments is very nice and definitely consumer-focused, but it lacks an Apple TV app (which has failed to surface for the past two years).

It does however have the saving grace of featuring fairly sophisticated duplicate detection, so I’m using it to cull dupes from my collection.

The funny thing is that I actually had no intention of trying it, but I had a number of issues with Plex and their traditional photo management app.

For some reason, the plex user for the standard Synology package did not have permission to access my photo library, since the photo share created by DS Photo uses weird (legacy?) attributes.

This had happened to me before, and is a typical hassle of Synology software. The “solution” was to remove DS Photo and Photo Station (their “pro” photo management software) altogether, which allowed Plex to access the same files as Moments and display my photo library on the Apple TV.

This is a bit clunky, but since we also use iCloud for shared family albums, sorting it out is not an immediate priority. I would, however, prefer to use either DS Photo or Moments on the Apple TV… Maybe some day.

Music

Synology has an iTunes server package (based on daapd) that, mercifully, still works with Mojave, and whose only caveat is that it requires the Synology indexing service to be active as well–something I had to disable on the DS411j for performance reasons.

I can’t access it from iOS devices since it does not support Home Sharing (nothing really does, not even Apple’s own products sometimes), but it does “work” in the sense that desktop iTunes can reach it (I have no idea about Catalina’s Music app), although cover art (a helpful mnemonic affordance) is very much a hit-and-miss affair.

Since I still pay for iTunes Cloud Library to sync my music to iOS devices (for some reason I am increasingly unable to fathom), I eventually gave up on the iTunes server and just use Plex to stream audio to my Echo Listen.

So, again, Synology’s built-in services fell somewhat short of the mark. But in the process I also found Plex Amp, which is quite well done (if a bit clunky sometimes) and might be a good alternative for getting my own music to play on my Mac…

Plex and Docker

I tried running the Plex package for the Synology for a while, but besides the trouble with DS Photo it also had some weird issues with the way it sprinkled metadata files all over the filesystem.

Those didn’t happen with my external Docker setup on a Z83ii, so I have temporarily set the Synology package up as a secondary server for music and photos only and reverted to running Plex on the Z83ii until I can spare time to work out the details of getting the Docker version running permanently on the DS1019+.

The good news is that running Docker containers on the DS1019+ is quite well implemented: you get a nice UI that lets you pull images, set up sets of containers with custom networks, define storage volumes, etc.

The only hassle so far is that upgrading containers is completely non-intuitive: you actually have to manually re-fetch the base image, stop and “clear” the existing container, and then restart it–this is something so basic that I was expecting an “update and restart” button, and a chore to do for my multiple 3-4 container setups.

So even though the feature is well implemented, it was clearly not designed for long-term maintenance and service updates, and I suspect I will eventually ditch the GUI and just run plain docker-compose manifests on it via ssh.
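For comparison, the same "re-fetch, clear and restart" chore with docker-compose boils down to two or three commands per project. The sketch below only builds (and optionally runs) those commands. The project path is hypothetical, and it assumes each setup lives in its own folder with a `docker-compose.yml` inside.

```python
import subprocess
from pathlib import PurePosixPath
from typing import List


def update_commands(project_dir: str) -> List[List[str]]:
    """Commands that refresh one compose project in place."""
    compose_file = str(PurePosixPath(project_dir) / "docker-compose.yml")
    base = ["docker-compose", "-f", compose_file]
    return [
        base + ["pull"],                      # re-fetch newer images for every service
        base + ["up", "-d"],                  # recreate containers whose image changed
        ["docker", "image", "prune", "-f"],   # drop the now-dangling old images
    ]


def update_project(project_dir: str, dry_run: bool = True) -> None:
    """Print each command and, unless dry_run is set, execute it."""
    for cmd in update_commands(project_dir):
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical project folder on the NAS; adjust to the real layout.
    update_project("/volume1/docker/node-red", dry_run=True)
```

Looping `update_project` over a handful of folders would cover the multiple 3-4 container setups mentioned above in one go.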

Backing up To Azure

Since I have a personal Azure subscription, that provides me with a convenient way to have off-site backups–and, as it happens, one that has been natively supported by Synology HyperBackup for years.

Azure has tiered hot/cool/archive blob pricing, and HyperBackup works fine with blobs in the cool tier, which is cheaper than the hot tier and nominally slower, but without any real effect on backup times.

Once I crossed the 1TB threshold, however, I started looking for ways to decrease storage costs and move as much data as possible to the archive tier.

I actually have separate storage accounts for each machine, a storage container for every kind of data (music, photos, etc.), and “folders” inside each container depending on the backup type (rclone, manual, snapshot-YYYYMMDD, etc.). That gives me a lot of flexibility to play with storage tiers, which I can set manually with any number of tools.

Azure Archive Blob Storage with HyperBackup

But what about data backed up via HyperBackup?

I have been doing backups to cool Azure blobs on the DS411j for a couple of years now, and a few tests on the DS1019+ over my fibre connection showed massive improvements in backup times–all due to the new hardware, and without any relationship to the kind of blob storage in use.

The trick to lowering costs, however, lies in picking out which bits you can shift to archive storage without getting in the way of the backup process itself.

As it turns out, HyperBackup stores the actual data inside a Pool folder (which seems to contain all the snapshot data), with index files alongside it.

So if your backup set is named (unoriginally) HyperBackup and you have it inside the photos container, then all you need to do is set all the blobs inside photos/HyperBackup.hbk/Pool to the archive tier.
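Done by hand, that is a loop over the blobs under the Pool prefix. Here is a minimal sketch of such a loop, assuming the `azure-storage-blob` Python SDK and a `ContainerClient` for the photos container. The function name is made up, and the connection details in the commented usage are placeholders.

```python
from typing import Any


def set_tier_for_prefix(container_client: Any, prefix: str, tier: str = "Archive") -> int:
    """Move every blob whose name starts with `prefix` to `tier`.

    `container_client` is expected to behave like
    azure.storage.blob.ContainerClient. Returns the number of blobs changed.
    """
    changed = 0
    for blob in container_client.list_blobs(name_starts_with=prefix):
        if blob.blob_tier == tier:
            continue  # already where we want it
        container_client.get_blob_client(blob.name).set_standard_blob_tier(tier)
        changed += 1
    return changed


# Usage against a real account (placeholders, not run here):
#
#   from azure.storage.blob import ContainerClient
#   client = ContainerClient.from_connection_string(CONNECTION_STRING, container_name="photos")
#   print(set_tier_for_prefix(client, "HyperBackup.hbk/Pool/"))
```

Note that the prefix here is relative to the container, so it does not start with photos/ the way a lifecycle policy filter does.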

To automate that, I defined an Azure storage lifecycle policy: in case I forget to set a container to the cool tier, it moves blobs there after a day, and after a month it moves everything to the archive tier:

```json
{
  "rules": [
    {
      "enabled": true,
      "name": "Archive7",
      "type": "Lifecycle",
      "definition": {
        "actions": {
          "baseBlob": {
            "tierToCool": {
              "daysAfterModificationGreaterThan": 1
            },
            "tierToArchive": {
              "daysAfterModificationGreaterThan": 30
            }
          }
        },
        "filters": {
          "blobTypes": [
            "blockBlob"
          ],
          "prefixMatch": [
            "music/HyperBackup.hbk/Pool/0",
            "photos/HyperBackup.hbk/Pool/0",
            "homes/HyperBackup.hbk/Pool/0"
          ]
        }
      }
    }
  ]
}
```
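To see how those two thresholds interact, here is a small simulation of which action would apply to a blob of a given age. It is a sketch, not Azure's implementation: it reads `daysAfterModificationGreaterThan` literally as a strict "greater than", and assumes that when several actions qualify the coldest (cheapest) one wins.

```python
from typing import Dict, Optional

# Checked coldest first, so the cheapest qualifying action is returned.
ACTION_ORDER = ["tierToArchive", "tierToCold", "tierToCool"]

# Thresholds (in days) taken from the policy above.
FIRST_POLICY: Dict[str, int] = {"tierToCool": 1, "tierToArchive": 30}


def action_for_age(days_since_modified: int, thresholds: Dict[str, int]) -> Optional[str]:
    """Return the action that would apply to a blob of this age, if any."""
    for action in ACTION_ORDER:
        threshold = thresholds.get(action)
        if threshold is not None and days_since_modified > threshold:
            return action
    return None  # too recent: the blob stays in its current tier


if __name__ == "__main__":
    for age in (0, 2, 30, 31):
        print(age, action_for_age(age, FIRST_POLICY))
```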

This has been working fine for me for many months, and I’m now spending only a few cents a day on cloud storage.

The caveat here is that if I ever need to restore from these backups, I will likely have to manually set everything inside the Pool folder back to the cool tier (which will take time), but that’s a trade-off I’m willing to make (and I can automate that away with a couple of API calls).
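Those API calls could look roughly like the sketch below, again assuming the `azure-storage-blob` Python SDK (the function name is made up). It asks Azure to rehydrate every archived blob under a prefix back to the cool tier and skips blobs that already have a rehydration pending. Rehydration itself is asynchronous and can take hours.

```python
from typing import Any, List


def rehydrate_prefix(
    container_client: Any,
    prefix: str,
    target_tier: str = "Cool",
    priority: str = "Standard",
) -> List[str]:
    """Request rehydration of archived blobs under `prefix`.

    `container_client` is expected to behave like
    azure.storage.blob.ContainerClient. Returns the names of the blobs
    for which a tier change was requested.
    """
    requested = []
    for blob in container_client.list_blobs(name_starts_with=prefix):
        if blob.blob_tier != "Archive":
            continue  # already online
        if getattr(blob, "archive_status", None):
            continue  # a rehydration is already in progress
        container_client.get_blob_client(blob.name).set_standard_blob_tier(
            target_tier, rehydrate_priority=priority
        )
        requested.append(blob.name)
    return requested
```

A restore would then have to wait until a listing shows no blobs left in the archive tier before pointing HyperBackup at the backup set again.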

Update, September 2023: With the availability of a new cold storage tier and some new services I’ve added in the meantime, I revised the above to use that before moving things to archive (it can likely be a bit more aggressive, but I will tweak the thresholds later):

```json
{
  "rules": [
    {
      "enabled": true,
      "name": "Cool7Cold30Archive90",
      "type": "Lifecycle",
      "definition": {
        "actions": {
          "baseBlob": {
            "tierToCool": {
              "daysAfterModificationGreaterThan": 7
            },
            "tierToCold": {
              "daysAfterModificationGreaterThan": 30
            },
            "tierToArchive": {
              "daysAfterModificationGreaterThan": 90
            }
          }
        },
        "filters": {
          "blobTypes": [
            "blockBlob"
          ],
          "prefixMatch": [
            "music/HyperBackup.hbk/Pool/0",
            "photos/HyperBackup.hbk/Pool/0",
            "system/HyperBackup.hbk/Pool/0",
            "homes/diskstation_1.hbk/Pool/0",
            "docker/diskstation_1.hbk/Pool/0",
            "photos/rclone"
          ]
        }
      }
    }
  ]
}
```
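Since the thresholds are likely to change, it may be easier to generate the policy than to edit the JSON by hand. The helper below is a hypothetical sketch that rebuilds the same structure from three numbers and a list of prefixes, and refuses thresholds that are not in increasing order.

```python
import json
from typing import Dict, Iterable


def lifecycle_policy(
    name: str,
    prefixes: Iterable[str],
    cool_after: int,
    cold_after: int,
    archive_after: int,
) -> Dict:
    """Build a single-rule lifecycle policy with cool/cold/archive steps."""
    if not 0 <= cool_after < cold_after < archive_after:
        raise ValueError("thresholds must increase: cool < cold < archive")

    def after(days: int) -> Dict[str, int]:
        return {"daysAfterModificationGreaterThan": days}

    return {
        "rules": [
            {
                "enabled": True,
                "name": name,
                "type": "Lifecycle",
                "definition": {
                    "actions": {
                        "baseBlob": {
                            "tierToCool": after(cool_after),
                            "tierToCold": after(cold_after),
                            "tierToArchive": after(archive_after),
                        }
                    },
                    "filters": {
                        "blobTypes": ["blockBlob"],
                        "prefixMatch": list(prefixes),
                    },
                },
            }
        ]
    }


if __name__ == "__main__":
    policy = lifecycle_policy(
        "Cool7Cold30Archive90",
        ["photos/HyperBackup.hbk/Pool/0", "photos/rclone"],
        cool_after=7,
        cold_after=30,
        archive_after=90,
    )
    print(json.dumps(policy, indent=2))
```

The output can be saved to a file and applied through the portal or, as far as I know, with `az storage account management-policy create --policy @policy.json` plus the account and resource group names.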

Belt And Suspenders

However, I did have a strange problem with HyperBackup the other day–one of the backup jobs suddenly refused to back up to Azure without any useful debugging info in the logs. Synology’s software generally works well, but sometimes it is prone to inscrutable failure modes, and that is something I’m not very keen on.

So I’m following a “belt and suspenders” approach and using rclone and restic for some manual backups as well.

Given time, I may even move away from HyperBackup altogether (let’s face it, it’s never a great idea to rely upon vendor-specific solutions in the long run), but the good thing is that thanks to Docker support I’m 100% sure I can get anything I need running on the DS1019+.

So let’s see if it will last me another nine years.

Update, two weeks later:

It seems that the mysterious HyperBackup failure (which happened again, on the same backup set) was due to it attempting to run a backup integrity check and one of the blobs it was looking for having been moved to the archive tier.

Since Synology has effectively zero logging in this regard, this is just an educated guess, because I “fixed it” by moving all the blobs in that set back to the cool tier.

Since Azure storage has internal data integrity checks of its own, I’m going to run an extended test with the archive policy turned back on and HyperBackup integrity checks off.

Another Update, a few months later:

As it turns out, HyperBackup seems to rely on having some index files inside Pool permanently accessible, so I’ve updated the example above to only archive files inside Pool/0 and am testing it on a couple of backup sets.
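The effect of narrowing the filter to Pool/0 can be illustrated with plain prefix matching, which is how `prefixMatch` works (a string prefix on the container name plus blob name). The blob names below are invented for the example, since I don't know HyperBackup's real file naming.

```python
from typing import Iterable

ARCHIVE_PREFIXES = [
    "music/HyperBackup.hbk/Pool/0",
    "photos/HyperBackup.hbk/Pool/0",
    "homes/HyperBackup.hbk/Pool/0",
]


def is_archivable(blob_path: str, prefixes: Iterable[str] = ARCHIVE_PREFIXES) -> bool:
    """True if `blob_path` (container/blob name) matches any policy prefix."""
    return any(blob_path.startswith(prefix) for prefix in prefixes)


if __name__ == "__main__":
    # HYPOTHETICAL names, for illustration only.
    samples = [
        "photos/HyperBackup.hbk/Pool/0/12/34.bucket",  # data under Pool/0
        "photos/HyperBackup.hbk/Pool/some.index",      # index file directly in Pool
        "photos/HyperBackup.hbk/Config/settings",      # outside Pool altogether
    ]
    for path in samples:
        print(path, is_archivable(path))
```

Only the first sample matches, so anything sitting directly inside Pool stays in an online tier where HyperBackup can still read it.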