All the hardwares.

Monday, 2023-02-06

Great day to walk 5Km to lunch and back again.

- Did one better and vacuumed the office instead of just tidying it before work.

- Completed another Oxide and Friends podcast episode during my exercise–only twelve or so more to go until I catch up with real time!

- Got another HDMI to CSI bridge in the post, which was a good reminder to twiddle with the CAD design for the PiKVM case again. Also printed a set of test catches to figure out my KP3S Pro’s minimal tolerances when using PETG.

- Checked on various electronics parts still in transit.

- Paid some bills.

Tuesday, 2023-02-07

No time to go out to lunch, despite great weather.

- Did some research on the Intel AVX-512 instruction set.

- Printed an arm on my Prusa to mount the modular filament holder I made last week. It turned out great despite some PETG warping.

- Fine-tuned the 3D model for my PiKVM case a bit more.

Wednesday, 2023-02-08

Another 5Km walk during lunchtime, albeit a rainier one.

- Finished off the PiKVM case design with better, more resistant snap fits and board stand-offs, did a final print, and published it on GitHub.

Thursday, 2023-02-09

Another round of Microsoft layoffs, which impacted my organization directly this time.

- Ended the day early for sanity’s sake.

- Lacking the mental bandwidth to use FreeCAD (which seemed like a good distraction, but requires effort and focus beyond what I can currently spare), spent a very late, sleepless evening poking at the OpenSCAD model for a new filament holder to bolt onto my KP3S Pro’s 2040 extrusion with minimal flex.

- Did some overnight doomscrolling on LinkedIn, because I’m human and some of the best people I crossed paths with were posting their goodbyes.

Friday, 2023-02-10

More doomscrolling. Can’t really say more.

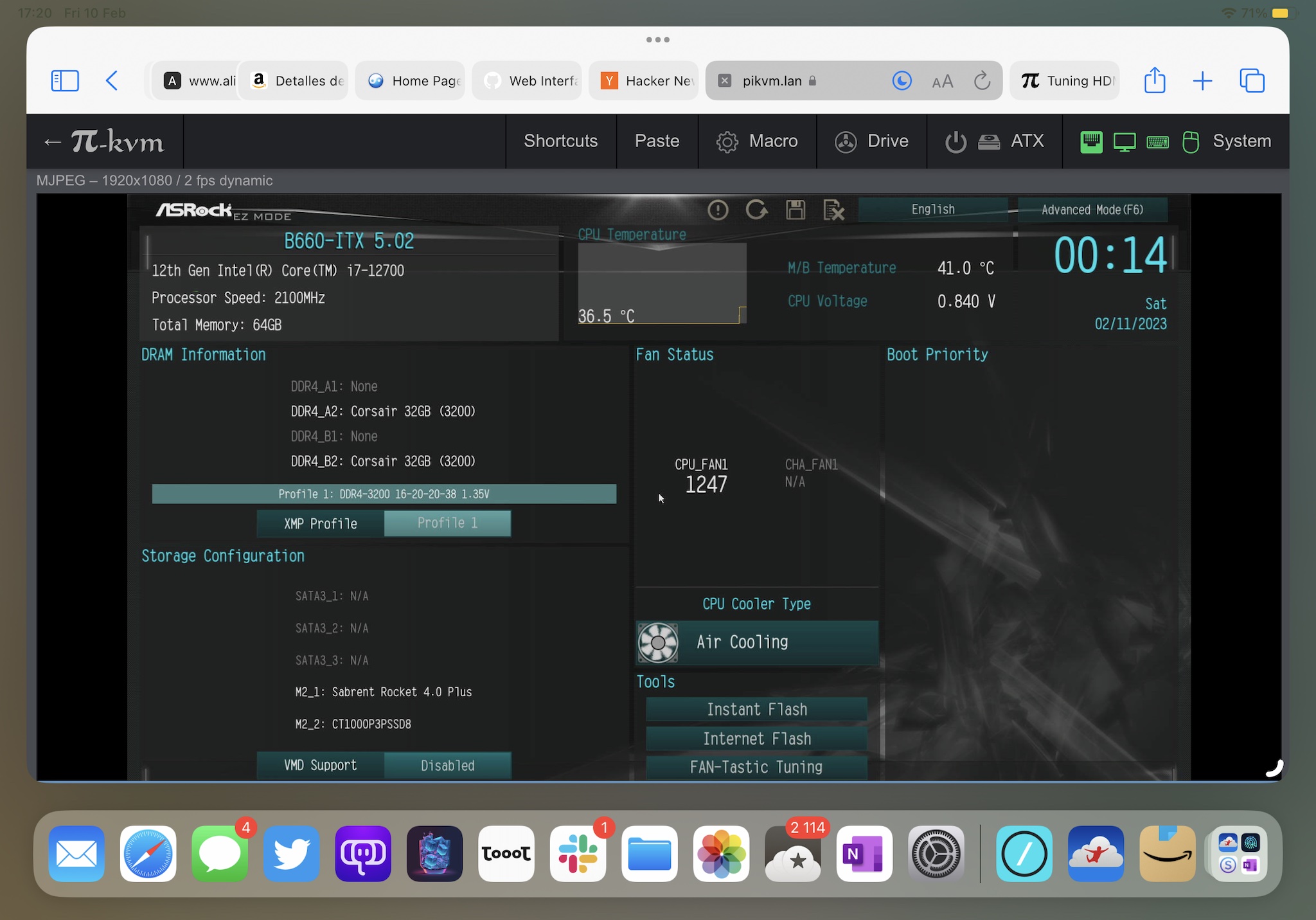

Fortunately most of the remaining parts for my new homelab server arrived (an i7-12700K, fast storage, a bunch of RAM and an RTX 3060 in a nice, compact case), so I had a ready distraction for close of business:

- Put my work laptop on a shelf and removed Outlook from my phone temporarily. It can all wait for next Monday.

- Took a few pictures for when I actually blog about this.

- Put together most of the components and got the machine to… Not POST.

- Learned that a memory slot being labelled “A-1” does not mean it is the one to use for a single DIMM.

- Installed Proxmox wirelessly by downloading the `.iso` image directly to my little PiKVM and booting it off its OTG port.

This is exactly why I decided to build a PiKVM a couple of months back, although when I planned for it I had no real clue of what was about to happen.

Allowing me to do this from my iPad while sitting in bed, unable to sleep and pondering the layoffs, was the little thing’s moment of glory.

Saturday, 2023-02-11

Dived into setting up my new machine, mostly managing to forget about work.

Deep Linux kernel and LXC hackery ensued, because I want to run it completely headless and avoid having the GPU dedicated to a single VM (or split into virtual partitions across several).

- Spent a while trying various GPU sharing/virtualization/pass-through approaches in Proxmox. Ideally I would like to be able to run ML workloads in an `LXC` container and Steam in another container, avoiding Windows altogether.

- Spent an even longer while trying to align host device driver versions with client `CUDA` versions in the `LXC` userland, to no avail.

- Learned that it is completely possible to have `nvidia-smi` work fine but have `CUDA` device detection fail to see your GPU, so `PyTorch` refuses to run.

- Changed gears and tried setting up Steam in a container, but came across bug #8916, which Valve has closed without actually fixing. TL;DR: Big Picture mode is completely broken, and so is streaming.

- Spent an ungodly amount of time trying to find a usable `armv6` Arch Linux archive to install two packages on my Pi Zero W PiKVM so that I can find it via Bonjour/mDNS:

```
# cat /etc/pacman.d/mirrorlist
##
## Arch Linux repository mirrorlist
## Generated on 2023-02-11
##
Server = https://alaa.ad24.cz/repos/2022/02/01/armv6h/$repo
```

They weren’t kidding when they said the Zero W was unsupported (Arch discontinued armv6 support in 2022), but it lives!
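Going back to the Saturday CUDA-in-`LXC` troubles, two things seem worth checking from inside the container. The first is whether the userland `libcuda` matches the host's kernel module version exactly. The second is whether the device nodes CUDA needs are actually passed through: `nvidia-smi` can get by with `/dev/nvidiactl` and `/dev/nvidia0`, while CUDA also needs `/dev/nvidia-uvm`. This is a minimal sketch, not the exact procedure I followed. The function names are hypothetical, the default library directory assumes Fedora's `/usr/lib64`, and it assumes `/proc/driver/nvidia/version` is readable inside the container.

```sh
# Hypothetical helpers for "nvidia-smi works but CUDA can't see the GPU"
# inside an LXC container. Source this file, then run check_cuda_setup.
# Paths are parameters so the checks can be pointed at other locations.

# Print the NVIDIA kernel module version (e.g. 525.85.12): the first
# dotted version number in the driver's /proc version file.
kernel_module_version() {
  grep -o -m1 -E '[0-9]+\.[0-9]+(\.[0-9]+)?' "${1:-/proc/driver/nvidia/version}" | head -n1
}

# Print the version suffix of the userland libcuda.so.X.Y.Z in a library
# directory (assumes Fedora's /usr/lib64 by default). Fails if none is found.
userland_cuda_version() {
  local lib
  for lib in "${1:-/usr/lib64}"/libcuda.so.*.*; do
    [ -e "$lib" ] || continue
    printf '%s\n' "${lib##*/libcuda.so.}"
    return 0
  done
  return 1
}

# List missing device nodes: nvidia-smi only needs the first two,
# but CUDA also opens /dev/nvidia-uvm, which is easy to forget to pass through.
missing_cuda_nodes() {
  local node
  for node in nvidiactl nvidia0 nvidia-uvm; do
    [ -e "${1:-/dev}/$node" ] || printf '%s\n' "$node"
  done
}

# Run both checks. Returns non-zero if anything looks wrong.
check_cuda_setup() {
  local kver uver missing status=0
  kver="$(kernel_module_version "${1:-/proc/driver/nvidia/version}" 2>/dev/null)" || true
  uver="$(userland_cuda_version "${2:-/usr/lib64}")" || true
  if [ -z "$kver" ] || [ "$kver" != "$uver" ]; then
    echo "version mismatch: kernel module '$kver' vs userland libcuda '$uver'"
    status=1
  fi
  missing="$(missing_cuda_nodes "${3:-/dev}" | paste -s -d ' ' -)"
  if [ -n "$missing" ]; then
    echo "missing device nodes: $missing"
    status=1
  fi
  if [ "$status" -eq 0 ]; then
    echo "ok: driver $kver, device nodes present"
  fi
  return "$status"
}
```

Source this file into a shell inside the container and run `check_cuda_setup` with no arguments to check the default locations. On a Debian-based userland, pass the library directory explicitly as the second argument (something like `/usr/lib/x86_64-linux-gnu`).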

Sunday, 2023-02-12

I knew what I was getting into when I decided to get an NVIDIA GPU, but there is a limit to how much dkms and uid mapping one can enjoy.

Still, most things are now working the way I want.

- After a bit more tinkering, got the NVIDIA `CUDA` drivers to work with `LXC` pretty much perfectly by installing driver version `525.85.12` on the Proxmox host and in a Fedora container.

- Tested `CUDA` with a known quantity: Blender. Cycles rendering works great and everything is detected, but the `DRI` device nodes have the wrong permissions.

- Spent a fair amount of time figuring out how to do `uid`/`gid` mapping in Proxmox so I could access `/dev/dri` properly as a member of the `video` and `render` groups inside the `LXC` container.

- Learned that `LXC`’s `lxc.idmap` directive is particularly obtuse when you just want to map a `gid`. Will definitely blog about it.

- Enabled the i7-12700K’s iGPU (it’s `Xe` graphics, which is nothing to sneeze at) and made sure RDP sessions were able to use that for GL acceleration using `glamor`. It’s way easier than a few months ago.

- That got Big Picture mode working (and game streaming to my iPad), but rendered by the iGPU since Steam (apparently) can’t use the NVIDIA card if it is started in an X session managed by another GPU. Multiple GPUs are hard, let’s go shopping!
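That `lxc.idmap` post isn't written yet, so here is the general shape of the problem. An unprivileged Proxmox container maps container IDs 0 to 65535 onto host IDs starting at 100000. Passing one group straight through means carving a one-ID hole in that range and remapping everything on either side of it, for every such group. On top of that, root must be allowed to map the host GID in the host's `/etc/subgid`. This is a minimal sketch that generates those lines, assuming that default 100000/65536 layout. The function names are hypothetical, and the GIDs in the example are placeholders. Check the real ones with `getent group video render` on both the host and the container, since they differ between Debian-based Proxmox and Fedora.

```sh
# Hypothetical helper: print Proxmox lxc.idmap lines for an unprivileged
# container, passing selected container GIDs straight through to host GIDs.
# Usage: lxc_idmap CT_GID:HOST_GID [CT_GID:HOST_GID ...]
# Assumes the default layout (container IDs 0..65535 -> host 100000+) and
# no duplicate or overlapping pairs.
lxc_idmap() {
  local base=100000 size=65536
  # Users are left alone: one contiguous mapping.
  printf 'lxc.idmap: u 0 %d %d\n' "$base" "$size"
  # Walk the pairs in container-GID order, filling each gap with the
  # default offset mapping and punching a 1-ID hole for each passthrough.
  printf '%s\n' "$@" | sort -t: -k1,1n | {
    next=0
    while IFS=: read -r ct host; do
      [ -n "$ct" ] || continue
      if [ "$ct" -gt "$next" ]; then
        printf 'lxc.idmap: g %d %d %d\n' "$next" $((base + next)) $((ct - next))
      fi
      printf 'lxc.idmap: g %d %d 1\n' "$ct" "$host"
      next=$((ct + 1))
    done
    # Map whatever is left above the last passed-through GID.
    if [ "$next" -lt "$size" ]; then
      printf 'lxc.idmap: g %d %d %d\n' "$next" $((base + next)) $((size - next))
    fi
  }
}

# The host's /etc/subgid must also let root map each passed-through host GID.
subgid_entries() {
  local pair
  for pair in "$@"; do
    printf 'root:%s:1\n' "${pair#*:}"
  done
}
```

As an example, take Fedora’s `video` group as GID 39 and a placeholder `render` GID of 105 inside the container, mapped to host GIDs 44 and 104. `lxc_idmap 39:44 105:104` then yields one `u` line plus five `g` lines that together still cover all 65536 container GIDs. `subgid_entries 39:44 105:104` prints `root:44:1` and `root:104:1` for the host.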

At this point, we literally went shopping.

- Set up Stable Diffusion inside a fresh `LXC` container. Without any tuning, and despite kneecapping the CPU side by only giving it 6 virtual cores (but with no GPU restrictions yet), the 12GB RTX 3060 seems way faster than my M1 Pro and the 8GB Azure-borne Tesla M60 I was playing with. That said, I’m using a completely different set of libraries on each, so this is a meaningless tomatoes-to-apples-to-oranges comparison. Still, having a self-hosted ML sandbox was one of my key goals, so it’s a good outcome.

- Played around with `PRIME` render offload by using `__NV_PRIME_RENDER_OFFLOAD` and `__GLX_VENDOR_LIBRARY_NAME` to tell well-behaved applications to use the NVIDIA GPU:

```sh
# This works fine (as do the Vulkan variants).
# Steam ignores it completely.
__NV_PRIME_RENDER_OFFLOAD=1 __GLX_VENDOR_LIBRARY_NAME=nvidia openscad
```

- Decided to call it a night and post these notes.
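The PRIME render offload incantation above is easy to wrap so it doesn't have to be retyped every time. This is a minimal sketch with a hypothetical function name. Besides the two GLX variables from the post, it also sets `__VK_LAYER_NV_optimus=NVIDIA_only`, which is the variable NVIDIA's driver documentation gives for Vulkan render offload. I'm assuming that is the kind of Vulkan variant referred to above.

```sh
# Hypothetical wrapper: run a command on the NVIDIA GPU via PRIME render
# offload while the X session itself is driven by another GPU (the iGPU here).
nv_offload() {
  # GLX: have libglvnd dispatch OpenGL to NVIDIA's vendor library.
  # Vulkan: have NVIDIA's Optimus layer expose only the NVIDIA device.
  __NV_PRIME_RENDER_OFFLOAD=1 \
  __GLX_VENDOR_LIBRARY_NAME=nvidia \
  __VK_LAYER_NV_optimus=NVIDIA_only \
    "$@"
}
```

With this, `nv_offload openscad` behaves like the one-liner above. Applications that ignore these variables, like Steam in my case, are unaffected.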

Will try forcing xorgxrdp-glamor to pick the NVIDIA card sometime during the week–after last Friday, I will certainly need something to keep my mind off things after work…