Over the past year or so, I’ve come across a number of myths regarding micro-services:

- That they are only for web services

- That they will kill/are enemies/don’t get along with SOA

- That they result in “shattered monoliths”, more complex and harder to manage than existing architectures

- That they’re hard to design

- That they’re hard to deploy and maintain

None of these is actually true - although, of course, human ingenuity, knowing no bounds, is sure to supply critics with enough data points on any of these dimensions to fuel some healthy argument.

So what are micro-services, anyway?

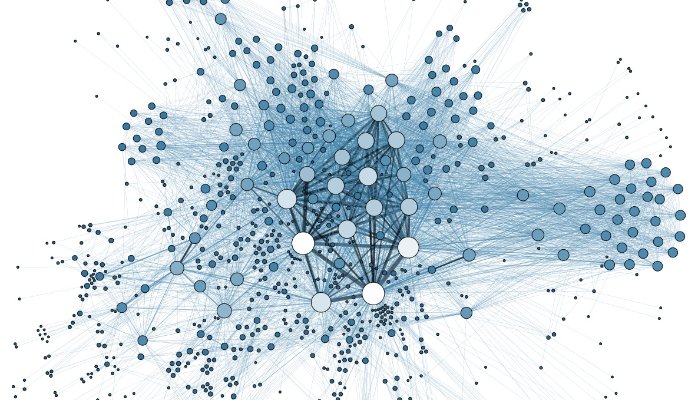

By definition, micro-services are building blocks: small, interconnected pieces of software that talk to each other using well-defined, language-agnostic APIs, coming together to form a larger application or system.

What most people don’t consider is that an API can be a lot more than, say, a REST endpoint (which is the usual mental picture of a micro-service): It can be an event queue, for instance, or even a single network packet. It all depends on how well-defined (and often, how well suited to the task) that API is.

But the most common (and useful) ways to interconnect micro-services are high-volume event queues (solutions like Kafka, NATS or Azure Event Hubs, which are useful in back-end scenarios) and REST APIs (which are more useful in front-end scenarios).
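To make "well-defined, language-agnostic" a little more concrete, here is a minimal sketch of an event contract expressed as JSON. The `BillingEvent` type and its field names are made up for illustration; the only point is that both sides agree on the shape of the message, whatever transport (queue, HTTP, raw socket) carries it.

```python
import json
from dataclasses import asdict, dataclass


# Hypothetical contract: these field names are illustrative assumptions,
# not taken from any real billing system.
@dataclass(frozen=True)
class BillingEvent:
    account_id: str
    amount_cents: int
    currency: str


def encode(event: BillingEvent) -> bytes:
    """Serialize to UTF-8 JSON, which any language can parse."""
    return json.dumps(asdict(event), sort_keys=True).encode("utf-8")


def decode(payload: bytes) -> BillingEvent:
    """Parse a payload and reject anything that is off-contract."""
    data = json.loads(payload.decode("utf-8"))
    expected = {"account_id": str, "amount_cents": int, "currency": str}
    if not isinstance(data, dict) or set(data) != set(expected):
        raise ValueError("payload does not match the BillingEvent contract")
    for name, kind in expected.items():
        value = data[name]
        # bool is a subclass of int in Python, so exclude it explicitly.
        if not isinstance(value, kind) or isinstance(value, bool):
            raise ValueError(f"field {name!r} must be {kind.__name__}")
    return BillingEvent(**data)
```

A producer written in Java or C# only has to emit the same three fields; the consumer neither knows nor cares what language sits at the other end.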

For instance, let’s say you need to handle (tally, filter and act upon) millions of events from a real-time billing system.

Which do you think is easier to design:

- storing all the incoming events on a massive system and doing batch processing on the accumulated data, or…

- building a set of simple data consumers that do the filtering on-the-fly?

Most people, based on traditional ways of thinking, would, alas, still give more than a moment’s consideration to the first option. But which will be faster? Which can provide real-time results? And, more to the point, which one will you be able to scale up or down at will as your needs change? Not to mention that each micro-service, taken individually, will be easier to code, debug and maintain than a batch system.
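The second option can be sketched in a few lines. This is a toy, in-process illustration rather than a real deployment: the event shape (`account`, `amount`), the thresholds and the `act` callback are all assumptions, and in practice each stage would read from something like a Kafka topic instead of a Python iterable.

```python
from collections import Counter
from typing import Callable, Dict, Iterable, Iterator

# Hypothetical event shape: {"account": str, "amount": int}
Event = Dict[str, object]


def filter_events(events: Iterable[Event], min_amount: int) -> Iterator[Event]:
    """Pass through only events at or above a threshold, one at a time."""
    for event in events:
        if event["amount"] >= min_amount:
            yield event


def tally_and_act(
    events: Iterable[Event],
    alert_at: int,
    act: Callable[[str, int], None],
) -> Counter:
    """Keep a running total per account and act once when it crosses alert_at."""
    totals: Counter = Counter()
    alerted = set()
    for event in events:
        account = event["account"]
        totals[account] += event["amount"]
        if totals[account] >= alert_at and account not in alerted:
            alerted.add(account)
            act(account, totals[account])
    return totals
```

Chaining them is just `tally_and_act(filter_events(stream, 100), 500, notify)`, where `stream` and `notify` are whatever your own system provides. Nothing is accumulated beyond the running totals, and each stage is small enough to test and scale on its own.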

The Truth

As it happens, those five myths are bunk. The really hard problems in micro-services boil down to three things:

- Instantiation (i.e., scaling)

- Service discovery

- Maintaining state

The first one is easy enough to reason about: micro-services need to run somewhere. So how do they differ from “normal” services? Why can’t a SOA endpoint, running on my traditional ESB web server, be a “micro”-service?

Well, the point about micro-services is not what kind of API they speak or in which web server they run, but rather how they can be scaled up (or down) as needs arise.

Micro-services require scalable, resilient scaffolding (i.e., the ability to run automatically on multiple machines, or to run multiple instances on each machine to take advantage of multiple CPU cores), and that is not something you usually get out of a conventional SOA framework (or ESB) - although some are beginning to tackle that problem, even as they are bound by their own conventions.

Rather, you need something like Service Fabric to manage your micro-service instances, spread the load uniformly across supporting systems, and ensure resiliency. That’s where both service discovery (finding out where your micro-service instances are) and maintaining state (ensuring that they keep working regardless) come in.
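To give a feel for what service discovery involves, here is a deliberately naive sketch of a heartbeat-based registry. It is not how Service Fabric (or any real orchestrator) implements discovery; the `ServiceRegistry` class, its method names and the TTL approach are assumptions chosen to show the idea in as few lines as possible.

```python
import time
from typing import Callable, Dict, List, Tuple


class ServiceRegistry:
    """Toy registry: an instance is 'alive' if it sent a heartbeat recently."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so the registry can be tested
        self._last_seen: Dict[Tuple[str, str], float] = {}

    def heartbeat(self, service: str, address: str) -> None:
        """Called periodically by each running instance."""
        self._last_seen[(service, address)] = self._clock()

    def lookup(self, service: str) -> List[str]:
        """Return addresses of instances whose heartbeat has not expired."""
        now = self._clock()
        return sorted(
            address
            for (name, address), seen in self._last_seen.items()
            if name == service and now - seen <= self._ttl
        )
```

An instance that crashes simply stops sending heartbeats and drops out of `lookup` once its TTL lapses. The hard parts a real platform adds on top (replicating the registry itself, restarting failed instances, moving their state) are exactly why you want a framework rather than a home-grown version of this.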

Docker will be a part of that story in due time (it’s already the gold standard for packaging and re-using “conventional” services, but it’s still acquiring the degree of fine-grained control and self-healing that’s required).

But the main point is that you do need to re-think the way you approach your applications. The 12 Factor App “manifesto” is, as always, a good place to start (and worth revisiting every time you need to design scalable systems of any kind), but you need to be pragmatic about it.

Build Your First Micro-service

Start with something small, say a single API call that is very frequently used and well-defined. Do not shy away from high volume scenarios (because those are exactly the ones that will benefit the most from micro-services).

Get some objective metrics - measure that API call’s performance across a wide range of conditions (time of day, calling service, volume of results, etc.), and try to figure out how to break it down into smaller operations.
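If you have no metrics tooling in place yet, something as simple as the following sketch is enough to get a baseline. The `measure` and `summarize` helpers are hypothetical names, and `call` stands in for whatever function wraps your API request; a proper load-testing tool will do a better job once you get serious.

```python
import statistics
import time
from typing import Callable, Dict, List


def summarize(samples_ms: List[float]) -> Dict[str, float]:
    """Reduce latency samples (in milliseconds) to a few percentiles."""
    ordered = sorted(samples_ms)
    # n=100 yields 99 cut points; the "inclusive" method keeps every
    # result within the observed minimum and maximum.
    cuts = statistics.quantiles(ordered, n=100, method="inclusive")
    return {
        "p50": statistics.median(ordered),
        "p95": cuts[94],
        "p99": cuts[98],
        "max": ordered[-1],
    }


def measure(call: Callable[[], object], runs: int = 100) -> Dict[str, float]:
    """Time `call` repeatedly and summarize the latencies (needs runs >= 2)."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append((time.perf_counter() - start) * 1000.0)
    return summarize(samples)
```

Run it at different times of day and with different payload sizes, and keep the numbers: percentiles tell you far more than an average, and they give you something to compare against once the call has been broken up.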

Then take those smaller operations (if any) and implement them in a micro-services framework. Then bring more load to bear on them, and watch as the system scales out, using as many (or as few) resources as necessary to deliver what is (quite often) vastly improved performance.

If you’re a C# or Java developer, you can get started with Azure Service Fabric (which now has a Linux version). Besides great tooling (it is, after all, completely integrated into Visual Studio), it will provide a solid framework for getting your code running both on-premises and in the cloud - starting on your own laptop, and scaling out to potentially millions of machines.

But if you’re into Node.js, I would suggest taking a serious look at Azure Functions - it will let you get started handling HTTP requests, event queues and storage right away, and is an excellent way of experiencing the beauty of small, self-contained services right from your browser, regardless of which operating system you use.

Go micro - think big.

(This post originally appeared on LinkedIn)