This one took me a long time, and it was actually the third or fourth RK3588 board I got to review–but the timing of its arrival was completely off (it was in the middle of a work crisis) and I had to put it on the back burner for a while.

WARNING: After a couple of months of testing I had a weird hardware issue that resulted in the death of three of my SSDs, so I have temporarily shelved the CM3588. I have updated all CM3588-related posts on this site with this note, and will update the link above as things progress.

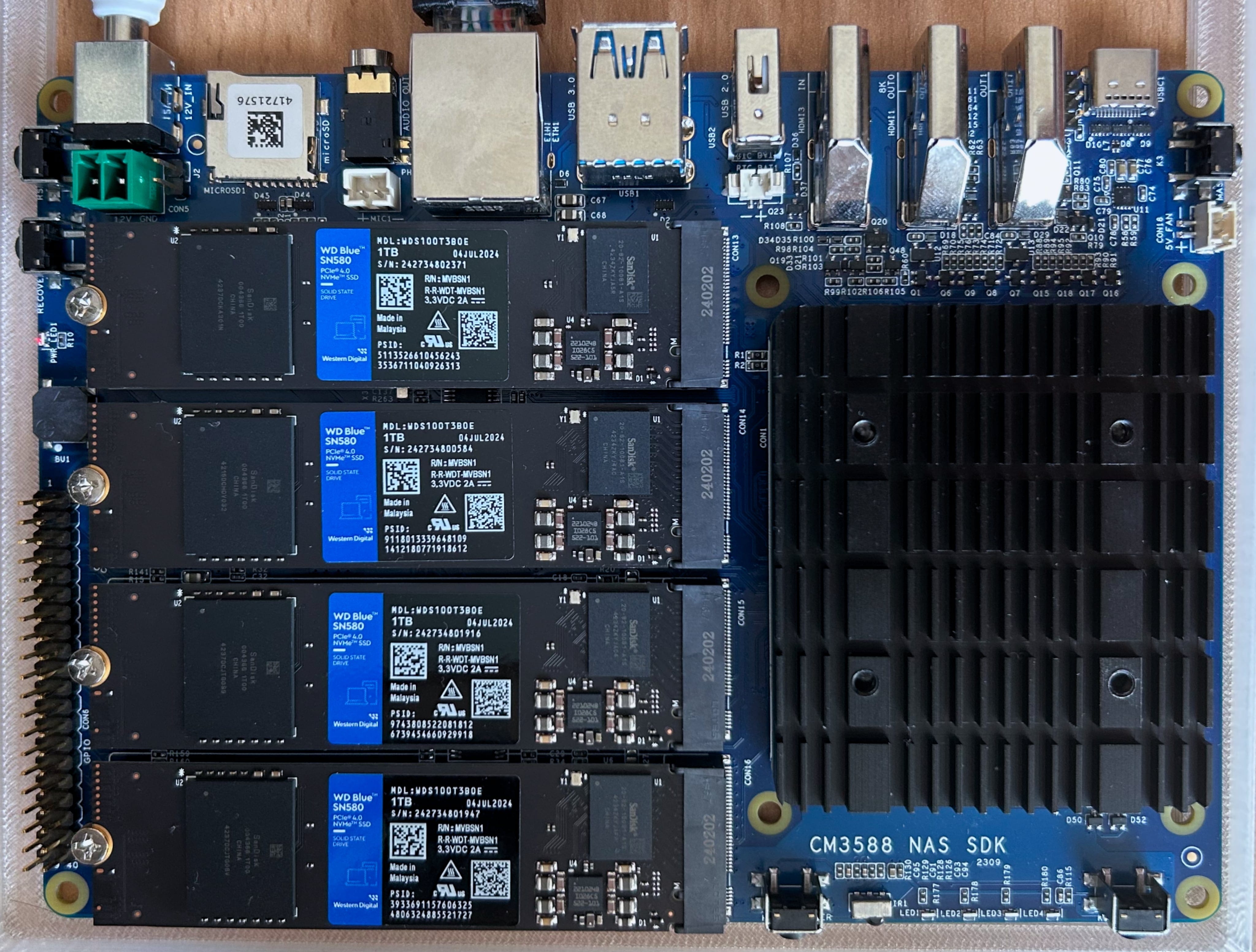

Then a bunch of other things happened, and a few months later I eventually got four WD Blue SN580 1TB NVMe SSDs at a good price and I could finally set it up.

Disclaimer: FriendlyELEC supplied me with a CM3588 compute module and NAS board free of charge, and I bought the SSDs and case with my own money. As usual, this post follows my review policy.

In the meantime a lot of people have looked at this board (it’s become a bit of an Internet sensation), so as usual I’ll try to focus on the things that I found interesting or that I think haven’t been covered in detail elsewhere.

Hardware

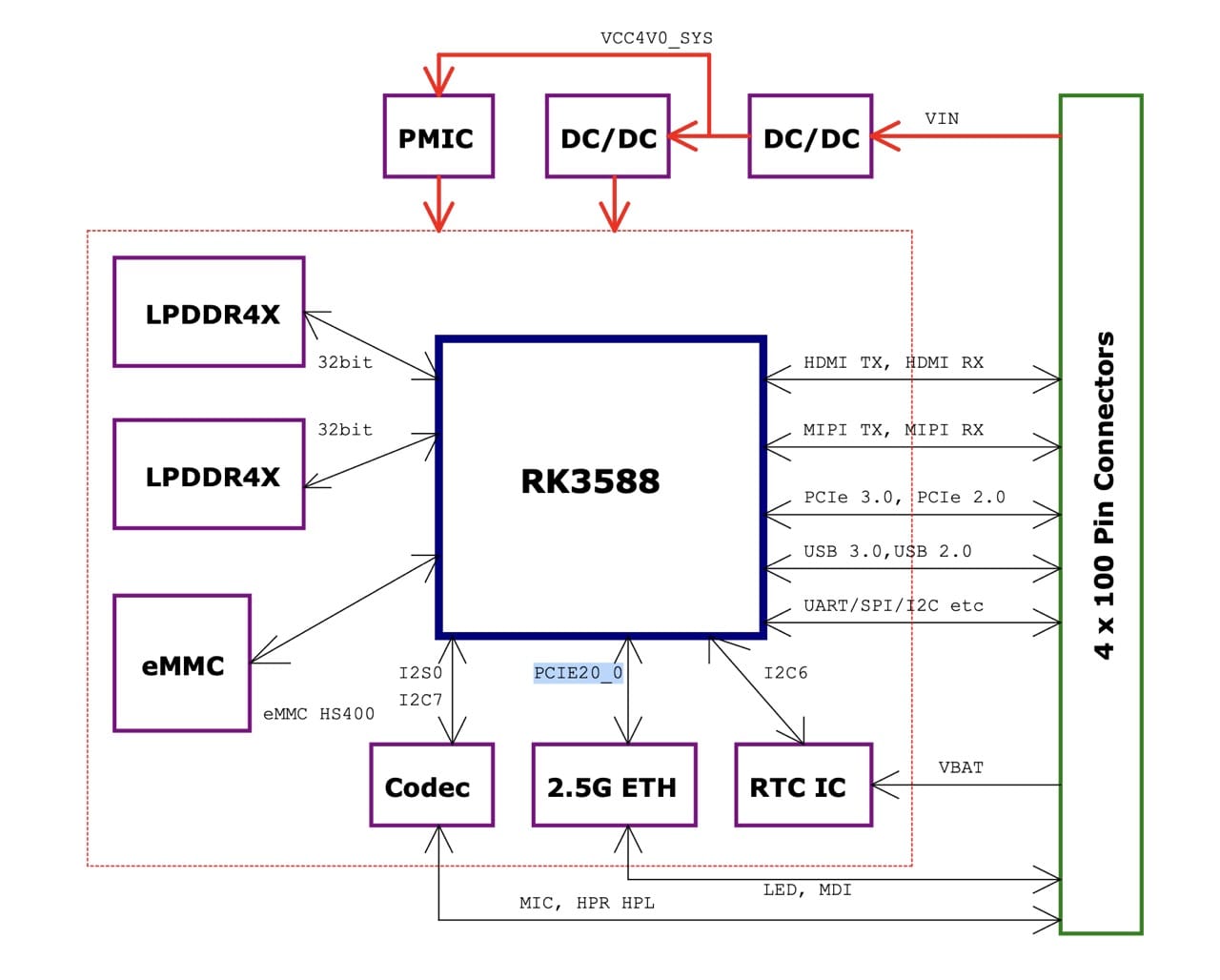

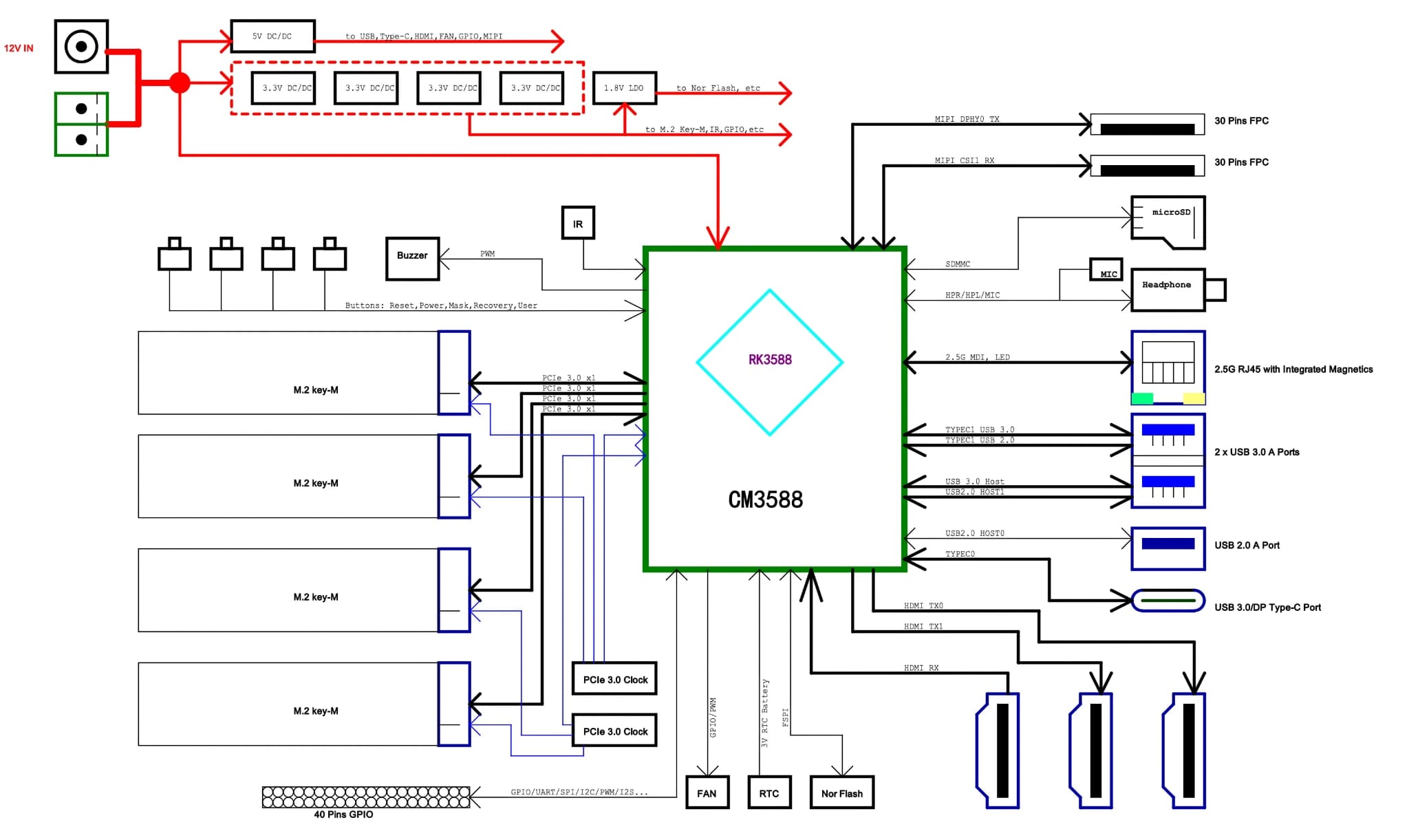

A key aspect of the NAS kit is that it is a two-part system: the CM3588 compute module and the NAS board. There are now a few different variants of the compute module, but the NAS board is a single-purpose carrier board that provides all the necessary I/O.

Let’s get the specs out of the way first, starting with the module:

- RK3588 SoC (4xA76/2.4GHz cores, 4xA55/1.8GHz cores, plus a Mali-G610 MP4 GPU and the usual 6-TOPS NPU)

- 16GB LPDDR4X rated at 2133MHz (which is great, as it’s a lot more than the usual 4GB or 8GB I got on most SBCs)

- 64GB eMMC (mine came with OpenMediaVault pre-installed, but I’ll get to that in a bit)

The module itself is 55x65mm and is not interchangeable with, say, the Raspberry Pi compute module: it has a custom form factor that is only compatible with the NAS board. The various SKU options for the kit are all variations in RAM and eMMC size (there is also a “Plus” variant that adds even more RAM and storage, as well as faster 2400MHz RAM).

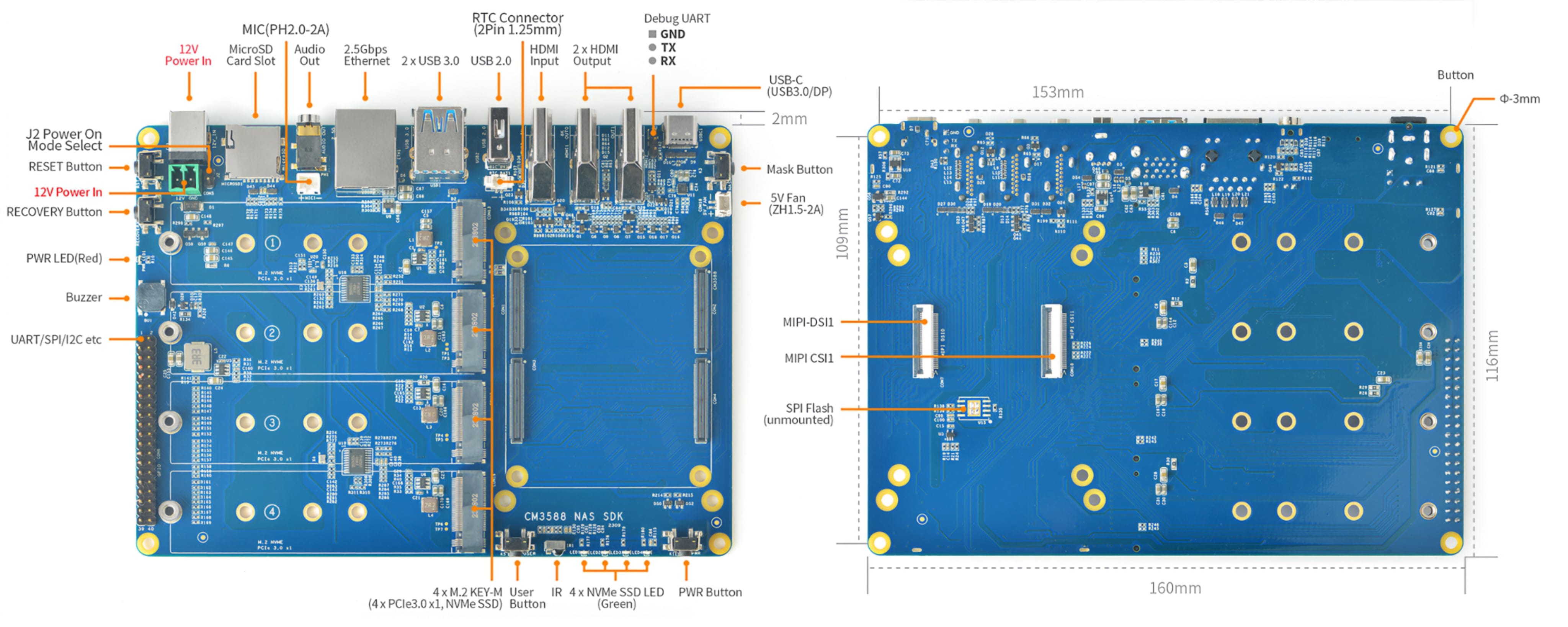

All the I/O is on the carrier, which is quite a bit larger than usual and compares more to a nano-ITX board than a typical SBC. The board itself is 153x116mm and looks like this:

Out of all the above, the most interesting bits are:

- 4xPCIe 3.0x1 NVMe SSD slots (which are the star of the show)

- A single 2.5 GbE port

- Three HDMI ports (two outputs and one input, just like the Orange Pi 5+)

- Three USB-A ports (two USB 3.0 and one USB 2.0)

- One USB-C port (USB 3.0/DP 1.4)

- The usual 40-pin GPIO header

- Two MIPI CSI/DSI connectors on the underside

- Barrel Jack (for a 12V 3A PSU, which was included in my box)

- And a plethora of other connectors and buttons (the usual MASKROM and reset buttons, a power button, and a user button, but also alternate power, RTC clock and fan connectors for a change)

One of the reasons I wanted to test it is that this is possibly the only RK3588 configuration I’ve seen that seems to use all the PCIe lanes the SoC provides–and perhaps to overshoot them a little, since the Realtek NIC is also on the PCIe bus (as a 2.0 device), so internal bandwidth might actually be over-subscribed. It’s hard to tell from the schematics, since the module exposes dedicated PCIe lanes for the NVMe slots and an MDI interface for the LAN port.

That, I think, is also why you don’t get Wi-Fi or a second LAN port. Digging into the schematics a bit shows only one PCIe 3.0 lane to the outside world:

…but four PCIe 3.0 lanes to the NVMe slots:

And given the main selling point of the board is the NVMe slots, I was quite interested to see how this setup would perform in practice.

As mentioned above, I populated the board with four WD Blue SN580 1TB NVMe SSDs. These are complete overkill (they are PCIe 4.0 x4 devices, and as such much faster than the board can drive), but I have future plans for them–and I also got an M.2 to SATA adapter so I can reuse the NAS kit later in a more conventional way.
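To put those link speeds side by side, here's a rough back-of-the-envelope sketch. It uses the commonly quoted per-lane signalling rates for PCIe 2.0, 3.0 and 4.0 (5, 8 and 16 GT/s) and their line encodings. It ignores packet and protocol overhead, so real-world numbers will be lower. The x1 width for the NIC's PCIe 2.0 link is my assumption, not something I checked on the board.

```python
# Per-lane signalling rate (GT/s) and line-encoding efficiency for each PCIe generation.
PCIE_GENERATIONS = {
    2: (5.0, 8 / 10),      # PCIe 2.0 uses 8b/10b encoding
    3: (8.0, 128 / 130),   # PCIe 3.0 uses 128b/130b encoding
    4: (16.0, 128 / 130),  # PCIe 4.0 keeps 128b/130b at twice the rate
}


def pcie_mb_per_s(gen, lanes):
    """Theoretical one-direction ceiling in MB/s (10**6 bytes), ignoring protocol overhead."""
    gt_per_s, efficiency = PCIE_GENERATIONS[gen]
    # GT/s -> usable Gbit/s -> MB/s, then scale by the number of lanes
    return gt_per_s * efficiency * 1000 / 8 * lanes


def ethernet_mb_per_s(gbps):
    """Raw Ethernet line rate in MB/s, before framing and TCP/SMB overhead."""
    return gbps * 1000 / 8


if __name__ == "__main__":
    rows = [
        ("One NVMe slot (PCIe 3.0 x1)", pcie_mb_per_s(3, 1)),
        ("All four NVMe slots", 4 * pcie_mb_per_s(3, 1)),
        ("SN580 native link (PCIe 4.0 x4)", pcie_mb_per_s(4, 4)),
        ("NIC link (PCIe 2.0, x1 assumed)", pcie_mb_per_s(2, 1)),
        ("2.5GbE port", ethernet_mb_per_s(2.5)),
    ]
    for label, mb in rows:
        print(f"{label:<34}{mb:8.1f} MB/s")
```

On paper, a single PCIe 2.0 lane (about 500 MB/s) comfortably covers a 2.5GbE port (312.5 MB/s). Each NVMe slot tops out just under 1 GB/s, which is exactly one eighth of what the SN580s could do on their native PCIe 4.0 x4 link.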

System Software

Since I had shelved this project for a couple of months, I had forgotten that the CM3588 shipped with OpenMediaVault pre-installed on the eMMC–which was a bit of a relief since I was expecting to have to go through the usual dance of using the Rockchip tools to install an OS I could work with.

Digging further, I realized that FriendlyELEC has put together a very clean setup–the SD card images you download from their site will automatically install to the eMMC (if present), and the OS is a no-frills Debian 12 with kernel 6.1 (which has since been automatically upgraded to 6.1.57 without any hitches).

Moreover, /etc/apt/sources.list and friends all listed perfectly ordinary Debian sources and the official OMV package repositories, without any proprietary repos (and no mystery meat packages that I could see). So instead of wiping it and installing vanilla Armbian and Proxmox on top, I decided to take OMV for a spin (I lost track of it years ago, and I was curious to see how it had evolved).

But the key point here is that, as such things go in the SBC world, this is pretty excellent software support right off the bat, and I didn’t feel any need to use Armbian even though the board is community supported.

OpenMediaVault Setup

I took a few different passes at this, starting with getting a feel for what was already installed:

There are a few things of note here:

- You get comprehensive LVM storage management and SMART monitoring out of the box

- System monitoring is also quite good

- File services include Samba, NFS, and my old buddy rsync, which is nice

- You get UPS support out of the box

- You also get comprehensive network service and user account support

But I wanted to do more–I wanted to see how I could handle virtualization, and, most importantly, I also wanted to set up ZFS for my testing.

So I set to work installing things the OMV way, by using the Web UI to add plugins for those–and I was quite surprised to see that almost everything worked out of the box.

I did have a few relevant issues, though:

- /etc/apt/sources.list.d/omvdocker.list (which tried to point to the mainstream Docker package repository) was broken, so I could only install Debian’s docker.io package instead of the mainstream one

- The LXC bridge didn’t work out of the box, but that was because the lxc-net service wasn’t running (which in turn was because I had to create /run/resolvconf manually)

- The ZFS plugin didn’t work out of the box, but that was because the kernel sources weren’t installed (which I’ll get to in a bit)

All of these seem like things the plugins themselves should have sorted out. I acknowledge that, other than the ZFS issue, neither OMV’s ARM packagers nor FriendlyELEC probably expected me to use the NAS kit the way I did, but I think it’s worth mentioning that the plugins could be a bit more robust.

Installing ZFS

I tried installing ZFS by just activating the OMV ZFS plugin, but that didn’t really work–I had the UI components loaded, but there were no ZFS kernel modules.

As it turned out, the DKMS build of the ZFS modules failed because the kernel headers weren’t actually installed–which was weird, since I would expect the plugin to sort that out.

Fortunately, the fix was easy–I just had to SSH in, install the kernel headers (I could have done that via the GUI as well, but I wanted to check loaded modules), and then re-run the ZFS plugin setup.
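If you want to check for this up front, DKMS builds out-of-tree modules such as ZFS against /lib/modules/<release>/build. The kernel headers package normally provides that path. Below is a minimal Python sketch of that check, plus a look at the loaded-module list. The function names are my own, and I'm not assuming anything about what FriendlyELEC's headers package is called.

```python
import os
import platform


def kernel_build_dir(release=None, root="/"):
    """Build tree DKMS compiles modules against; release defaults to `uname -r`."""
    release = release or platform.release()
    return os.path.join(root, "lib", "modules", release, "build")


def headers_installed(release=None, root="/"):
    """True if the build tree exists (isdir follows the usual symlink to the headers)."""
    return os.path.isdir(kernel_build_dir(release, root))


def zfs_module_loaded(proc_modules="/proc/modules"):
    """True if a module named exactly 'zfs' appears in the kernel's loaded-module list."""
    with open(proc_modules) as f:
        return any(line.split()[0] == "zfs" for line in f if line.strip())


if __name__ == "__main__":
    print("headers for", platform.release(), "->", headers_installed())
    if os.path.exists("/proc/modules"):
        print("zfs module loaded ->", zfs_module_loaded())
```

If the first check comes back False, the ZFS plugin's DKMS step has nothing to build against, which matches the symptom above (UI present, no kernel module).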

I then set autotrim=on and turned on lz4 compression using the GUI, and was good to go:
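For reference, the two GUI settings correspond to one pool property (autotrim) and one dataset property (compression). Here's a minimal Python sketch that prints the equivalent commands, or runs them with dry_run=False. The pool name pool is an assumption based on the /mnt/pool path in the fio command below, so adjust it to whatever your pool is actually called.

```python
import shlex
import subprocess

POOL = "pool"  # assumed pool name (inferred from the /mnt/pool path); change to match yours


def tuning_commands(pool, compression="lz4"):
    """CLI equivalents of the OMV GUI settings used in this post."""
    return [
        # autotrim is a pool-level property, so it is set through zpool
        ["zpool", "set", "autotrim=on", pool],
        # compression is a dataset property; child datasets inherit it from the root dataset
        ["zfs", "set", f"compression={compression}", pool],
    ]


def apply(commands, dry_run=True):
    """Print each command, and only execute it (as root) when dry_run is False."""
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    apply(tuning_commands(POOL))
```

Passing compression="off" gives the setting used for the second fio run. In both cases, ZFS only applies a compression change to data written afterwards, so existing blocks stay as they were written.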

Storage Performance

I then ran fio as usual:

```
fio --filename=/mnt/pool/test/file --size=5GB --direct=1 --rw=randrw --bs=64k --ioengine=libaio --iodepth=64 --runtime=120 --numjobs=4 --time_based --group_reporting --name=random-read-write --eta-newline=1
```

…and this gave me only 10K IOPS at 667MiB/s, which is quite a bit lower than, say, the 13K I got from the Orange Pi 5+ and a bit disappointing–but it can be explained by ZFS compression being on, since all the CPU cores fired up to 100%.

I then turned off compression and ran the test again, and got 13K IOPS at 882MiB/s–with the CPUs pretty heavily loaded but peaking at 95% instead of fully saturated.

So there’s a trade-off here–and given that we’re stuck with PCIe 3.0, I actually think either value is OK. After all, the CPU compression overhead might be a decent compromise if you intend to use ZFS to host development containers or serve compressible data.

Hitting the server from the network showed that it had no trouble serving data at line speed while peaking at 45-50% CPU–I got the equivalent of 2.3Gbps on a single SMB/CIFS connection, and I don’t think it would have any issues saturating a 5Gbps link.

I think this is perfectly OK for a fast home NAS, and the only real bottleneck seems to be the built-in Realtek 2.5GbE NIC.
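The unit conversions behind that conclusion are easy to trip over. fio reports binary MiB/s, while network links are rated in decimal Gbit/s. Here's a small sketch that puts the numbers above on the same scale. It ignores Ethernet, TCP and SMB overhead, and fio's figure is a mixed random read/write result, so treat this as a rough comparison only.

```python
MIB = 1024 * 1024  # fio's MiB


def gbps_to_mb_per_s(gbps):
    """Decimal Gbit/s -> decimal MB/s (1 MB = 10**6 bytes)."""
    return gbps * 1000 / 8


def mib_per_s_to_gbps(mib_per_s):
    """Binary MiB/s (as fio reports it) -> decimal Gbit/s."""
    return mib_per_s * MIB * 8 / 1e9


if __name__ == "__main__":
    print(f"2.3 Gbps over SMB        = {gbps_to_mb_per_s(2.3):.1f} MB/s")
    print(f"  share of 2.5GbE line   = {2.3 / 2.5:.0%}")
    print(f"882 MiB/s (no compress.) = {mib_per_s_to_gbps(882):.2f} Gbps")
    print(f"667 MiB/s (lz4)          = {mib_per_s_to_gbps(667):.2f} Gbps")
    print(f"5GbE line rate           = {gbps_to_mb_per_s(5):.1f} MB/s")
```

On these numbers, the pool's local fio throughput is roughly two to three times what the 2.5GbE port can carry. That is consistent with the NIC being the bottleneck.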

I have a 5GbE USB adapter (and it worked), but I didn’t run a proper end-to-end test–that will be coming in a later post after I switch to Proxmox and (with luck) find the time to re-wire one run of my home network.

OMV as a Hypervisor on ARM

And why would I switch to Proxmox, you might ask? Well, I wanted to see how well OMV could handle virtualization–and the answer is “not very well”.

This is not FriendlyELEC’s fault (especially since they don’t control the plugins that OMV uses), but it’s worth mentioning that it’s a clunky experience.

Docker Compose

The Docker plugin worked out of the box–I was expecting to have to install the docker.io package and then manually configure the plugin, but it was already there and working. The Compose one took a little fiddling to get right, and to be honest I don’t like its reliance on setting up shared folders for the compose files–even if you do get a workable GUI to manage them:

However, I didn’t spend any more time on Compose, as I typically rely on Portainer–I just wanted to see how viable it was out of the box, and the answer is that it’s perfectly OK.

KVM/LXC

LXC support was a bit more interesting–it’s joined at the hip to KVM via virt-tools, and besides the fact that KVM didn’t work out of the box (it assumes x86_64 hosts, which is wrong), it was a bit of a pain to set up.

Besides having to reset and reconfigure the LXC bridge (which, even after being set up, dies every now and then), accessing LXC containers via the console is awkward–you have to SSH in and then use virsh to access the console:

```
sudo virsh -c lxc:/// console omv-test
```

This, together with the fact that I prefer Proxmox and already rely on it to run, back up and migrate LXC containers across all my other ARM devices, is just another reason why I’ll be wiping the OMV install and replacing it with Proxmox–but, again, OMV is fine if you don’t need virtualization, and I was actually pleasantly surprised by how well it worked as a NAS.

Thermals, Power and CPU Performance

The massive heatsink on the CM3588 module is quite effective–I never saw the CPU go above 85°C even under full load, and the clock stayed pegged at 1.8GHz for most of the test, only dropping to 1.4GHz after 2 minutes of full load–and bouncing back up again when the temperature dropped to 75°C.

The CPU performance, however, was a bit under what I’ve seen from other RK3588 devices–I got 13.889 tokens/s, which is a bit lower than the 15.37 I got from the Banana Pi M7 (with the same model version), but I think that might be due to some variation in RAM speeds (and maybe just a bit better cooling on the M7, since it dissipates heat through the case).

Power Consumption

The NAS kit is a bit power-hungry, though–with the SSDs installed, I measured 5W at idle and 14-19W under load from the wall (using one of my Zigbee sockets), which, despite being low in the grand scheme of things, is enough to give me pause.
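To put those wattages in perspective, annual energy is just watts times hours. Here's a quick Python sketch using the measured idle (5W) and peak (19W) figures. The "10% of the time at load" split is a made-up assumption for illustration, not something I measured. I've left electricity prices out since they vary so much.

```python
HOURS_PER_YEAR = 24 * 365  # ignoring leap years


def kwh_per_year(watts, fraction_of_time=1.0):
    """Energy in kWh for drawing `watts` during the given fraction of a year."""
    return watts * HOURS_PER_YEAR * fraction_of_time / 1000


def blended_kwh_per_year(idle_w, load_w, load_fraction):
    """Split the year between idle and load; load_fraction is an assumed duty cycle."""
    return kwh_per_year(idle_w, 1 - load_fraction) + kwh_per_year(load_w, load_fraction)


if __name__ == "__main__":
    print(f"Idle all year (5W):        {kwh_per_year(5):6.1f} kWh")
    print(f"Peak load all year (19W):  {kwh_per_year(19):6.1f} kWh")
    # 10% at load is a hypothetical figure, not a measurement
    print(f"10% of the time at 19W:    {blended_kwh_per_year(5, 19, 0.10):6.1f} kWh")
```

Even running flat out all year, that works out to well under 200 kWh.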

By the way, the board has a voltage sensor, which is a nice touch:

```
# sensors
simple_vin-isa-0000
Adapter: ISA adapter
in0: 12.46 V

nvme-pci-33100
Adapter: PCI adapter
Composite: +43.9°C (low = -40.1°C, high = +83.8°C)
                   (crit = +87.8°C)
Sensor 1: +60.9°C (low = -273.1°C, high = +65261.8°C)
Sensor 2: +43.9°C (low = -273.1°C, high = +65261.8°C)

npu_thermal-virtual-0
Adapter: Virtual device
temp1: +59.2°C (crit = +115.0°C)

nvme-pci-11100
Adapter: PCI adapter
Composite: +47.9°C (low = -40.1°C, high = +83.8°C)
                   (crit = +87.8°C)
Sensor 1: +65.8°C (low = -273.1°C, high = +65261.8°C)
Sensor 2: +47.9°C (low = -273.1°C, high = +65261.8°C)

center_thermal-virtual-0
Adapter: Virtual device
temp1: +59.2°C (crit = +115.0°C)

bigcore1_thermal-virtual-0
Adapter: Virtual device
temp1: +59.2°C (crit = +115.0°C)

soc_thermal-virtual-0
Adapter: Virtual device
temp1: +59.2°C (crit = +115.0°C)

nvme-pci-0100
Adapter: PCI adapter
Composite: +45.9°C (low = -40.1°C, high = +83.8°C)
                   (crit = +87.8°C)
Sensor 1: +61.9°C (low = -273.1°C, high = +65261.8°C)
Sensor 2: +45.9°C (low = -273.1°C, high = +65261.8°C)

tcpm_source_psy_6_0022-i2c-6-22
Adapter: rk3x-i2c
in0: 0.00 V (min = +0.00 V, max = +0.00 V)
curr1: 0.00 A (max = +0.00 A)

nvme-pci-22100
Adapter: PCI adapter
Composite: +47.9°C (low = -40.1°C, high = +83.8°C)
                   (crit = +87.8°C)
Sensor 1: +66.8°C (low = -273.1°C, high = +65261.8°C)
Sensor 2: +47.9°C (low = -273.1°C, high = +65261.8°C)

gpu_thermal-virtual-0
Adapter: Virtual device
temp1: +59.2°C (crit = +115.0°C)

littlecore_thermal-virtual-0
Adapter: Virtual device
temp1: +59.2°C (crit = +115.0°C)

bigcore0_thermal-virtual-0
Adapter: Virtual device
temp1: +59.2°C (crit = +115.0°C)
```
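That output is a bit long to eyeball. Here's a minimal Python sketch that condenses it into the non-zero supply voltage and one composite temperature per NVMe drive. The function names are my own, and the sketch assumes the plain-text layout of sensors output:

- a chip name line
- an Adapter: line
- label: value unit readings
- "(crit = ...)" continuation lines, which are skipped

```python
import re

# "label: value unit", e.g. "in0: 12.46 V" or "Composite: +43.9°C (low = ...)"
READING = re.compile(r"^([^:]+):\s+([+-]?\d+(?:\.\d+)?)\s*(°C|V|A)")


def parse_sensors(text):
    """Map chip name -> {label: (value, unit)} from plain `sensors` output."""
    chips, current = {}, None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or raw[0].isspace() or line.startswith(("(", "#")):
            continue  # blank separators, "(crit = ...)" continuations, shell prompt
        if ":" not in line:
            current = chips.setdefault(line, {})  # chip header, e.g. "nvme-pci-0100"
            continue
        match = READING.match(line)
        if current is not None and match:  # "Adapter: ..." lines never match
            label, value, unit = match.groups()
            current[label.strip()] = (float(value), unit)
    return chips


def summarize(chips):
    """Return (non-zero in0 voltages, NVMe 'Composite' temperatures) keyed by chip name."""
    volts = {name: vals["in0"][0] for name, vals in chips.items()
             if vals.get("in0", (0, ""))[1] == "V" and vals["in0"][0] > 0}
    nvme = {name: vals["Composite"][0] for name, vals in chips.items()
            if name.startswith("nvme") and "Composite" in vals}
    return volts, nvme
```

The zero-voltage filter drops the idle USB-C (tcpm) source, so only the 12V barrel jack input is reported. One way to feed it live data is `summarize(parse_sensors(subprocess.run(["sensors"], capture_output=True, text=True).stdout))`.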

Case and Ventilation

A couple of weeks ago, after getting the SSDs and starting testing, I finally ordered the case–which is a very sturdy piece of kit, and includes ventilation holes, an RTC battery and a fan.

Most of my testing was done without the case, but I did install it for the final ollama run and temperature checks, and am pretty happy with it–it’s compact, sturdy, well made (the feet contain screws that hold both halves together in an ingenious way) and has a lot of ventilation.

I should mention that the fan supplied with the case was… very disappointing. The 40x40x7mm 5V fan was very noisy and had almost unbearable coil whine both while ramping up and at peak speeds, and wins my personal award for the worst fan I’ve ever used.

So all the tests were done without the fan, and I honestly don’t think it’s necessary for most use cases–the heatsink seems more than enough to keep the system cool.

However, I have ordered a couple of replacements, since my server closet gets rather toasty in summer and the current temperature isn’t really representative of what I’ll see next year. I’ll update this post with the results once I get them.

Conclusion

Hardware-wise, I am quite happy with the CM3588 NAS kit–it’s a well thought-out solution that works very well out of the box if you want a small fire-and-forget NAS.

NVMe storage is still more expensive than SATA (spinning rust or otherwise), but there is clearly a trend towards NVMe in the small NAS world, and even at PCIe 3.0 speeds it’s a good fit for a home or small office server. Most people will be perfectly happy with the Docker support to run a few containers, but I need a bit more control and flexibility–and that’s where Proxmox comes in.

My current plan is to get Proxmox running on it and set up the ZFS pool both to consolidate all my ARM LXC containers and to provide at least 2TB of fast shared storage for machine learning models and maybe even video editing (which I can’t do off my Synology). That will, however, require more storage, which is a bit out of my budget at the moment.

Consolidating all (or at least most) of my ARM devices into a single server will compensate for the slightly higher idle power consumption, and the combination of ZFS RAID and Proxmox (with both LXC snapshots and migration) will make it easy to preserve my home automation setup and other services.

I’ll put up a follow-up post once I’ve done that, and I’ll also be testing the 5GbE USB adapter and the M.2 to SATA adapter I got for the SSDs–so stay tuned.