Here’s a funny story: Some time ago, I contacted TerraMaster to inquire if they were interested in having me review their products, but they declined.

And then, unexpectedly, I got my hands on one – in particular, an F4-424 Max, which is currently one of their most interesting SOHO products thanks to its dual 10GbE ports and a powerful CPU.

Disclaimer: Since I received the unit for free (other than customs fees, and not from TerraMaster itself), I am treating this as an ordinary review. I bought the additional 96GB of RAM myself and provided my own SSDs and 4TB hard drives.

Hardware

Unsurprisingly, the F4-424 Max looks like a typical NAS–the front is dominated by four hot-swappable bays and the back by a fairly quiet fan, so it is easy to overlook the compact motherboard located on the right side of the case.

Removing a few screws allows you to slide off the entire side panel and access the machine itself with relative ease.

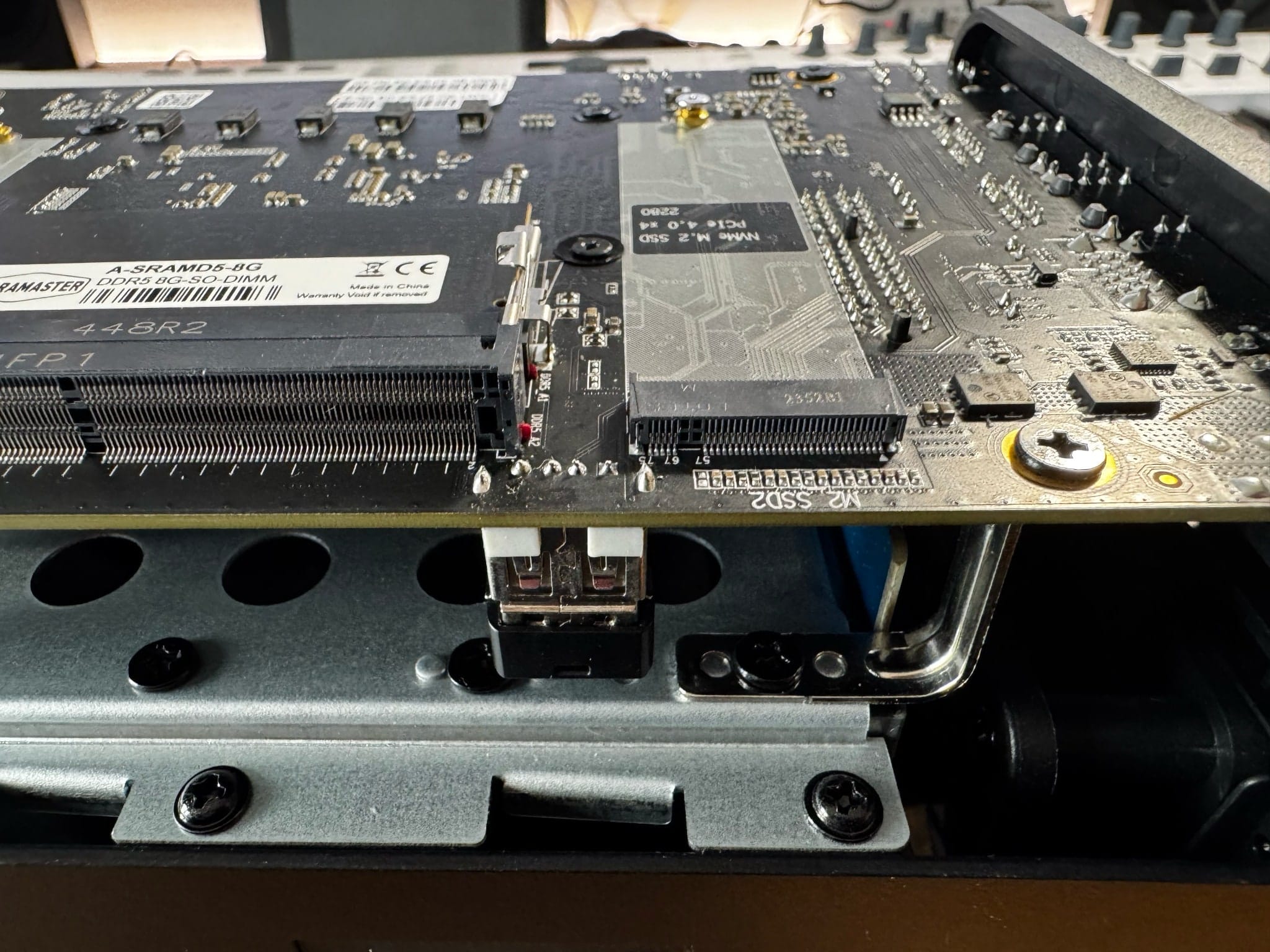

The first thing you’ll notice is that the board is dominated by dual NVMe and DIMM slots (mine came pre-populated with a single 8GB module), and that you’re actually looking at the underside of the machine.

The CPU is on the other side, under a rather beefy heatsink placed as close to the drives (and the main airflow path) as possible, which is reassuring considering that it is a pretty powerful chip as NAS CPUs go.

CPU Specs

The i5-1235U this ships with is a 12th Gen Intel CPU with 2 performance cores and 8 efficiency cores, which is a bit of a departure from the usual slow-poke NAS CPUs from three or four generations ago.

This chip is rated for 15-55W and can turbo up to 4.4GHz, which is quite speedy. Furthermore, each performance core runs two threads (Hyper-Threading) while each efficiency core runs one, so this is a 10-core/12-thread machine.
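The thread count follows directly from the core mix. The short sketch below just does that arithmetic, and also shows why multiplying the "Thread(s) per core: 2" and "Core(s) per socket: 10" values from the lscpu output further down would over-count on a hybrid chip like this one.

```python
# Core/thread arithmetic for a hybrid CPU like the i5-1235U.
P_CORES, THREADS_PER_P_CORE = 2, 2  # performance cores have Hyper-Threading
E_CORES, THREADS_PER_E_CORE = 8, 1  # efficiency cores run a single thread each

cores = P_CORES + E_CORES
threads = P_CORES * THREADS_PER_P_CORE + E_CORES * THREADS_PER_E_CORE
print(f"{cores} cores / {threads} threads")  # 10 cores / 12 threads

# lscpu reports "Thread(s) per core: 2" and "Core(s) per socket: 10", but the
# per-core figure only holds for the P-cores, so multiplying them over-counts.
naive_threads = 10 * 2
print(f"naive estimate: {naive_threads} threads (actual: {threads})")
```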

And it sports Iris Xe graphics, which is positively luxurious for a NAS (and pretty amazing for remote desktop services and video transcoding). Since you actually get an HDMI port, you can even use it as a desktop if you really want to.

Compute Performance

The fact that the i5-1235U has only two performance cores turned out to be more noticeable than I expected, since the first few virtual machines I moved over were Remote Desktop servers, where interactive use sometimes felt slower than on the aging i7 I migrated them from.

That’s resource contention at work–interactive tasks get shuffled to and from the efficiency cores as other workloads keep claiming the performance cores, so things are fine as long as you don’t expect those desktops to be overly snappy.
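If you want to see which logical CPUs are performance cores (for example, before pinning an interactive VM to them), recent Linux kernels expose the hybrid topology in sysfs. This sketch assumes the `cpu_core` and `cpu_atom` PMU directories under `/sys/devices`, where kernels with hybrid PMU support list P-core and E-core CPUs respectively; the function names are illustrative, and the actual numbering should be read from the machine rather than assumed.

```python
from pathlib import Path

def parse_cpulist(text: str) -> list[int]:
    """Expand a kernel cpulist such as '0-3,8' into [0, 1, 2, 3, 8].

    Stride syntax (e.g. '0-7:2') is not handled in this sketch.
    """
    cpus: list[int] = []
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            first, last = part.split("-")
            cpus.extend(range(int(first), int(last) + 1))
        else:
            cpus.append(int(part))
    return cpus

def hybrid_cpus(sysfs: Path = Path("/sys/devices")) -> dict[str, list[int]]:
    """Map core type to logical CPU numbers, if the kernel exposes hybrid PMUs."""
    result: dict[str, list[int]] = {}
    for pmu, label in (("cpu_core", "performance"), ("cpu_atom", "efficiency")):
        cpus_file = sysfs / pmu / "cpus"
        if cpus_file.exists():
            result[label] = parse_cpulist(cpus_file.read_text())
    return result

if __name__ == "__main__":
    print(hybrid_cpus() or "no hybrid core information found")
```

On an i5-1235U you would expect four logical CPUs in the performance list and eight in the efficiency list. Recent Proxmox releases offer a per-VM CPU affinity option that such a list could feed, though whether pinning actually helps interactive desktops here is untested.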

For non-interactive services, however, the i5-1235U was a massive upgrade. All the background services I tried (Plex, Samba, databases, etc.) ran much faster than on either my Synology NAS or the N100 mini-PCs I had been running them on, since the efficiency cores are no slouches either.

Transcoding Performance

The Iris Xe graphics are indeed impressive. I don’t have a good benchmark for H.264 RDP acceleration (which is still very experimental) or server-side glamor rendering (which I have been using for years), but I was able to set up HandBrake inside a Docker container and run a few tests with the H.265 QuickSync encoder. The F4-424 Max was able to batch transcode 1080p H.264 files into H.265 at almost 120 frames per second, which is not too shabby considering it is nearly half of what my Macs can do (you just can’t beat Metal at this).

Having decent H.265 hardware encoding on a NAS is really handy, and although there aren’t going to be a gazillion video streams coursing through my network, this is definitely a point where the F4-424 Max shines when compared to most other NAS devices.
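To put ~120 frames per second into perspective, encoding time is just the number of frames divided by encoder throughput. The sketch below uses a hypothetical two-hour, 24 fps film; the 240 fps line is only a rough doubling inferred from the comparison with the Macs above, not a separate measurement.

```python
def encode_minutes(duration_min: float, source_fps: float, encode_fps: float) -> float:
    """Wall-clock minutes to transcode a clip at a steady encoder throughput."""
    total_frames = duration_min * 60 * source_fps
    return total_frames / encode_fps / 60

# Hypothetical input: a two-hour film at 24 fps (172,800 frames).
print(encode_minutes(120, 24, 120))  # ~120 fps QuickSync: 24.0 minutes
print(encode_minutes(120, 24, 240))  # roughly twice the throughput: 12.0 minutes
```

In other words, at ~120 fps a 24 fps source encodes about five times faster than real time.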

Power Consumption

At idle, the F4-424 Max hovered between 24 and 28W with an empty chassis. Each 3.5” HDD added another 5-7W, and a full HDD load-out was closer to 55W at idle or low CPU load. That is half of what the power adapter is rated for, so there seems to be plenty of headroom, and even if you really stress the CPU there shouldn’t be any issue.
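Those figures are consistent with each other: the per-drive increments add up to roughly the full-chassis number. The sketch below does that arithmetic and, assuming the box runs around the clock at ~55W, what that amounts to per year (electricity prices are left out, since they vary too much to be useful).

```python
# Measured figures from the paragraph above (watts).
IDLE_EMPTY_W = (24, 28)  # empty chassis
PER_HDD_W = (5, 7)       # added per 3.5" drive
BAYS = 4

low = IDLE_EMPTY_W[0] + BAYS * PER_HDD_W[0]
high = IDLE_EMPTY_W[1] + BAYS * PER_HDD_W[1]
print(f"expected idle draw, four HDDs: {low}-{high} W")  # 44-56 W

# Assumption: ~55 W continuously, 24/7, for a full year.
kwh_per_year = 55 * 24 * 365 / 1000
print(f"~{kwh_per_year:.0f} kWh per year")  # ~482 kWh
```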

Boot Drive

Right next to the CPU heatsink, you’ll find an internal USB port with a tiny 8GB drive, which is used to boot the system.

I ended up taking mine out and taping it to one of the internal brackets for safekeeping, but not before having a look at the TerraMaster operating system.

TerraMaster OS

If you’ve been paying attention to modern NAS offerings, you’ll know that pretty much every NAS vendor under the sun now includes its own little App Store, and TOS has a pretty decent selection.

A single screen of the store isn’t a fair sample of what’s available, though. Since I was very interested in understanding what kind of support TOS had for cloud backup, I spent a little while digging into all the options I could find. Alas, there’s no apparent way to back up to Azure Storage (unlike on my Synology), although other pieces of my current stack (like Tailscale) were easy to find.

A nice find was that TOS is essentially Ubuntu 22.04 (Jammy), which is great if you want to stick to the base OS. And you can host Docker containers and VMs directly on TOS if you want to, which will likely be more than enough for most people.

But having Ubuntu as a base means that you should be able to do a little careful customization as long as you play nice.

For the record, here’s what lshw and friends had to say on the base system with no hard drives attached (only the two NVMe SSDs were installed):

```
❯ lshw
-bash: lshw: command not found

❯ df
Filesystem 1K-blocks Used Available Use% Mounted on
/dev/md9 7769268 2096780 5256336 29% /
tmpfs 51200 28 51172 1% /dev/shm
tmpfs 1574176 8280 1565896 1% /run
tmpfs 5120 0 5120 0% /run/lock
tmpfs 524288 1224 523064 1% /tmp
tmpfs 787084 0 787084 0% /run/user/0

❯ lspci
00:00.0 Host bridge: Intel Corporation Device 4601 (rev 04)
00:02.0 VGA compatible controller: Intel Corporation Device 4628 (rev 0c)
00:04.0 Signal processing controller: Intel Corporation Alder Lake Innovation Platform Framework Processor Participant (rev 04)
00:06.0 PCI bridge: Intel Corporation 12th Gen Core Processor PCI Express x4 Controller #0 (rev 04)
00:06.2 PCI bridge: Intel Corporation 12th Gen Core Processor PCI Express x4 Controller #2 (rev 04)
00:14.0 USB controller: Intel Corporation Alder Lake PCH USB 3.2 xHCI Host Controller (rev 01)
00:14.2 RAM memory: Intel Corporation Alder Lake PCH Shared SRAM (rev 01)
00:15.0 Serial bus controller: Intel Corporation Alder Lake PCH Serial IO I2C Controller #0 (rev 01)
00:15.1 Serial bus controller: Intel Corporation Alder Lake PCH Serial IO I2C Controller #1 (rev 01)
00:16.0 Communication controller: Intel Corporation Alder Lake PCH HECI Controller (rev 01)
00:17.0 SATA controller: Intel Corporation Alder Lake-P SATA AHCI Controller (rev 01)
00:1c.0 PCI bridge: Intel Corporation Device 51bc (rev 01)
00:1c.6 PCI bridge: Intel Corporation Device 51be (rev 01)
00:1d.0 PCI bridge: Intel Corporation Device 51b2 (rev 01)
00:1e.0 Communication controller: Intel Corporation Alder Lake PCH UART #0 (rev 01)
00:1e.3 Serial bus controller: Intel Corporation Device 51ab (rev 01)
00:1f.0 ISA bridge: Intel Corporation Alder Lake PCH eSPI Controller (rev 01)
00:1f.3 Audio device: Intel Corporation Alder Lake PCH-P High Definition Audio Controller (rev 01)
00:1f.4 SMBus: Intel Corporation Alder Lake PCH-P SMBus Host Controller (rev 01)
00:1f.5 Serial bus controller: Intel Corporation Alder Lake-P PCH SPI Controller (rev 01)
01:00.0 Non-Volatile memory controller: Shenzhen Longsys Electronics Co., Ltd. Device 1602 (rev 01)
02:00.0 Non-Volatile memory controller: Sandisk Corp Device 5017 (rev 01)
03:00.0 Ethernet controller: Aquantia Corp. Device 14c0 (rev 03)
04:00.0 Ethernet controller: Aquantia Corp. Device 14c0 (rev 03)
05:00.0 SATA controller: ASMedia Technology Inc. ASM1062 Serial ATA Controller (rev 02)

❯ lsblk
NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS
nbd0 43:0 0 0B 0 disk
nbd1 43:32 0 0B 0 disk
nbd2 43:64 0 0B 0 disk
nbd3 43:96 0 0B 0 disk
nbd4 43:128 0 0B 0 disk
nbd5 43:160 0 0B 0 disk
nbd6 43:192 0 0B 0 disk
nbd7 43:224 0 0B 0 disk
sdzb 259:0 0 931.5G 0 disk
├─sdzb1 259:2 0 285M 0 part
├─sdzb2 259:3 0 7.6G 0 part
│ └─md9 9:9 0 7.6G 0 raid1 /
├─sdzb3 259:4 0 1.9G 0 part
│ └─md8 9:8 0 1.9G 0 raid1 [SWAP]
└─sdzb4 259:5 0 921.7G 0 part
sdza 259:1 0 953.9G 0 disk
├─sdza1 259:6 0 285M 0 part
├─sdza2 259:7 0 7.6G 0 part
│ └─md9 9:9 0 7.6G 0 raid1 /
├─sdza3 259:8 0 1.9G 0 part
│ └─md8 9:8 0 1.9G 0 raid1 [SWAP]
└─sdza4 259:9 0 944.1G 0 part
nbd8 43:256 0 0B 0 disk
nbd9 43:288 0 0B 0 disk
nbd10 43:320 0 0B 0 disk
nbd11 43:352 0 0B 0 disk
nbd12 43:384 0 0B 0 disk
nbd13 43:416 0 0B 0 disk
nbd14 43:448 0 0B 0 disk
nbd15 43:480 0 0B 0 disk

❯ lscpu
Architecture: x86_64
CPU op-mode(s): 32-bit, 64-bit
Address sizes: 39 bits physical, 48 bits virtual
Byte Order: Little Endian
CPU(s): 12
On-line CPU(s) list: 0-11
Vendor ID: GenuineIntel
Model name: 12th Gen Intel(R) Core(TM) i5-1235U
CPU family: 6
Model: 154
Thread(s) per core: 2
Core(s) per socket: 10
Socket(s): 1
Stepping: 4
CPU max MHz: 4400.0000
CPU min MHz: 400.0000
BogoMIPS: 4992.00
Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc art arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc cpuid aperfmperf tsc_known_freq pni pclmulqdq dtes64 monitor ds_cpl vmx smx est tm2 ssse3 sdbg fma cx16 xtpr pdcm sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm 3dnowprefetch cpuid_fault epb ssbd ibrs ibpb stibp ibrs_enhanced tpr_shadow vnmi flexpriority ept vpid ept_ad fsgsbase tsc_adjust bmi1 avx2 smep bmi2 erms invpcid rdseed adx smap clflushopt clwb intel_pt sha_ni xsaveopt xsavec xgetbv1 xsaves split_lock_detect avx_vnni dtherm ida arat pln pts hwp hwp_notify hwp_act_window hwp_epp hwp_pkg_req hfi umip pku waitpkg gfni vaes vpclmulqdq rdpid movdiri movdir64b fsrm md_clear serialize arch_lbr ibt flush_l1d arch_capabilities
Virtualization features:
  Virtualization: VT-x
Caches (sum of all):
  L1d: 352 KiB (10 instances)
  L1i: 576 KiB (10 instances)
  L2: 6.5 MiB (4 instances)
  L3: 12 MiB (1 instance)
NUMA:
  NUMA node(s): 1
  NUMA node0 CPU(s): 0-11
Vulnerabilities:
  Gather data sampling: Not affected
  Itlb multihit: Not affected
  L1tf: Not affected
  Mds: Not affected
  Meltdown: Not affected
  Mmio stale data: Not affected
  Retbleed: Not affected
  Spec rstack overflow: Not affected
  Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl
  Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization
  Spectre v2: Vulnerable: eIBRS with unprivileged eBPF
  Srbds: Not affected
  Tsx async abort: Not affected

user@TNAS:~# lsusb
Bus 002 Device 001: ID 1d6b:0003 Linux Foundation 3.0 root hub
Bus 001 Device 002: ID 058f:6387 Alcor Micro Corp. Flash Drive
Bus 001 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub
```

Upgrading the Hardware

One of the main reasons I was interested in the F4-424 Max is its versatility. Unlike Synology devices, it has a regular BIOS that lets you run nearly any software, which opens up a lot of possibilities–and, naturally, I chose to install Proxmox.

But before doing that, I had to bump up the specs for reliability and performance, so I dropped in two identical 1TB WD Black SSDs to run the OS and 96GB of Crucial DDR5 RAM to have loads of space for disk buffers, ZFS, VMs and LXC containers.

These were a sizable investment but completely worth it, since the RAM in particular turns the F4-424 Max into a decently fast hypervisor platform.

I was unable to get the RAM running faster than DDR5-4800, though, which was a bit disappointing–the BIOS appears to allow for higher speeds, but I couldn’t get them to work (so much for DDR5 training, I guess). To be fair, 4800MT/s is the highest DDR5 speed Intel officially lists for the i5-1235U.
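For a sense of what the memory speed means on paper, theoretical peak bandwidth is transfers per second times bytes per transfer times channels. This sketch assumes the 96GB is split across both SO-DIMM slots, each on its own 64-bit (8-byte) channel; the 5600 line is a hypothetical comparison, and real-world throughput will be lower than either peak.

```python
def peak_bandwidth_gb_s(mega_transfers: int, channels: int, bytes_per_transfer: int = 8) -> float:
    """Theoretical peak memory bandwidth in GB/s (64-bit channel = 8 bytes per transfer)."""
    return mega_transfers * 1e6 * bytes_per_transfer * channels / 1e9

print(peak_bandwidth_gb_s(4800, 2))  # DDR5-4800, dual channel: 76.8
print(peak_bandwidth_gb_s(5600, 2))  # hypothetical DDR5-5600, for comparison: 89.6
```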

Installing Proxmox

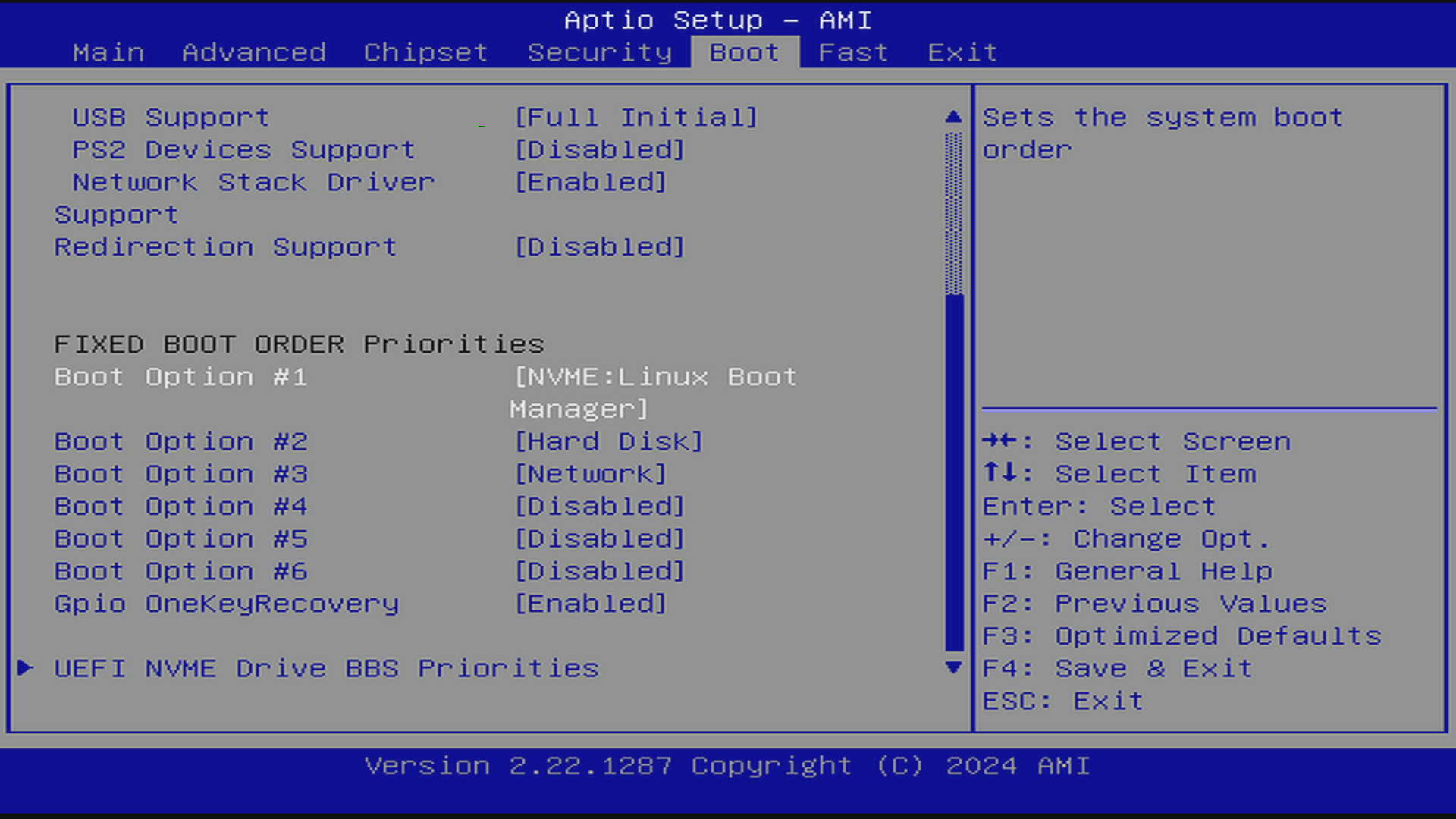

This was both easier and harder than expected–installing the system with a bootable ZFS RAID1 across both SSDs was just a setup option, but getting it to boot from them was not: it kept booting from the TerraMaster USB drive regardless of what I set in the BIOS boot order.

After nearly a dozen failed attempts at setting things up on that BIOS page, I decided to unplug the internal USB drive (and thus remove it from the equation). That led to the machine stalling on boot, but I could hold down F12 to confirm that the Linux boot loader was set as the default.

And yet I had to manually select it to boot, which was frustrating.

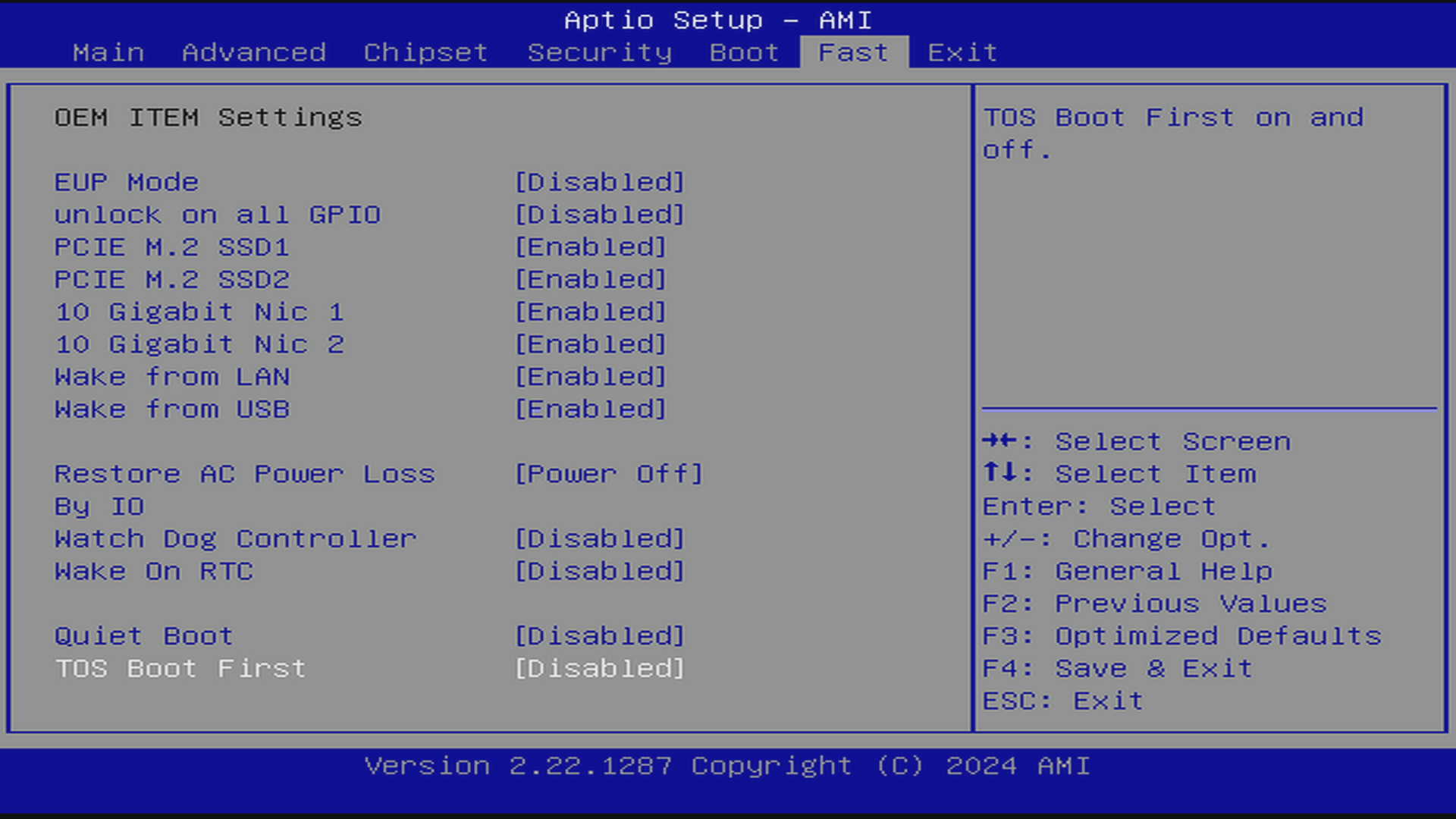

The fix turned out to be an extra BIOS option (near the end of a different, almost unrelated page) that tells the machine to boot from the TOS drive:

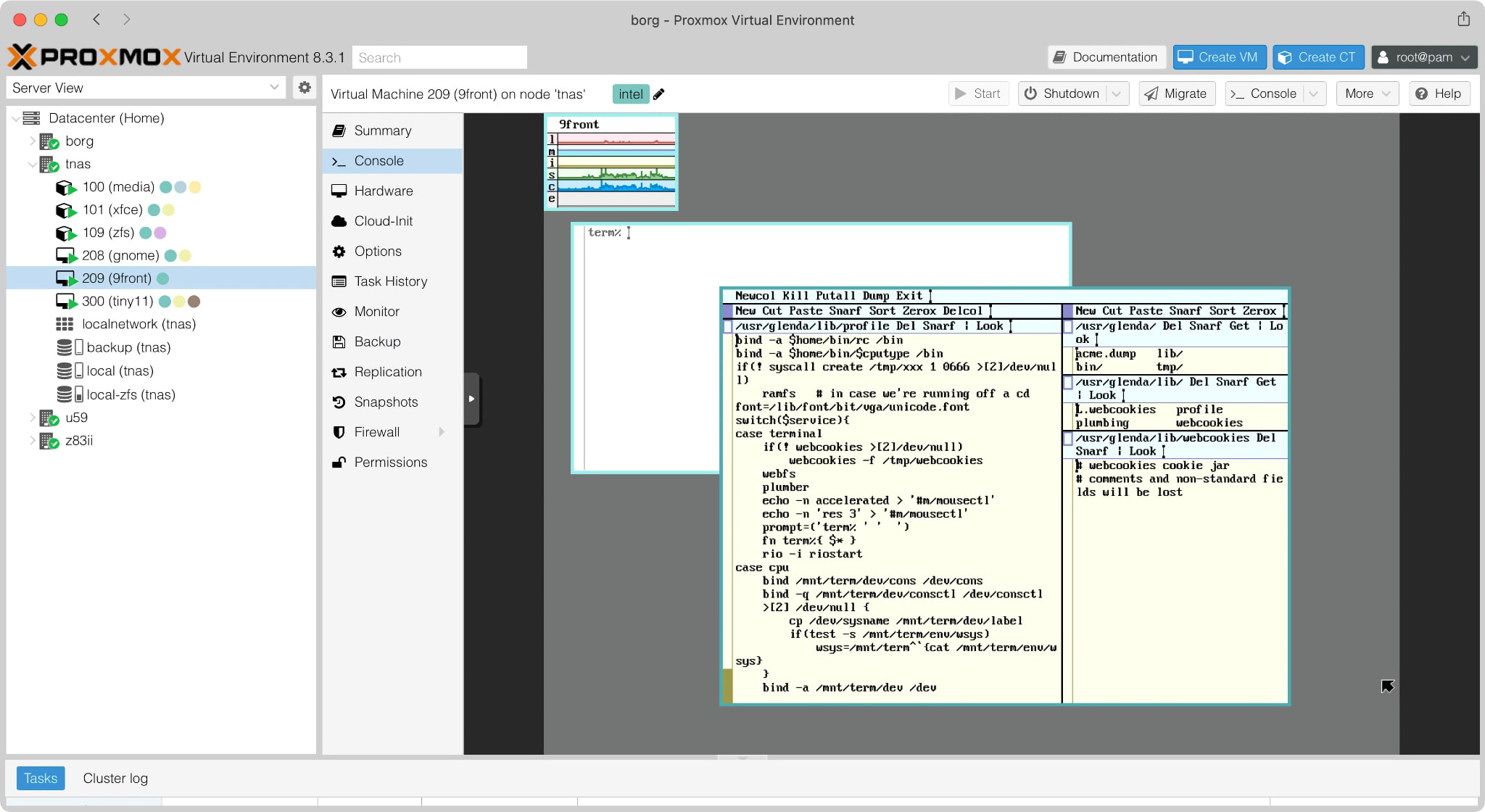

After disabling that option, everything worked fine, and I was soon able to migrate things across from rogueone (which is where most of my daily driver environments lived) and decommission it.

NAS Setup

Setting up ZFS took weeks because, well, I didn’t have enough HDDs. The new ones I ordered were delayed (Amazon ran out of stock, so it was a toss-up whether I’d even get them before Christmas), and they were actually meant for my Synology, so I had to wait for its array to rebuild as I rotated out the old 4TB drives one by one and moved them into the F4-424 Max (which, incidentally, was a completely tool-less process).

Once that was done, it took me about two minutes to re-partition them and group them into a ZFS pool (local-zfs) using the Proxmox GUI.

With Proxmox handling ZFS, all I needed to do now was to set up file sharing–and the way to do that with the least overhead is to set up an LXC container to run Samba.

This approach has a few neat benefits:

- I can provision Samba users inside the container (`smbpasswd -a` is your friend), keeping them off other systems.

- I can set up Samba management (say, via Webmin) without risking messing with Proxmox.

- I can back up the entire container (and its configuration) with zero hassle.

- I can use Samba’s “recycle bin” feature to provide a safety net for data (this is so handy that I use SMB mounts in most of my Docker setups).

- I can do more advanced tricks like masking out the host’s UID/GID values and using my own, or set up quotas later if I want to.

But first, I needed to create a shareable volume and mount it inside the container:

```
# Create a volume for sharing with LXCs
❯ zfs create local-zfs/folders

# Create a mountpoint for a container running Samba
❯ mkdir /local-zfs/folders/shares
# Bind-mount it into container 109 at /mnt/shares
❯ pct set 109 -mp0 /local-zfs/folders/shares,mp=/mnt/shares
```
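If container 109 is unprivileged (an assumption, since that isn't stated here), files it writes to the bind mount show up on the host under shifted IDs. Proxmox's default mapping offsets container IDs 0-65535 by 100000; the sketch below hard-codes that default to show why, for example, the `nobody` user inside the container appears as UID 165534 on the host, which is the mapping any UID/GID masking tricks have to account for.

```python
# Proxmox's default subordinate ID range for unprivileged containers
# (root:100000:65536 in /etc/subuid and /etc/subgid).
SUBID_BASE = 100_000
SUBID_COUNT = 65_536

def host_id(container_id: int) -> int:
    """Host-side UID/GID for an ID inside an unprivileged container (default map)."""
    if not 0 <= container_id < SUBID_COUNT:
        raise ValueError(f"{container_id} is outside the mapped range")
    return SUBID_BASE + container_id

print(host_id(0))      # container root   -> 100000
print(host_id(65534))  # container nobody -> 165534
```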

Then my smb.conf looks somewhat like this (with a little Apple-flavored syntax sugar to turn on a few useful tweaks):

```
❯ cat /etc/samba/smb.conf
[global]
preserve case = yes
short preserve case = yes
default case = lower
case sensitive = no
vfs objects = catia fruit streams_xattr recycle
fruit:aapl = yes
fruit:model = Xserve
fruit:posix_rename = yes
fruit:veto_appledouble = no
fruit:wipe_intentionally_left_blank_rfork = yes
fruit:delete_empty_adfiles = yes
fruit:encoding = native
fruit:metadata = stream
fruit:zero_file_id = yes
fruit:nfs_aces = no

# recycle bin per user
recycle:repository = #recycle/%U
recycle:keeptree = yes
recycle:touch = yes
recycle:versions = yes
recycle:exclude = ._*,*.tmp,*.temp,*.o,*.obj,*.TMP,*.TEMP
recycle:exclude_dir = /#recycle,/tmp,/temp,/TMP,/TEMP
recycle:directory_mode = 0777

workgroup = WORKGROUP
server string = %h on LXC (Samba, Ubuntu)
log file = /var/log/samba/log.%m
max log size = 1000
logging = file
panic action = /usr/share/samba/panic-action %d
server role = standalone server
obey pam restrictions = yes
unix password sync = yes
passwd program = /usr/bin/passwd %u
passwd chat = *Enter\snew\s*\spassword:* %n\n *Retype\snew\s*\spassword:* %n\n *password\supdated\ssuccessfully* .
pam password change = yes
map to guest = bad user

[software]
browseable=yes
create mask = 0777
directory mask = 0777
force group = nogroup
force user = nobody
guest ok=yes
hide unreadable=no
invalid users=nobody,nobody
path = /mnt/shares/software
read list=nobody,nobody
valid users=admin,nobody
write list=admin
writeable=yes

[scratch]
path = /mnt/shares/scratch
create mask = 0777
directory mask = 0777
force group = nogroup
force user = nobody
guest ok = yes
public = yes
read only = no
writeable = yes
```
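To make the recycle-bin settings a bit more concrete, the sketch below approximates where a deleted file ends up. It is a rough Python model of the options as configured, not Samba's actual `vfs_recycle` code: it treats exclude patterns as case-sensitive (an assumption), ignores `recycle:exclude_dir` and `recycle:versions`, and the share path and user name in the example are placeholders.

```python
import fnmatch
import posixpath
from typing import Optional

# Values copied from the [global] section above.
REPOSITORY = "#recycle/%U"
EXCLUDE = "._*,*.tmp,*.temp,*.o,*.obj,*.TMP,*.TEMP".split(",")

def recycle_destination(share_root: str, rel_path: str, user: str) -> Optional[str]:
    """Approximate where vfs_recycle moves a deleted file.

    Returns None for files matching recycle:exclude, which are deleted outright.
    """
    name = posixpath.basename(rel_path)
    if any(fnmatch.fnmatchcase(name, pattern) for pattern in EXCLUDE):
        return None
    repository = REPOSITORY.replace("%U", user)  # %U = session user name
    # recycle:keeptree = yes keeps the original directory structure in the bin.
    return posixpath.join(share_root, repository, rel_path)

print(recycle_destination("/mnt/shares/scratch", "projects/notes.txt", "admin"))
# /mnt/shares/scratch/#recycle/admin/projects/notes.txt
print(recycle_destination("/mnt/shares/scratch", "build/main.o", "admin"))
# None
```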

Networking Performance

As I mentioned in my WP-UT5 review, I was able to get 4.4-4.6Gbps with that 5Gbps adapter plugged directly into the F4-424 Max. I’ve since tried running iperf3 from multiple 2.5Gbps machines to the F4-424 Max via one of my Sodola switches, and got past 8Gbps in aggregate. But properly testing 10Gbps without more native 10Gbps hardware is futile, so I didn’t invest much time in it.

Suffice it to say that the F4-424 Max should be able to saturate at least one of its 10Gbps interfaces, although as usual that will depend a lot on what you’re doing.
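For anyone repeating the multi-client test, `iperf3 -J` emits a JSON report per run, and for TCP tests the receiver-side total is under `end.sum_received.bits_per_second`. The sketch below sums that figure across several reports. It assumes all clients ran at the same time, which means one `iperf3 -s -p <port>` instance per client on the server, since each iperf3 server handles a single test at a time; the script and file names are placeholders.

```python
import json
import sys
from pathlib import Path

def received_gbps(report: dict) -> float:
    """Receiver-side TCP throughput from one `iperf3 -J` report, in Gbit/s."""
    return report["end"]["sum_received"]["bits_per_second"] / 1e9

def aggregate_gbps(paths: list[str]) -> float:
    """Sum throughput across reports from clients that ran concurrently."""
    return sum(received_gbps(json.loads(Path(p).read_text())) for p in paths)

if __name__ == "__main__":
    reports = sys.argv[1:]
    if reports:
        print(f"aggregate: {aggregate_gbps(reports):.2f} Gbit/s")
    else:
        print("usage: aggregate.py client1.json [client2.json ...]")
```

For example, each client could run `iperf3 -c <nas> -p <port> -t 30 -J > clientN.json` against its own server port, and the resulting files are then passed to the script.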

Conclusion

The F4-424 Max is a solid piece of hardware with loads of DIY-friendly expansion possibilities. Even though it is somewhat pricey and not marketed as an open platform, its dual 10GbE interfaces and ease of customization make it an appealing alternative to going full DIY, especially if you want something compact and quiet.

Additionally, I found the base TerraMaster OS more interesting than Synology’s approach–having Ubuntu as a base makes it much more approachable for tinkerers, even if for home/SOHO use you don’t need to worry about what’s under the hood.

Next Steps

My plan is to decommission nearly all my other machines and migrate their services to the F4-424 Max, devoting one of its 10GbE NICs to a VLAN trunk that will go into a central switch and preparing my network for future segmentation.

This will eventually entail having it host a virtual router to manage work, lab, and home segments, but I’m looking into other options since I don’t want to be overly dependent on a single box.

In the meantime I will be poking at the ZFS array with various things, and if I later happen to start editing video and find it lacking, then I’ll look into making the storage go faster… But for now, it’s plenty fast enough.

Update, December 2025: After a year of use, I can say that the F4-424 Max has been rock solid. However, I had neglected one important aspect: thermals. As it happens, the fan controller is semi-proprietary and not supported under Proxmox, so the fan was running at a fairly low speed most of the time, which led to higher-than-expected CPU temperatures under load and a lot of throttling. I finally found a way to deal with that, and it seems to be working well.