The launch of the Raspberry Pi 5 was a bit… underwhelming, to be honest.

Part of that is because the Raspberry Pi Foundation favors incremental improvement and a conservative approach to hardware design, which is understandable given their target markets.

But there is a wider scope of use cases for ARM boards these days, and I’m not sure the Raspberry Pi 5 is the best “next step” beyond the 4 for what I aim to do, so I decided to take a look at the Orange Pi 5+ instead.

Disclaimer: Orange Pi sent me a review unit (for which I thank them), and this article follows my Review Policy. Also, I purposely avoid doing any price comparisons with the Pi 5 (or the Pi 5 plus an equivalent set of add-ons) because I think the Orange Pi 5+ is a different beast altogether.

Hardware Specs

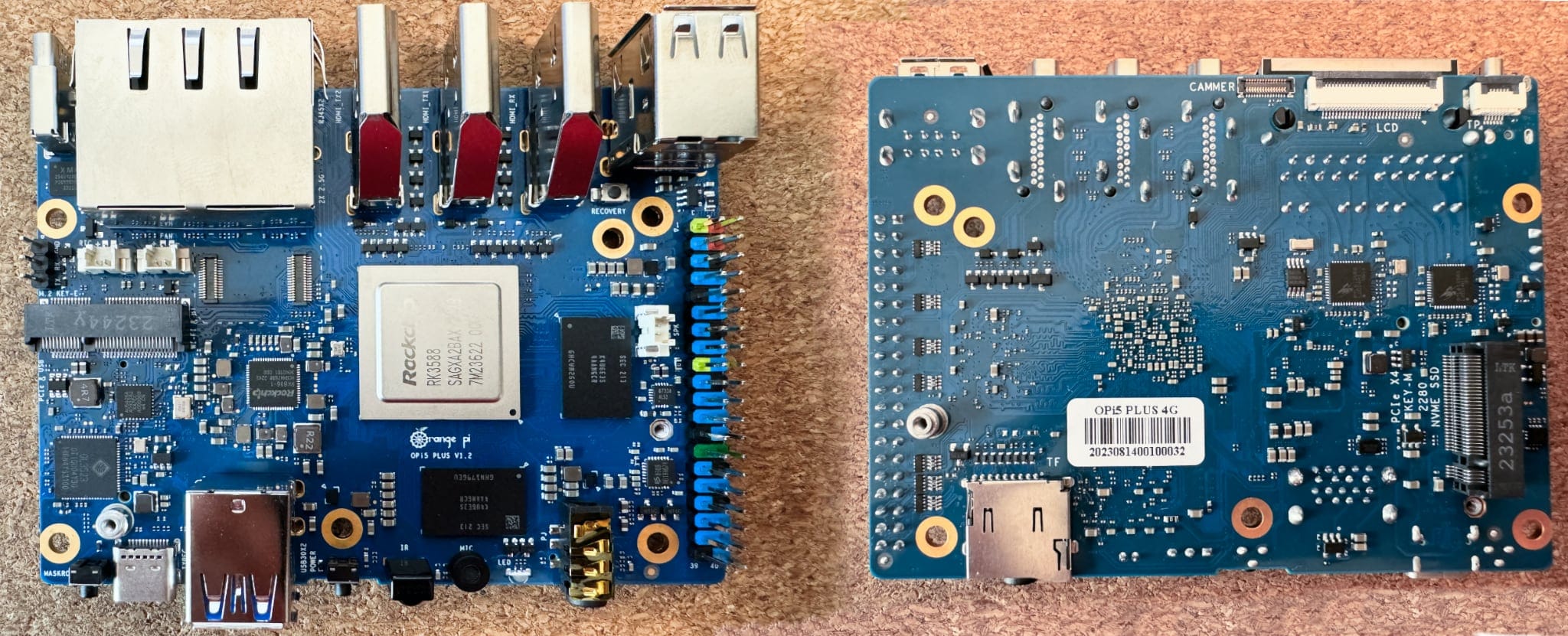

The Orange Pi 5+ is pretty much a textbook Rockchip design, with a few interesting tidbits:

- An RK3588 chipset (with 4x A76/2.4GHz cores and 4x A55/1.8GHz cores, plus a Mali-G610 MP4 GPU and an NPU)
- An M.2 2280 PCIe 3.0 x4 NVMe slot (leveraging the PCIe 3.0 lanes the RK3588 provides)
- An M.2 E Key 2230 slot (for WiFi/BT)
- Dual HDMI outputs (rated for 8K60)
- One HDMI input (rated for 4K60)
- DisplayPort 1.4 (8K30) via USB-C
- Dual 2.5GbE ports

…plus numerous USB ports, a typical set of single-board computer connections (SPI, MIPI and a 40-pin GPIO header), a microphone and an IR receiver.

This makes for a very interesting board with lots of standardized I/O, and I was very curious to see how it would fare as both a thin client and (more importantly) as a server.

Sadly, the board I got only has 4GB of RAM, which made it impossible to test some of the things I had planned (the upper SKU right now ships with 16GB of RAM, and there is going to be a 32GB version as well).

Nevertheless, I went ahead and added the following:

- A 1TB WD Black SN770 NVMe drive (PCIe Gen 4, a bit overkill but useful for testing since it removes any I/O bottlenecks)
- A Realtek RTL8852BE WiFi 6/Bluetooth 5.2 card (which I had lying around)

This made for a pretty beefy setup nonetheless, and I was able to test quite a few things since I got it early this month.

Cooling, Case and Power

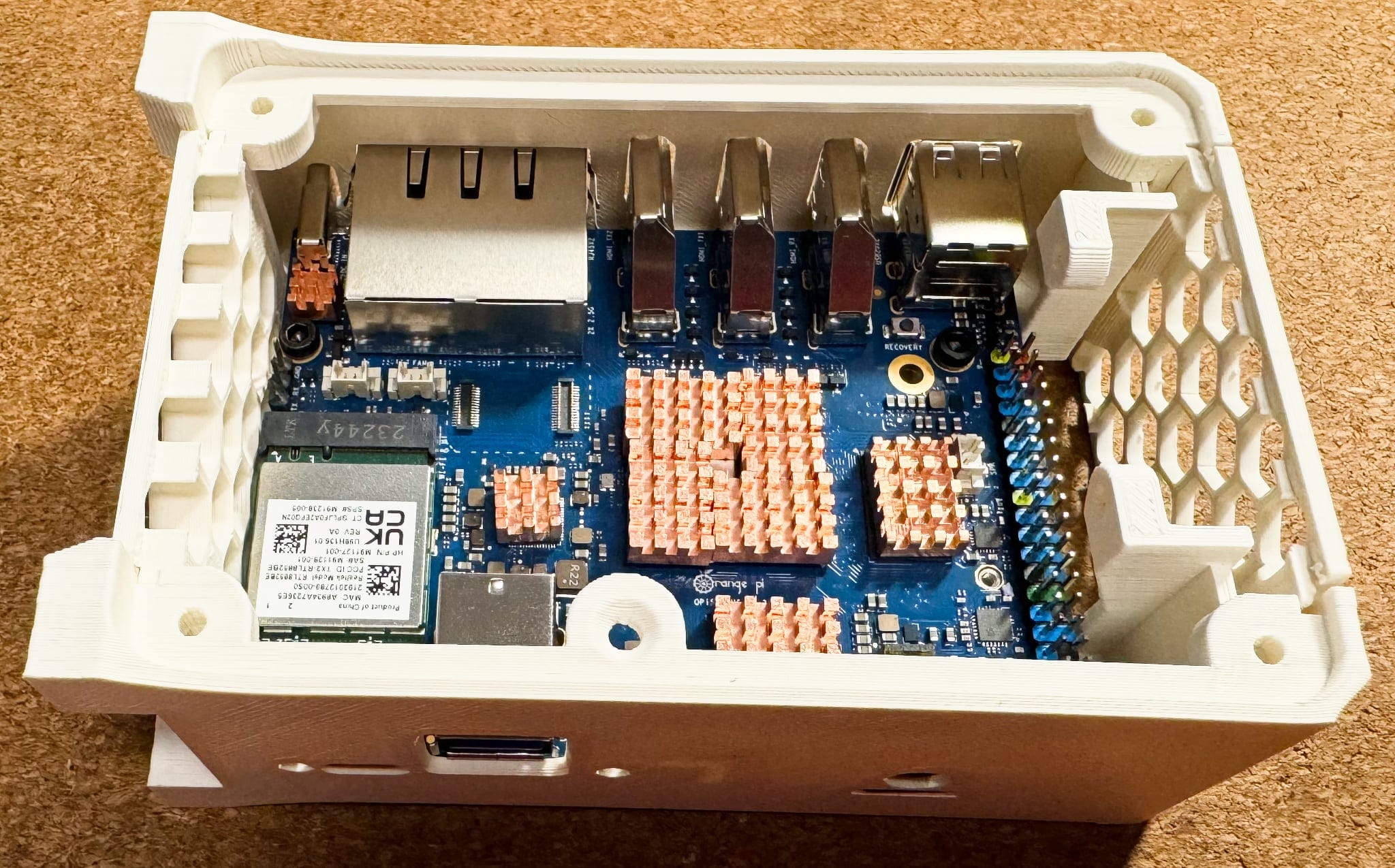

The sample unit came without a cooling solution, and even though there is an official heatsink (with a fan that uses the 2-pin PCB connector), my dislike of fan noise led me to hack together some purely passive cooling.

So I took some spare copper blocks I had gotten for other projects and used leftover thermal strips to attach them to the RK3588, the DRAM chips and a couple of other chips. This worked wonderfully and kept the CPU at a cool 39°C when idle.

While I waited for the NVMe drive to arrive, I decided to find and 3D print a suitable case for it.

With this case closed and stood up vertically, the CPU temperature on idle slowly rose to 45°C, which is still pretty good for a passive setup.

After I added the NVMe drive (with its own heatsink) and started testing things, temperatures rose a couple of degrees, but the passive setup still worked fine[1].

Power-wise, the board idles just under 5W, and I managed to get it up to 15W (measured from the wall socket) while running OnnxStream at full tilt (more on that below, but suffice it to say that this was with all cores running inference and a lot of SSD throughput).

This is roughly half the power envelope of a comparable mini-PC, although I wouldn’t place much stock in the raw values without considering the overall performance of the system and what it is being used for.

But it is nice to see how efficient the board is given the amount of horsepower it has.

Operating System Support

Software support is always the Achilles’ heel of Raspberry Pi alternatives, but Orange Pi has various OS images available for the 5 Plus. This includes their own flavors of Arch and Android, but also reasonably stock OpenWRT, Ubuntu and Debian images (which have build scripts available on GitHub).

I tried a couple of those while my NVMe was in transit, but since I was planning on using the board as a server I decided to go with Armbian in later tests, which is a well-supported distribution that has been around for a long time and has an active community (plus a reasonable assurance of long-term updates).

Armbian currently provides a Debian 12 bookworm image as a release candidate for the 5 Plus; however, since RK3588 support is still not fully baked for kernel 6.x, I had to install a version with kernel 5.10.

Not that I didn’t make an effort: the 6.7 edge version wouldn’t even boot, and trying to upgrade to the default 6.1 kernel also failed, forcing me to mount the SD card on another machine and restore the 5.10 kernel.

And, in general, right now Rockchip images are mostly kernel 5.x based, but given what teams like Collabora are doing I expect this to improve over time.

Use Cases

But before I settled on Armbian, I had time to test the board in a couple of different scenarios:

Tiny, Mighty Router

This was the first (and easiest) thing to test off an SD card, and it didn’t disappoint.

The stock OpenWRT image booted up fine, but since I only have gigabit fiber and gigabit LAN (and the only machine with 2.5GbE is in my server closet) I wasn’t really able to push the hardware to its limits in my office.

But again, I made an attempt and was able to get transfer rates of a little over 122MB/s (roughly 970Mbit/s) through it. The RK3588 is somewhat overkill for a router, so no surprises here. I’m positive it would fare just as well with 2.5GbE traffic, and I will likely have an opportunity to test that in the future, since I plan on swapping one of my managed switches for a PoE-enabled one with 2.5GbE ports.

Desktop Use

There’s not much to say here either–I wrote the Orange Pi Debian 12 XFCE image to an SD card and used it as a thin client for a few days, and it worked great–for starters, it was able to cope with both my monitors at decent resolutions.

I only tested via HDMI, but was able to get a usable desktop across both the LG Dual Up and my LG 34WK95U-W, although of course Linux still has issues with widely different display resolutions, etc. Nothing untoward here, and XFCE was snappy enough.

As a Remote Desktop Client (using Remmina), the Orange Pi 5+ beat the Raspberry Pi 4 figures I got last year, but not by much, simply because remote desktop performance is very server-dependent and I was using the same server for both tests.

But I did get a solid 60fps on the UFO test rendered on a remote desktop, and Remmina was about as snappy as the official Remote Desktop client on my Mac.

Besides that, running Firefox, VS Code and a few other apps was a good experience, although the 4GB of RAM felt a bit cramped (some of the images I tried used zram swap) and SD card performance impacted loading times.

Benchmarks

Once I got the NVMe, I got started on the main use case I had in mind for the board: understanding how it would fare as a server, and how it would compare to both the Raspberry Pi 4 and the u59 mini-PC I have been using as part of my homelab setup.

And that typically involves two kinds of things:

- Heavy I/O (which is where the NVMe comes in)
- Heavy CPU load (which is where the RK3588’s extra cores come in)

So I installed Armbian onto a fresh SD card, booted off it, and then ran armbian-config and asked it to install onto /dev/nvme0n1p1–it cloned the SD install (merging /boot and / onto that partition) and updated the SPI flash (mtdblock0) to enable booting from the NVMe, which worked flawlessly.

Disk I/O

I then used fio to test the NVMe drive, and got the following results:

```
# fio --filename=/tmp/file --size=5GB --direct=1 --rw=randrw --bs=64k --ioengine=libaio --iodepth=64 --runtime=120 --numjobs=4 --time_based --group_reporting --name=random-read-write --eta-newline=1

random-read-write: (groupid=0, jobs=4): err= 0: pid=620: Thu Jan 18 18:36:43 2024
  read: IOPS=13.4k, BW=835MiB/s (875MB/s)(97.8GiB/120015msec)
    slat (usec): min=7, max=15753, avg=56.50, stdev=61.17
    clat (usec): min=3, max=18314, avg=534.81, stdev=431.78
     lat (usec): min=99, max=19738, avg=592.00, stdev=443.42
    clat percentiles (usec):
     | 1.00th=[ 161], 5.00th=[ 217], 10.00th=[ 258], 20.00th=[ 318],
     | 30.00th=[ 363], 40.00th=[ 408], 50.00th=[ 453], 60.00th=[ 502],
     | 70.00th=[ 562], 80.00th=[ 660], 90.00th=[ 857], 95.00th=[ 1106],
     | 99.00th=[ 1844], 99.50th=[ 2474], 99.90th=[ 4752], 99.95th=[ 8586],
     | 99.99th=[13566]
   bw ( KiB/s): min=713365, max=1110144, per=100.00%, avg=855819.52, stdev=16270.31, samples=956
   iops        : min=11146, max=17346, avg=13371.99, stdev=254.23, samples=956
  write: IOPS=13.4k, BW=835MiB/s (875MB/s)(97.8GiB/120015msec); 0 zone resets
    slat (usec): min=7, max=15360, avg=62.20, stdev=58.87
    clat (usec): min=77, max=38943, avg=18504.56, stdev=3070.53
     lat (usec): min=174, max=39019, avg=18567.47, stdev=3069.47
    clat percentiles (usec):
     | 1.00th=[10683], 5.00th=[12387], 10.00th=[13566], 20.00th=[15795],
     | 30.00th=[17695], 40.00th=[18744], 50.00th=[19268], 60.00th=[19792],
     | 70.00th=[20317], 80.00th=[20841], 90.00th=[21627], 95.00th=[22152],
     | 99.00th=[23725], 99.50th=[24773], 99.90th=[28967], 99.95th=[30540],
     | 99.99th=[32900]
   bw ( KiB/s): min=732032, max=1106048, per=100.00%, avg=855790.94, stdev=14574.78, samples=956
   iops        : min=11438, max=17282, avg=13371.53, stdev=227.75, samples=956
  lat (usec)   : 4=0.01%, 10=0.01%, 100=0.01%, 250=4.54%, 500=25.33%
  lat (usec)   : 750=13.03%, 1000=3.80%
  lat (msec)   : 2=2.89%, 4=0.34%, 10=0.27%, 20=30.96%, 50=18.83%
  cpu          : usr=6.36%, sys=39.13%, ctx=1889178, majf=0, minf=101
  IO depths    : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0%
     submit    : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete  : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.1%, >=64=0.0%
     issued rwts: total=1602601,1602691,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency   : target=0, window=0, percentile=100.00%, depth=64

Run status group 0 (all jobs):
   READ: bw=835MiB/s (875MB/s), 835MiB/s-835MiB/s (875MB/s-875MB/s), io=97.8GiB (105GB), run=120015-120015msec
  WRITE: bw=835MiB/s (875MB/s), 835MiB/s-835MiB/s (875MB/s-875MB/s), io=97.8GiB (105GB), run=120015-120015msec
```

This is very good, especially when compared to the u59 mini-PC, which has relatively cheap SATA SSDs:

```
read: IOPS=2741, BW=171MiB/s (180MB/s)(20.1GiB/120014msec)
write: IOPS=2743, BW=171MiB/s (180MB/s)(20.1GiB/120014msec)
```

…and to the Raspberry Pi 4, which has a USB 3.0 SSD:

```
read: IOPS=2001, BW=125MiB/s (131MB/s)(14.7GiB/120015msec)
write: IOPS=2003, BW=125MiB/s (131MB/s)(14.7GiB/120015msec)
```

I found the u59 results weird, so I ran the same test on my Intel i7-6700 (which also has SATA SSDs) and got a wider sample:

| Machine | IOPS (64K random read) | IOPS (64K random write) |
|---|---|---|
| Intel i7-12700 (NVMe SSD, see note below) | 42926 | 42908 |
| Orange Pi 5+ (NVMe SSD) | 13372 | 13371 |
| Intel N5105, i.e. the u59 (SATA SSD) | 2741 | 2743 |
| Intel i7-6700 (SATA SSD 1) | 2280 | 2280 |
| Intel i7-6700 (SATA SSD 2) | 2328 | 2329 |
| Raspberry Pi 4 (USB 3.0 SSD) | 2001 | 2003 |
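Since every run used the same `--bs=64k` block size, IOPS and bandwidth are two views of the same number: bandwidth is roughly IOPS multiplied by the block size. The short Python sketch below applies that to the table as a cross-check of fio’s own summary lines. It assumes fio’s default base-2 units (so `64k` means 64 KiB); the machine labels and figures are copied from the table, and the function name is just illustrative.

```python
"""Cross-check fio's bandwidth figures against the 64K random read/write IOPS."""

# fio --bs=64k; fio's default unit base is 1024, so "64k" is assumed to mean 64 KiB
BLOCK_SIZE_KIB = 64

# (machine, read IOPS, write IOPS), copied from the table above
RESULTS = [
    ("Intel i7-12700 (NVMe SSD)", 42926, 42908),
    ("Orange Pi 5+ (NVMe SSD)", 13372, 13371),
    ("Intel N5105 / u59 (SATA SSD)", 2741, 2743),
    ("Intel i7-6700 (SATA SSD 1)", 2280, 2280),
    ("Intel i7-6700 (SATA SSD 2)", 2328, 2329),
    ("Raspberry Pi 4 (USB 3.0 SSD)", 2001, 2003),
]


def iops_to_mib_per_s(iops: float, block_kib: int = BLOCK_SIZE_KIB) -> float:
    """Bandwidth in MiB/s for a fixed block size: IOPS * KiB per I/O / 1024."""
    return iops * block_kib / 1024


if __name__ == "__main__":
    for machine, read_iops, write_iops in RESULTS:
        read_bw = iops_to_mib_per_s(read_iops)
        write_bw = iops_to_mib_per_s(write_iops)
        print(f"{machine:<30} read {read_bw:7.1f} MiB/s  write {write_bw:7.1f} MiB/s")
```

For the Orange Pi 5+ this works out to 13372 × 64 / 1024 = 835.75 MiB/s, in line with the `BW=835MiB/s` fio reported, and the u59 and Raspberry Pi 4 rows likewise land at about 171 and 125 MiB/s.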

I’m a bit curious as to how a Raspberry Pi 5 would fare in this kind of testing (although I’d have to get one and an NVMe HAT, which would need to be factored in cost-wise), but I strongly suspect the Orange Pi 5+ would still be faster.

Note: I got some pushback on HN that I should have used NVMe drives on the Intel machines for comparison. My point here is that a) I tested with what I have (and I only added the i7-6700 because I found the u59 results strange), and b) the u59 is within the general ballpark of the Orange Pi 5+ price-wise (as a second-hand i7-6700 would likely be, really), and mine shipped with SATA SSDs. I’m not trying to compare apples to oranges here, just trying to get a feel for how the Orange Pi 5+ compares to other machines I have lying around. Otherwise I’d go and compare it directly against my M2/M3 Macs or my 12th-gen i7, which would be a bit silly. Nevertheless, I also ran the test on the 12th-gen i7 (the i7-12700 in the table above).

Ollama CPU Performance

This is where things get interesting, because the RK3588 has two sets of 4 cores and a lot of nominal horsepower, but I don’t plan on using it for conventional workloads, and most benchmarks are artificial anyway.

So I decided to run a few things that are more in line with what I do on a daily basis, and that I know are either CPU-bound or heavily dependent on overall system performance.

As it happens, Ollama is a great benchmark for what I have in mind:

- It is a “modern” real-world workload
- It is very compute-intensive (both in terms of math operations and general data processing)
- It does not require I/O (where the NVMe would give the Orange Pi 5+ an unfair advantage)
- Tokens per second (averaged over several runs) is largely independent of the kind of question you ask (although it is very much model-dependent)

However, I had to work around the memory constraints of the 4GB board again.

Since none of the “normal” models would fit, I had to use smaller models to get comparable results (the Raspberry Pi 4 I’m using has 8GB of RAM, which is enough to run quite a few more).

tinyllama is a good baseline, but I also tried dolphin-phi because it is closer to the kind of models I work with.

I again had to resort to my i7-6700@3.4GHz for comparison because the u59’s N5105 CPU does not support all the required AVX/AVX2 instructions, but the results were pretty interesting nonetheless.

For each model, I ran the following prompt ten times[2]:

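```
# --verbose stats go to stderr; "^eval rate:" skips the "prompt eval rate:" line that a bare "eval rate:" also matches
for run in {1..10}; do echo "Why is the sky blue?" | ollama run tinyllama --verbose 2>&1 >/dev/null | grep "^eval rate:"; done
```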
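Since `ollama run --verbose` reports both a prompt evaluation rate and a generation (`eval rate:`) rate for each run, averaging the runs by eye is easy to get wrong. Below is a minimal Python sketch of that averaging step. It assumes the stats arrive as lines shaped like `eval rate:   11.30 tokens/s` (for instance, the output of the loop above saved to a file), and the sample values in it are made up for illustration.

```python
"""Average the generation speed reported by `ollama run --verbose` across runs."""

import re
import statistics
from typing import Iterable, Tuple

# Matches "eval rate:   11.30 tokens/s" but not "prompt eval rate: ..." (assumed format)
EVAL_RATE = re.compile(r"^eval rate:\s+([0-9.]+)\s+tokens/s")


def mean_eval_rate(lines: Iterable[str]) -> Tuple[float, int]:
    """Return (mean tokens/s, number of runs) from verbose Ollama stats lines."""
    rates = []
    for line in lines:
        match = EVAL_RATE.match(line.strip())
        if match:  # prompt eval lines and anything else are skipped
            rates.append(float(match.group(1)))
    if not rates:
        raise ValueError("no 'eval rate:' lines found")
    return statistics.mean(rates), len(rates)


if __name__ == "__main__":
    # Illustrative input only; these are not measured values
    sample = [
        "prompt eval rate:     42.00 tokens/s",
        "eval rate:            11.00 tokens/s",
        "prompt eval rate:     40.00 tokens/s",
        "eval rate:            12.00 tokens/s",
    ]
    mean, runs = mean_eval_rate(sample)
    print(f"{runs} runs, mean eval rate {mean:.2f} tokens/s")
```

With the sample input it prints `2 runs, mean eval rate 11.50 tokens/s`; anchoring the regular expression at the start of the line is what keeps the (much faster) prompt evaluation figures out of the average.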

Looking solely at the eval rate (which is what matters for gauging the LLM performance speed-wise), I got these figures:

| Machine | Model | Eval rate (tokens/s) |
|---|---|---|
| Intel i7-6700 | dolphin-phi | 7.57 |
| Intel i7-6700 | tinyllama | 16.61 |
| Orange Pi 5+ | dolphin-phi | 4.65 |
| Orange Pi 5+ | tinyllama | 11.3 |
| Raspberry Pi 4 | dolphin-phi | 1.51 |
| Raspberry Pi 4 | tinyllama | 3.77 |

Keeping in mind that the i7 runs at nearly six times the wattage, this is pretty good[3], and the key point here is that the Orange Pi 5+ generated the output at a usable pace (slower than what you’d get online from ChatGPT, but still close to “reading speed”), whereas the Raspberry Pi 4 was too slow to be usable.

This also means I could probably (if I could find a suitable model that fit into 4GB RAM) use the Orange Pi 5+ as a back-end for a “smart speaker” for my home automation setup (and I will probably try that in the future).

OnnxStream Combined CPU and I/O Performance

Next up I decided to try OnnxStream, which made the rounds a while back for allowing people to run Stable Diffusion on a Raspberry Pi… Zero.

As it turns out, it is a very interesting project, not just because it uses ONNX but also because it can run inference pretty much directly from storage (which is feasible for Stable Diffusion because it’s essentially scanning through the model “once” rather than doing multiple passes over various layers, which is what LLMs end up doing).

This is closer to the kind of workload I’m interested in, and even though it requires a lot of I/O (in which the NVMe does give the Orange Pi 5+ an unfair advantage), it is still a good test of the CPU’s ability to run inference.

So I built OnnxStream from source on my test machines (except the u59, because XNNPACK really likes advanced instruction sets as well), downloaded the Stable Diffusion XL Turbo model, and ran the following command:

```
# time OnnxStream/src/build/sd --xl --turbo --rpi --models-path stable-diffusion-xl-turbo-1.0-onnxstream --steps 1 --seed 42
----------------[start]------------------
positive_prompt: a photo of an astronaut riding a horse on mars
SDXL turbo doesn't support negative_prompts
output_png_path: ./result.png
steps: 1
seed: 42
...
```

You’ll notice that I only ran one step and that I used the --rpi flag, which turns off fp16 arithmetic and splits attention to make it run faster on the Raspberry Pi.

I also ran iotop to see how much I/O was going on, and during the initial stages of each run I saw a lot of activity (which is to be expected, since the model is being loaded into memory and the first inference steps are being run).

As I expected, the Orange Pi 5+ was able to sustain a throughput of 200MB/s when loading slices of the model, which is pretty good (the Pi 4 was only able to do about 60MB/s), but the overall run time was still partly CPU-bound, making it a more interesting test.

Since this took a good while to run[4], I only did it three times and averaged the results:

| Machine | Average time (hh:mm:ss) |
|---|---|
| Intel i7-6700 | 00:07:05 |
| Orange Pi 5+ | 00:14:08 |
| Raspberry Pi 4 | 00:46:53 |

Overall, the Orange Pi 5+ was three times faster than the Raspberry Pi 4 and half as fast as the Intel i7 under the same conditions, which confirms that with a little more RAM (and likely a little more patience and optimization) the Orange Pi 5+ could run other kinds of models at a decent clip and with a great power envelope.
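Converting the averages to seconds (425 s, 848 s and 2813 s) makes those ratios explicit. A minimal Python sketch, with the timings copied from the table and function names that are only illustrative:

```python
"""Turn the average OnnxStream (SDXL Turbo, 1 step) run times into speed ratios."""

# Average of three runs each, copied from the table above (hh:mm:ss)
TIMINGS = {
    "Intel i7-6700": "00:07:05",
    "Orange Pi 5+": "00:14:08",
    "Raspberry Pi 4": "00:46:53",
}


def to_seconds(hms: str) -> int:
    """Convert an 'hh:mm:ss' string into seconds."""
    hours, minutes, seconds = (int(part) for part in hms.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def relative_speed(faster: str, slower: str) -> float:
    """How many times faster `faster` is than `slower`, as a ratio of run times."""
    return to_seconds(TIMINGS[slower]) / to_seconds(TIMINGS[faster])


if __name__ == "__main__":
    for faster, slower in [("Orange Pi 5+", "Raspberry Pi 4"), ("Intel i7-6700", "Orange Pi 5+")]:
        print(f"{faster} vs {slower}: {relative_speed(faster, slower):.2f}x")
```

This prints 3.32x for the Orange Pi 5+ over the Raspberry Pi 4 and 2.00x for the i7 over the Orange Pi 5+, so “three times faster” is, if anything, slightly conservative.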

Jellyfin

After all that, I decided to relocate the board to my server closet for a weekend and try something a bit more mundane–running a media server.

This is something that is of interest to most folk looking at ARM single-board computers for home use, so I installed Docker and set up a test Jellyfin server using my NAS as a backend.

Even though Jellyfin does not have any support for hardware transcoding on ARM, the overall experience was pretty good, at least with two simultaneous users: Swiftfin and the Android TV client both worked snappily, and I was able to stream 4K content directly to both without any issues (again, this board is a bit overkill as far as throughput is concerned).

Forcing transcoding on the server side was a bit more taxing, but I was able to get HEVC to H.264 1080p software transcoding going without any stuttering, and the 8 CPU cores were more than enough to handle the load–but I didn’t try more than two simultaneous streams, and I suspect that would be the limit.

Parenthesis: GPU and NPU support

At this point it bears noting that even though the RK3588 has a Mali-G610 MP4 GPU and a Neural Processing Unit, neither of them has the kind of support that, say, Intel QuickSync has for streaming, and finding anything with support for the NPU was a bit of a challenge, which was another reason for me to take a break from normal benchmarking and try something more mundane.

I did manage to get an OpenCV YOLO demo going with a USB webcam, but ARM software is still thin on the ground, and so is the expertise to improve it. In particular, this thread taught me a lot about the NPU’s limitations: it isn’t as “wide” as a GPU for the kind of mainstream AI work I’m interested in, so there’s really no way to use it for LLMs or other large models.

But I’m positive I can get whisper.cpp running on it, which I’ll try via useful-transformers once I have a little more time.

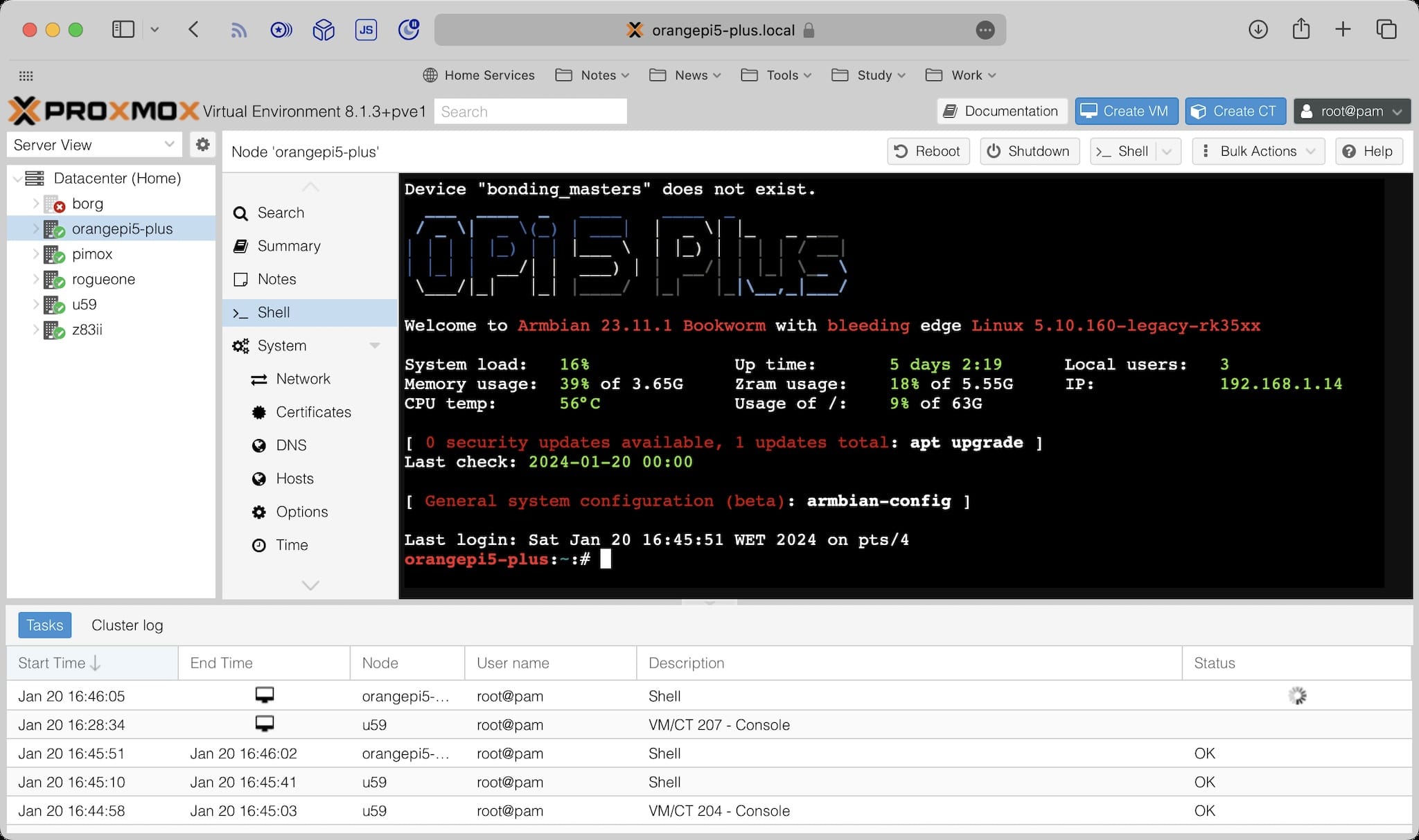

Running Proxmox on the Orange Pi 5+

Now that I had a good performance baseline, I could move on to the meat of things–testing Proxmox for ARM. I reset the SPI flash, booted off the Armbian SD card, and re-partitioned the NVMe like this:

```
Disk /dev/nvme0n1: 931.51 GiB, 1000204886016 bytes, 1953525168 sectors
Disk model: WD_BLACK SN770 1TB
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: dos
Disk identifier: 0x1fbc6f29

Device         Boot     Start        End    Sectors   Size Id Type
/dev/nvme0n1p1           2048  134219775  134217728    64G 83 Linux
/dev/nvme0n1p2      134219776  142032895    7813120   3.7G 82 Linux swap / Solaris
/dev/nvme0n1p3      142032896 1953525167 1811492272 863.8G 8e Linux LVM
```

Again, to compensate for the limited 4GB of RAM on my board, I enabled swap on the extra partition I created and set up LVM on the rest of the disk.
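The fdisk listing is easy to sanity-check before handing the disk over to LVM: each partition’s last sector should be its start plus its sector count minus one, the partitions here are contiguous, and sizes follow from the 512-byte sectors (fdisk reports binary units, so `64G` means 64 GiB). The Python sketch below re-derives those sizes from the numbers in the listing; the helper names are illustrative, and the contiguity check is specific to this layout rather than a general partitioning rule.

```python
"""Sanity-check the NVMe partition layout from the fdisk listing above."""

SECTOR_BYTES = 512
SECTORS_PER_GIB = 2**30 // SECTOR_BYTES  # 2,097,152 sectors of 512 bytes
DISK_SECTORS = 1953525168

# (device, start, end, sectors) as printed by fdisk
PARTITIONS = [
    ("/dev/nvme0n1p1", 2048, 134219775, 134217728),
    ("/dev/nvme0n1p2", 134219776, 142032895, 7813120),
    ("/dev/nvme0n1p3", 142032896, 1953525167, 1811492272),
]


def check_layout(partitions, disk_sectors):
    """Return {device: size in GiB}; raise if the (contiguous) layout is inconsistent."""
    sizes = {}
    previous_end = None
    for device, start, end, sectors in partitions:
        if end != start + sectors - 1:
            raise ValueError(f"{device}: end sector != start + sectors - 1")
        if previous_end is not None and start != previous_end + 1:
            raise ValueError(f"{device}: not contiguous with the previous partition")
        if end >= disk_sectors:
            raise ValueError(f"{device}: runs past the end of the disk")
        sizes[device] = sectors / SECTORS_PER_GIB
        previous_end = end
    return sizes


if __name__ == "__main__":
    print(f"disk: {DISK_SECTORS / SECTORS_PER_GIB:.2f} GiB")
    for device, gib in check_layout(PARTITIONS, DISK_SECTORS).items():
        print(f"{device}: {gib:.1f} GiB")
```

Running it reproduces fdisk’s figures (931.51 GiB for the disk, then 64.0, 3.7 and 863.8 GiB for the three partitions), and shows that the LVM partition ends on the very last sector of the drive.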

From then on, installing Proxmox was just a matter of following the wiki instructions, making sure the LVM arrangement was correct (you need to create the pve volume group, etc.) and joining it to my cluster.

All the basics worked (other than device management, which I’ll come to in a second, it worked just as well as my other servers), and besides cloning some of the ARM containers I already had on my Pi, I was able to set up a few new containers and VMs without any issues, although, again, the 4GB of RAM was a little limiting when using VMs, so I mostly stuck to LXC containers.

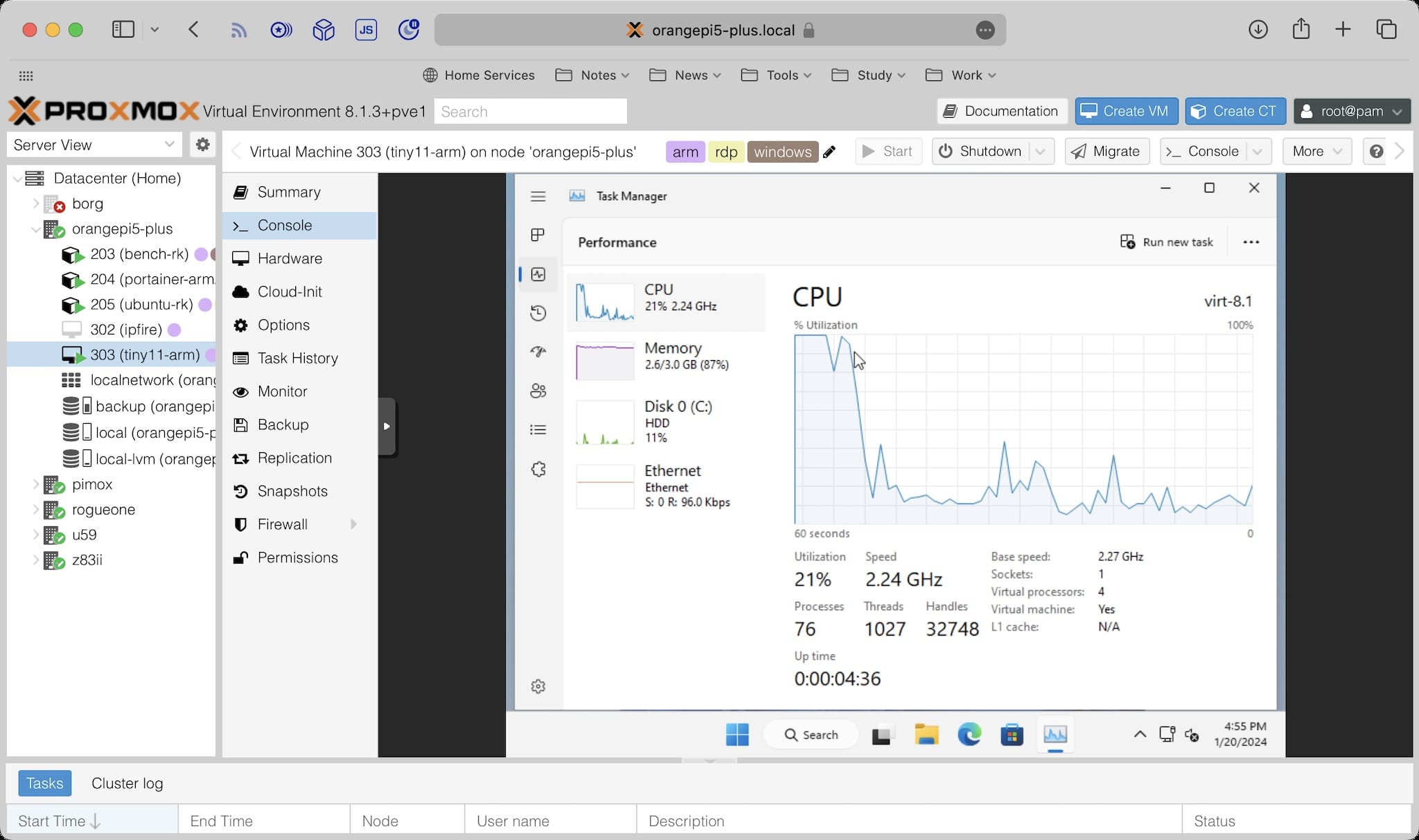

Running Windows 11 ARM on the RK3588

One thing that I absolutely wanted to test was getting Windows 11 ARM to run, since the Raspberry Pi 4 had some trouble with it. A fresh install on the RK3588 was a lot smoother (and faster), although I did have to limit the VM to 3GB of RAM.

An interesting quirk I noticed with the Orange Pi 5+ is that the emulated real-time clock was off by a couple of centuries[5], which caused no end of trouble: CPU usage went through the roof as Windows services tripped over themselves to check certificates and other things, and the VM was very sluggish.

But once I set it to the correct date and time, everything worked fine and was very usable even when logging in via Remote Desktop. And, of course, the Intel emulation worked fine–I installed XMind, and it held its own even when bumping the remote window to full screen on my large displays.

Device Passthrough

Thanks to the magic of Proxmox, migrating the Windows VM to my Raspberry Pi 4 and back was an automatic process, as was cloning my Portainer setup from the Pi and running it on the Orange Pi 5+.

I couldn’t get PCI passthrough to work, though–which makes sense since Proxmox isn’t really ARM-aware right now, and I suspect that both the shipped QEMU version and the 5.10 kernel don’t have the necessary bits to make VFIO work (I’ve lost track of the kernel work for that sometime last year, and haven’t been able to find good updates).

So passing through network cards to a virtual router like IPFire is out of the question for now, although I suspect I can finagle a way to do it with the Proxmox SDN settings (or perhaps a USB adapter).

Conclusion

With an NVMe drive, the Orange Pi 5+ is a little beast of a machine, and extremely good bang for the watt–besides my very specific interests, it is a very capable board that can be used for a lot of things, and I think a 16GB unit (let alone the new 32GB version) would be more than good enough to be a quiet, low-consumption (yet pretty powerful) homelab server.

And that applies even without Proxmox–all you’d need is Portainer and a few containers, although of course you’d have to be mindful that ARM support for some things is still a bit spotty.

Given that I have been running a few VMs and a bunch of development containers on a Raspberry Pi 4 for a while now, I’m certain the Orange Pi 5+ is able to handle the same workload with margin to spare (except for the 4GB RAM, of course). And it is a lot more flexible in terms of I/O and display options, although I do think a SATA port (or two) would be a nice addition.

As a thin client, it is also pretty good–just as capable as the u59 was, but with less power consumption and an equivalent set of I/O options with full-size display connectors (which is a plus for me).

And speaking of the u59, I was a bit surprised that I wasn’t able to run Ollama on it (I hadn’t realized its N5105 lacked the AVX/AVX2 support Ollama needs), and it makes me wonder how much better Intel’s new N100 CPU would be, and how the scales would tip versus ARM CPUs.

Regardless, I expect to migrate my home automation setup to the Orange Pi 5+ in the future (once I’ve tried using it as a voice assistant and played with the HDMI input, which will require a side quest into setting up Android), and I’m looking forward to seeing whether kernel 6.x support improves things further.

It would be so nice to have more RAM, though.

1. As an additional data point, I just moved it to my server closet after a few weeks of testing, and right now lm-sensors/armbianmonitor reports 52°C with low load, which is OK. I may add a fan now that it is in a confined space far away from my desk, but I’ll wait and see how it fares.
2. If you’re curious, typical output goes something like this: “The sky appears blue due to a phenomenon called Rayleigh scattering. When sunlight enters Earth’s atmosphere, it interacts with molecules and particles in the air, including nitrogen and oxygen atoms. These particles scatter light in all directions. […]”
3. Of course, the realm of the CPU-bound is relatively slow. My Mac M2 Pro runs dolphin-phi at 69.35 tokens/s…
4. In retrospect, I should have stuck to Stable Diffusion 1.5 and tried more samples. But I was curious about how the XL model would fare, since that’s what I’ve been using for my own artwork.
5. I’m not sure if this is a QEMU issue or just a buggy remapping of the Orange Pi 5+ clock, but since all my machines run NTP and the Pi doesn’t have a clock at all, I suspect it may be the latter.