As I predicted in my last post, I ended up spending a few hours messing about with Stable Diffusion. Since I’m more interested in reading than in futzing about with computers, though, it took me a while to get going.

In the end, it was the potential for satire that made me dive in. I decided I had to have a go at it after seeing this in r/StableDiffusion:

Now, I do not have any machines with discrete GPUs, so I have been giving most trendy ML techniques a pass over the past few years. I had plenty to do with that in real life™, and with the GPU shortages and all, I have not (yet) gotten myself a beefy Ryzen desktop with a CUDA-capable GPU.

But, serendipitously, Hacker News was aflame with a discussion about an Apple Silicon version, which I was able to get going on my M1 Pro in around 10 minutes (including downloading the freely available 4GB model).

I’m actually now using lstein/stable-diffusion, which has been very active over the past two days and has papered over most of the missing bits in PyTorch and GPU support, except for upscaling. That means I’m somewhat limited to 512px images, which render in under a minute, but that is plenty for me¹.

I’m not going to wax lyrical about the impact of AI-driven illustration on art, or how it’s going to put stock image providers out of business. Both of those are quite likely to pan out in unexpected ways, so I’ll just say that having Stable Diffusion freely available and driven by Open Source tooling is definitely going to impact companies like OpenAI as far as the mass market is concerned. Making money out of their current tech has just become really hard, and they are going to need to step up and provide much more sophisticated tooling.

My take on this is that it’s not going to replace artists: Stable Diffusion is closer to being “a bicycle for art” (i.e., an enabler and accelerator) than anything else, and for those of us with less artistic leanings it’s just going to be a lot of fun².

Prompt Engineering

Since Stable Diffusion is not Dall-E 2, a lot of the staple prompt engineering that is making the rounds is a bit moot. However, you can go a long way if you stick to a theme and fine-tune it, so for most of my experiments I went with this:

portrait painting of <thing or person>, sharp focus, award-winning, trending on artstation, masterpiece, highly detailed, intricate. art by josan gonzales and moebius and deathburger
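The template is really just a fixed style suffix with a single slot for the subject. Purely as an illustration, here is that idea as a small Python helper; `build_prompt` and `STYLE_SUFFIX` are hypothetical names of my own, not part of lstein/stable-diffusion or any other tool.

```python
# Hypothetical helper (not from any Stable Diffusion tool): fills the
# subject slot of the portrait prompt template used in this post.
STYLE_SUFFIX = (
    "sharp focus, award-winning, trending on artstation, masterpiece, "
    "highly detailed, intricate. art by josan gonzales and moebius and deathburger"
)


def build_prompt(subject: str) -> str:
    """Return the full prompt for a given thing or person."""
    subject = subject.strip()
    if not subject:
        raise ValueError("subject must not be empty")
    return f"portrait painting of {subject}, {STYLE_SUFFIX}"


if __name__ == "__main__":
    # Subjects taken from the examples in this post.
    for subject in ["a Corgi", "Julius Caesar", "Master Chief"]:
        print(build_prompt(subject))
```

Keeping the suffix constant and only swapping the subject is what makes the results comparable across very different subjects.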

This is what you’d get if you asked for a Corgi:

Portraiture

But things quickly go ballistic from there:

Sadly, the current version I’m using ignores seed values, so I can’t reproduce these at will (yet).

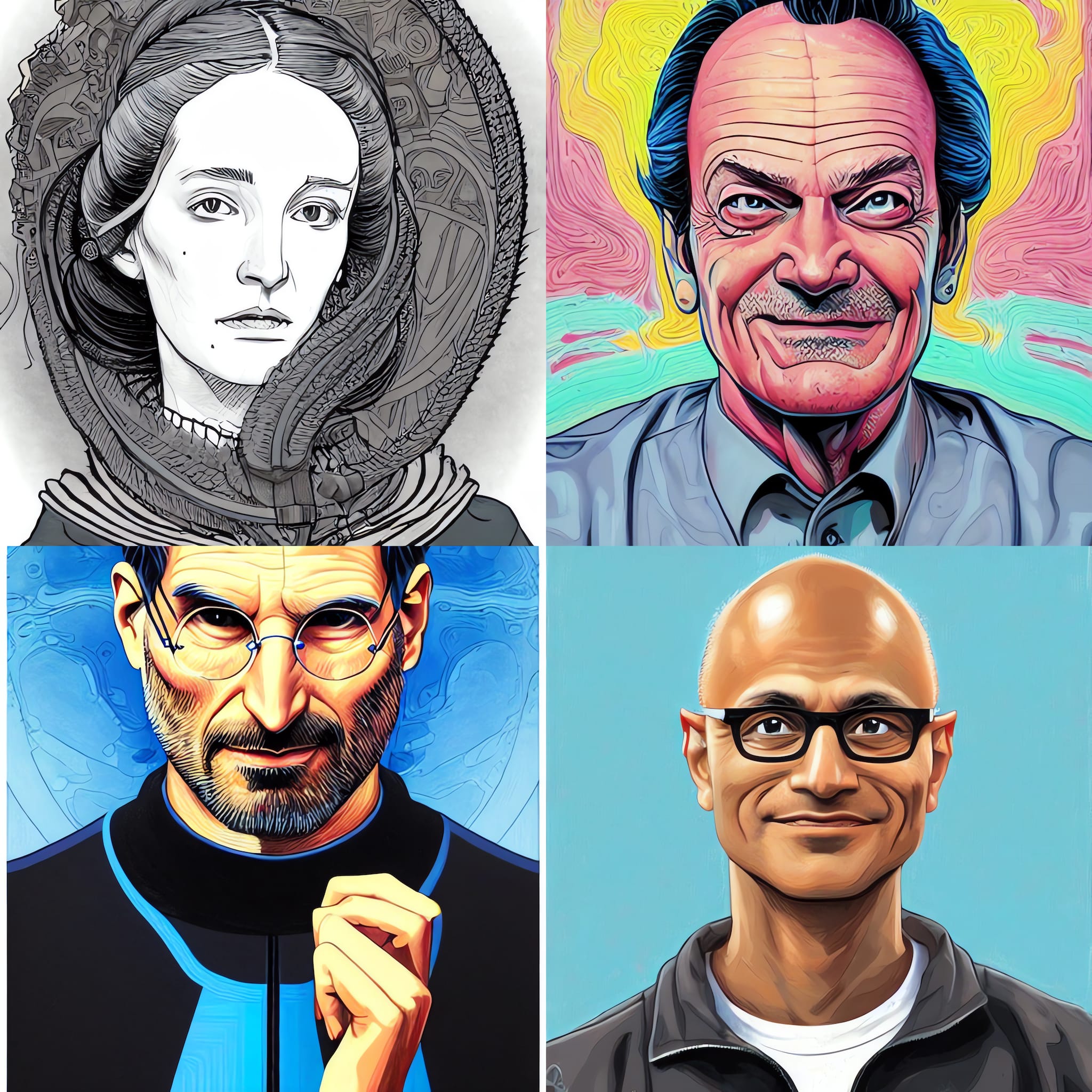
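For context on why ignoring the seed matters: Stable Diffusion starts each image from random noise in its latent space, and the seed is what fixes that noise, so the same seed, prompt, and settings should, in principle, give the same image. The toy sketch below illustrates only that principle using Python’s standard `random` module; `initial_latent` is a hypothetical stand-in, and the 4×64×64 shape assumes the usual Stable Diffusion v1 latent for a 512px image (downsampled by a factor of 8).

```python
import random

# Assumed latent shape for a 512x512 image in Stable Diffusion v1:
# 4 channels, each 512 / 8 = 64 cells on a side.
CHANNELS, HEIGHT, WIDTH = 4, 512 // 8, 512 // 8


def initial_latent(seed: int) -> list[float]:
    """Toy stand-in for the starting noise: a flat list of Gaussian samples.

    Using a dedicated Random instance means the output depends only on the
    seed, not on any other use of the global random module.
    """
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(CHANNELS * HEIGHT * WIDTH)]


if __name__ == "__main__":
    print(initial_latent(42) == initial_latent(42))  # True: same seed, same noise
    print(initial_latent(42) == initial_latent(43))  # False: different seed
```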

The Pantheon of Tech

The really amazing thing, though, is when you realize that inside those 4GB of model weights there is enough data to synthesize these:

This is the bit where Arthur C. Clarke’s old adage about sufficiently advanced technology being indistinguishable from magic applies, because I just typed their names into the prompt placeholder.

Artists

It gets a lot right for artists in general, in tone if not in looks:

Almost Fictional

And it just comes up with the most delightful details if you pick names of people who either don’t exist or have no photographic record:

The craggy look of Caesar, Paul’s stillsuit, Master Chief’s armor (in a “portrait”, using exactly the same prompt as the others) and Artemis’ countenance… If you think of this as a sort of dip into our collective unconscious, the results are nothing short of amazing.

It was even able to cope with a completely vague subject like the cast of Friends:

Our Collective Unconscious

So I decided to take this a bit further and dip into the cesspool of our collective unconscious that is Twitter, and generate random things based on what crossed my timeline:

Image Generation

The best thing that I’ve yet to explore fully, though, is using Stable Diffusion to generate new images based on sketches or existing pictures.
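Roughly speaking, image-to-image generation encodes the source picture, adds a partial dose of noise, and then denoises it under the guidance of the prompt. In the original CompVis `img2img.py` script, a `strength` setting between 0 and 1 controls how far this goes: only `int(strength * ddim_steps)` denoising steps are run, so low values stay close to the source and high values drift further away. The helper below (`steps_run` is a hypothetical name) is a sketch of that arithmetic only, not code from lstein’s fork.

```python
def steps_run(strength: float, ddim_steps: int = 50) -> int:
    """Number of denoising steps img2img actually performs.

    Mirrors t_enc = int(strength * ddim_steps) from the CompVis img2img
    script: the encoded source image is noised up to step t_enc and then
    denoised back from there.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be in [0.0, 1.0]")
    return int(strength * ddim_steps)


if __name__ == "__main__":
    for strength in (0.25, 0.5, 0.75, 1.0):
        print(strength, steps_run(strength))
```

At the script’s default of 0.75 with 50 steps, only 37 steps run, which is why the output keeps the composition of the source image while changing its details.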

For a lark, I turned some of my friends’ avatars into weathered fishermen by using them as base images:

But it is much more impressive if you look at the source images alongside. For example, I took my very first Dall-E 2 output and used it as an input:

Given that people are already incorporating Stable Diffusion into drawing tools, and given the rate at which things have progressed over the summer, I can’t wait to see what is going to come out of this in a year or so.

I expect a lot of video, a lot more sophistication around prompt design, a few more completely free models and, of course, a veritable plague of AI-generated reaction GIFs, but I think it will all be alright in the end.

Well, eventually. A lot of immature people (and policy makers, official or otherwise) are going to be freaking out…

¹ You can also run the Vulkan version of Real-ESRGAN and upscale images separately, which has been working fine for me.

² The Muggles, of course, will just appreciate the better voice-driven Instagram filters it will inevitably spawn. Or something.