Progress over the past two weeks has been slow, but I’ve gradually been feeling well enough to get back to exercising and taking a stab at my hobbies again (work is another matter: I’ve been ramping up, but the Thanksgiving holiday has slowed everything down).

And one of the things that has been on my mind is the recent release of Stable Diffusion 2.0, partly because it intersects with ML (which I wish I was doing more of, not necessarily of that kind), hardware (I keep wishing I had a beefy desktop machine with a hardware GPU, although my MacBook Pro and my iPad Pro can both run Stable Diffusion models just fine), and fun (because I had so much fun with the early version).

So I decided to do the kind of thing I usually do: I added another angle to this, which was automated deployment, and built an Azure template to deploy Stable Diffusion on my own GPU instance.

Alternatives

Like everyone else, I’ve been using a mix of free Google Colab workbooks (which have an annoying setup time, even though most of them will cache data on Google Drive to speed up repeated invocations), my MacBook Pro (where I’ve been using either imaginAIry for Stable Diffusion 2.0 or DiffusionBee for older versions), and even my iPad Pro with Draw Things, which works surprisingly well, although it does burn down the battery.

imaginAIry, in particular, appeals to me because it makes no attempt to hide the internals and exposes a nice Python API, so it’s my favorite for reproducible results.

But I also wanted to get a feel for how people were building services around this and needed an “easy” thing to get back up to speed, so I decided to host my own [1].

Picking a VM

The thing is, GPU-enabled instances don’t come cheap. I already went down this track back in 2018 when I fiddled with NVIDIA GeForce NOW, and the only thing I didn’t do at the time was play around with spot pricing.

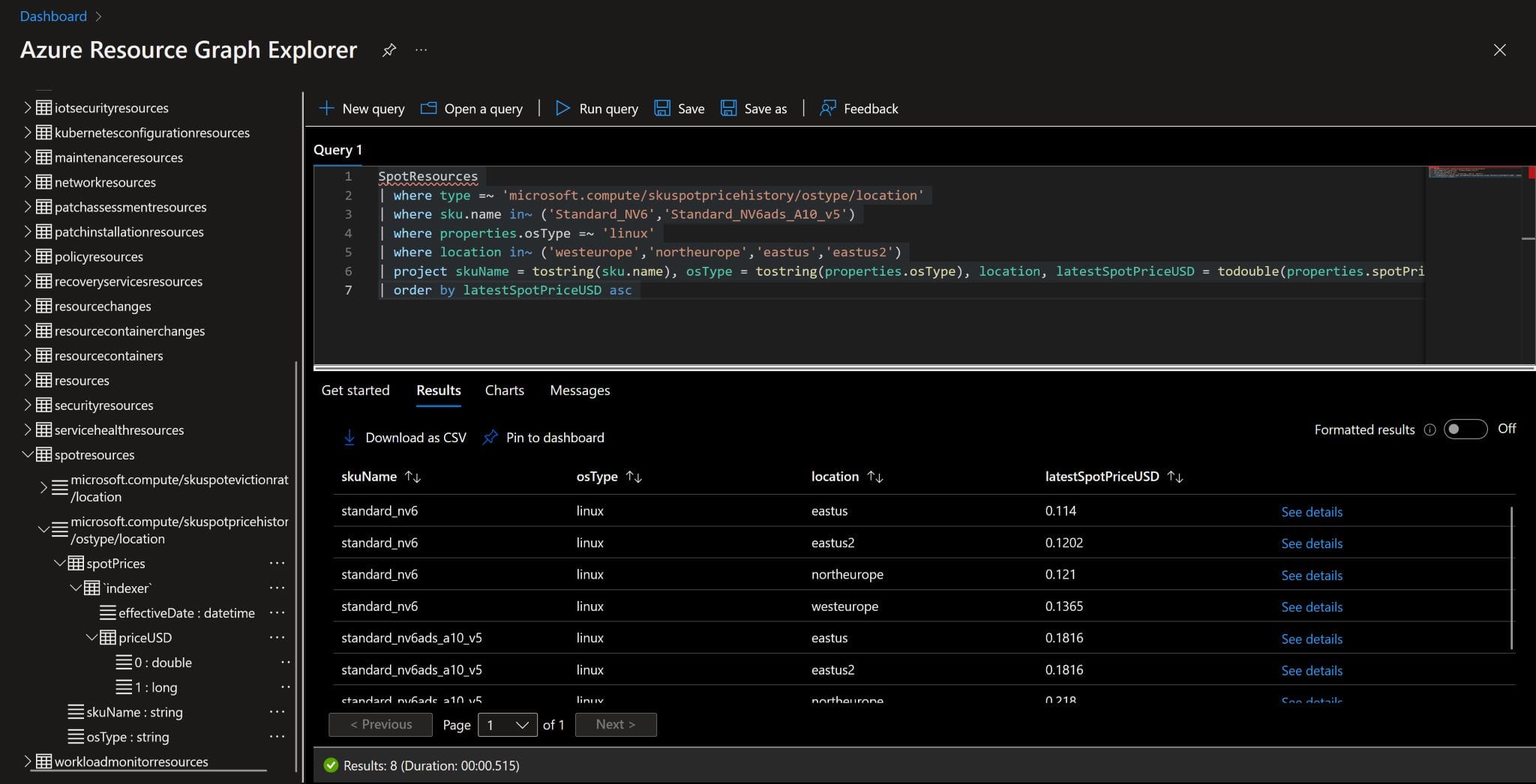

I have been using spot instances for my Kubernetes clusters, so I started by checking out spot pricing for a couple of interesting SKUs:

US $0.114/hour is really not bad considering that the retail price for a Standard_NV6 (which is exactly the same instance type I used in 2018) is, as of this writing, exactly ten times that amount, so that seemed OK. The additional surcharges for a standard SSD volume (a tiny one) and networking are residual, so I can certainly fit this into my personal budget for only a few hours of fooling around each month.

And getting the machine preempted would not be a big deal (you can also look up eviction rates, and they’re currently at 0-5%).
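The pricing arithmetic above can be sketched in a few lines. This is only an illustration: the spot rate and the "ten times" retail ratio are the figures quoted in the text, while the ten hours per month is a made-up assumption, and disk and networking surcharges are left out.

```python
# Figures quoted in the text: the spot price, and retail at ten times that amount.
SPOT_RATE_USD_PER_HOUR = 0.114
RETAIL_RATE_USD_PER_HOUR = SPOT_RATE_USD_PER_HOUR * 10

# Assumption (not from the text): ten hours of fooling around per month.
HOURS_PER_MONTH = 10


def monthly_compute_cost(hours: float, rate: float) -> float:
    """Compute-only cost in USD, rounded to cents (no disk or network charges)."""
    return round(hours * rate, 2)


if __name__ == "__main__":
    spot = monthly_compute_cost(HOURS_PER_MONTH, SPOT_RATE_USD_PER_HOUR)
    retail = monthly_compute_cost(HOURS_PER_MONTH, RETAIL_RATE_USD_PER_HOUR)
    print(f"spot: ${spot:.2f}/month, retail: ${retail:.2f}/month")
```

Under those assumptions the compute portion stays in pocket-change territory on spot pricing, which is the whole point of the exercise.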

NVIDIA Driver Setup

Next, I went and hacked down my k3s template to deploy a single machine and started to figure out how to set up NVIDIA drivers in Linux. Given the hassle involved, I decided to go with Ubuntu 20.04 (which is still LTS, and has a couple more years of testing).

However, first I had to make sure I was picking the right image. And in case you’ve been living under a rock or are still doing Stone Age style manual provisioning in the Azure portal, I’m one of those people who only do automated deployments, so when I rewrote the Azure template I had to account for the fact that the Standard_NV6 uses an older generation of OS images (generation 1 rather than generation 2, which means a different hypervisor baseline):

```json
"imageReference": {
    "publisher": "Canonical",
    "offer": "0001-com-ubuntu-server-focal",
    "sku": "[if(contains(parameters('instanceSize'),'Standard_NV6'),'20_04-lts','20_04-lts-gen2')]",
    "version": "latest"
},
```

Note that the `if` conditional above may seem lazy because I’m using `contains`, but that’s because I have also been playing around with the `Standard_NV6_Promo` SKU.
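To make the effect of that substring match concrete, here is a small Python sketch that mirrors the template expression. The function name `pick_image_sku` is made up for illustration, and the third instance size in the usage example is just an arbitrary non-NV6 name.

```python
def pick_image_sku(instance_size: str) -> str:
    """Mirror the template's if(contains(...)) expression for the image SKU."""
    # Case-sensitive substring match, like contains() on strings in the template.
    if "Standard_NV6" in instance_size:
        return "20_04-lts"       # generation 1 image
    return "20_04-lts-gen2"      # generation 2 image


if __name__ == "__main__":
    for size in ("Standard_NV6", "Standard_NV6_Promo", "Standard_NC4as_T4_v3"):
        print(f"{size} -> {pick_image_sku(size)}")
```

The trade-off is that any size whose name merely contains `Standard_NV6` gets the generation 1 image, so the check would need tightening if other SKUs with that prefix ever came into play.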

The next step was getting the NVIDIA drivers to actually install. And that turned out (after a couple of hours of investigation and a couple of tests) to be as easy as making sure my cloud-init script did two things:

- Installed `ubuntu-drivers-common` and `nvidia-cuda-toolkit`
- Ran `ubuntu-drivers autoinstall` as part of my “preflight” script to set up the environment.

This last command does all the magic to get the kernel set up as well, although there is a caveat: you’ll need a reboot before you can install some PyTorch dependencies (especially those that are compiled upon install and need `nvidia-smi` and the like to produce valid output, which only happens once the drivers are loaded).

But after a reboot, it works just fine:

```
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 515.65.01    Driver Version: 515.65.01    CUDA Version: 11.7     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  Tesla M60           Off  | 00000001:00:00.0 Off |                  Off |
| N/A   47C    P0    38W / 150W |   2505MiB /  8192MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|    0   N/A  N/A      3766      C   python3                          2502MiB |
+-----------------------------------------------------------------------------+
```
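Since the reboot caveat boils down to "does `nvidia-smi` run cleanly yet?", a setup script could guard the PyTorch steps with a check along these lines. This is a minimal sketch rather than the actual preflight script: `driver_ready` is a hypothetical helper, and it only assumes that `nvidia-smi` exits with a non-zero status when it cannot talk to the driver.

```python
import shutil
import subprocess


def driver_ready(command=("nvidia-smi",)) -> bool:
    """Return True if the command is available and exits with status 0."""
    executable = shutil.which(command[0])
    if executable is None:
        return False  # tool not installed, or not on PATH
    result = subprocess.run(
        [executable, *command[1:]],
        stdout=subprocess.DEVNULL,  # only the exit status matters here
        stderr=subprocess.DEVNULL,
        check=False,
    )
    return result.returncode == 0


def preflight_message() -> str:
    """Describe whether it looks safe to install GPU-dependent packages."""
    if driver_ready():
        return "GPU driver is up; safe to install PyTorch dependencies."
    return "NVIDIA driver not loaded yet; reboot before continuing."
```

The command is a parameter purely so the check can be exercised on a machine without a GPU; in normal use `driver_ready()` is called with no arguments.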

I’ve yet to test this on Ubuntu 22.04, but I suspect it will work just the same (I’m quite curious as to how nvidia-docker will work, although I didn’t need it for this).

And, of course, in the real world I would likely automate a pre-baked OS image so I could have fully reproducible deployments.

But one thing I made sure to set up already (besides adding Tailscale for easier remote access) was configuring the VM for automated shutdown at dinner time. You can do that via the portal in a few clicks, and I will be adding it to my template later.

After all, you absolutely must set guardrails on how you use any kind of cloud resource, especially if you’re experimenting, since that’s usually when things get out of control.
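For the template side of that guardrail, the portal's auto-shutdown setting is, as far as I know, backed by a `Microsoft.DevTestLab/schedules` resource. The sketch below builds such a resource as a Python dictionary and prints it as JSON. Treat the whole shape (API version, property names, the `shutdown-computevm-` name prefix) as recalled from the ARM reference rather than verified, and the VM name, `vmName` parameter, time, and time zone as placeholders.

```python
import json


def shutdown_schedule(vm_name: str, vm_resource_id: str,
                      time_hhmm: str = "1930", time_zone: str = "UTC") -> dict:
    """Build an auto-shutdown schedule resource for an ARM template.

    Assumption: field names follow the Microsoft.DevTestLab/schedules schema
    as best remembered; check them against the official reference before use.
    """
    return {
        "type": "Microsoft.DevTestLab/schedules",
        "apiVersion": "2018-09-15",
        "name": f"shutdown-computevm-{vm_name}",
        "location": "[resourceGroup().location]",
        "properties": {
            "status": "Enabled",
            "taskType": "ComputeVmShutdownTask",
            "dailyRecurrence": {"time": time_hhmm},  # 24-hour HHmm, e.g. dinner time
            "timeZoneId": time_zone,
            "targetResourceId": vm_resource_id,
        },
    }


if __name__ == "__main__":
    resource = shutdown_schedule(
        "sd-gpu",  # hypothetical VM name
        "[resourceId('Microsoft.Compute/virtualMachines', parameters('vmName'))]",
    )
    print(json.dumps(resource, indent=2))
```

Generating the fragment from code is just a convenience for the example; the same object could be pasted straight into the template's `resources` array.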

Picking a Version

I started out using imaginAIry and JupyterLab for Stable Diffusion 2.0, but I quickly realized that the new training set used for 2.0 is vastly different and omits most of the nice art styles that I’m rather fond of.

So I parked that for a while and decided to revert to 1.5 and the AUTOMATIC1111 web UI (which also supports 2.0 somewhat).

And I’m not at all sad about missing out on 2.0 for now, especially considering I can still get this kind of output out of 1.5:

[1] There was also a serendipitous coincidence in that I was asked to help with a couple of things, one of which also included setting up a GPU instance, so this was a good way to keep the momentum going once I clocked off work.