Winter is (definitely) taking hold, and I was foolish enough to walk around with a half-mended flu on the coldest day so far. As a direct consequence of that foolishness, I had to stay home for another week, so I definitely appreciated having taken the trouble to automate my heaters last year, and I believe it’s time for another installment in that particular quest.

Previous Installments

For those of you tuning in for the first time, here’s a list of related posts:

- Part 1 - Sonoff/Tasmota Wi-Fi Outlets

- Part 2 - Node-RED, Broadlink IR and Security

- Part 3 - Debugging, Xiaomi Zigbee sensors

- Part 3.5 - an interim update regarding Azure IoT integration

Current Status

Back in November I dug out a few of my ESP8266-powered sockets, plugged the heaters back in, and everything’s been just dandy.

Although there have been several Tasmota firmware releases throughout the year, I’ve held off on messing with stuff that works until there’s a solid reason to update the whole lot, and have instead focused on tackling three things: a stable back-end, media center automation, and Zigbee sensors.

The Back-End

I’m pretty happy with HomeKit as a platform (at least while Apple allows us to run hacks like homebridge, something that is always in the back of my mind), and 99% of my automation and scheduling is done through it (with the Apple TV as a hub), so what I do need is a good way to bridge devices to it and build the 1% that’s needed to talk to them.

Docker

Docker makes it trivial to set up the entire stack on a new machine, and both updates and rollbacks are pretty painless.

My stack essentially consists of three containers:

- homebridge with a few plugins (Dockerfile)
- zigbee2mqtt to talk to Zigbee devices (Dockerfile)
- Node-RED for glue logic, dashboards and custom accessories (Dockerfile)

I run my MQTT broker (mosquitto) and avahi-daemon outside Docker, both for simplicity’s sake and because running more than one HomeKit bridge on Linux tends to require careful management of Bonjour/Rendezvous. To make that work, I expose dbus to both containers so that they can talk to avahi-daemon on the host:

```yaml
node-red:
  image: "rcarmo/node-red:arm32v7"
  container_name: node-red
  restart: always
  volumes:
    - /etc/localtime:/etc/localtime:ro
    - /dev/rtc:/dev/rtc:ro
    - /var/run/dbus:/var/run/dbus
    - ${HOME}/.config/node-red:/home/user/.node-red
  network_mode: "host"

homebridge:
  image: "rcarmo/homebridge:arm32v7"
  container_name: homebridge
  restart: always
  volumes:
    - /etc/localtime:/etc/localtime:ro
    - /dev/rtc:/dev/rtc:ro
    - /var/run/dbus:/var/run/dbus
    - ${HOME}/.config/homebridge:/home/user/.homebridge
  network_mode: "host"
```

Node-RED

After a fair amount of tinkering with Python and asyncio, I realized that even though I am not overly fond[^1] of Node-RED, I was getting more bang for the buck from it than from just about everything else I tried. The way I see it, it has a bunch of upsides:

- Native MQTT integration

- Built-in (if flaky and crashy) HomeKit support

- Built-in web UI and dashboards (for managing and monitoring devices)

- Built-in documentation (you can annotate flows in Markdown, which is very, very useful)

- Single solution for automating just about everything

- Very tight development cycles: one click and you’re live

The downsides, for me, are:

- Any real logic has to be written in JavaScript, which is a pain in terms of debugging, data manipulation, and assorted other hassles

- It’s very hard to manage and version large numbers of flows

- Persistence and data storage are… iffy.

However, I’ve managed to work around some of it. I’m considering building my own “function” node around a language that transpiles to JavaScript, and I’ve started using SQLite as a backing store for anything important. More importantly, I found a moderately sane approach for versioning and backing things up.

Versioning

Data and configuration versioning is a bit of a pain, especially considering that all state (from Node-RED to zigbee2mqtt) is usually stored on disk as JSON files.

Even though Node-RED has an option to pretty-print its flow configuration, most of the others don’t, so I moved all the configuration files under ~/.config and hacked together a simple Makefile and Python script to rsync the entire tree across, pretty-print all the JSON files (with sorted keys) and commit them to a local git repo, which works pretty well:

```makefile
# Makefile
export SOURCE?=[email protected]:~/.config/
export TARGET?=home.lan
# Evaluate the date once, when make starts, instead of nesting backticks in the recipe
export TAG_DATE:=$(shell date -u +"%Y%m%d")

.PHONY: snapshot init

init:
	mkdir -p $(TARGET)
	git init

snapshot:
	rsync --delete-after -rvze ssh $(SOURCE) $(TARGET)
	python3 prettyprint.py # pretty print all the JSON files
	git add .; git commit -a -m "Snapshot on $(TAG_DATE)"
```

This is a bit heavy-handed, but also very quick:

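```python
#!/usr/bin/env python3
# Pretty-print every JSON file under the current directory, in place,
# with sorted keys so that git diffs between snapshots stay small.
from glob import iglob
from json import dumps, loads

for f in iglob("**/*.json", recursive=True):
    try:
        with open(f, "r", encoding="utf-8") as h:
            data = loads(h.read())
        # Serialize before reopening the file for writing, so that a
        # failure can't leave a truncated file behind.
        output = dumps(data, sort_keys=True, indent=4)
        with open(f, "w", encoding="utf-8") as h:
            h.write(output + "\n")
        print(f)
    except Exception as e:
        print(f"Could not pretty print {f}: {e}")
```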

Insane Hackery

Before moving on to more automation, here’s an aside that deserves mention: one of the reasons I opted for Node-RED was that it passed a trial by fire of sorts, which I’ll briefly describe here.

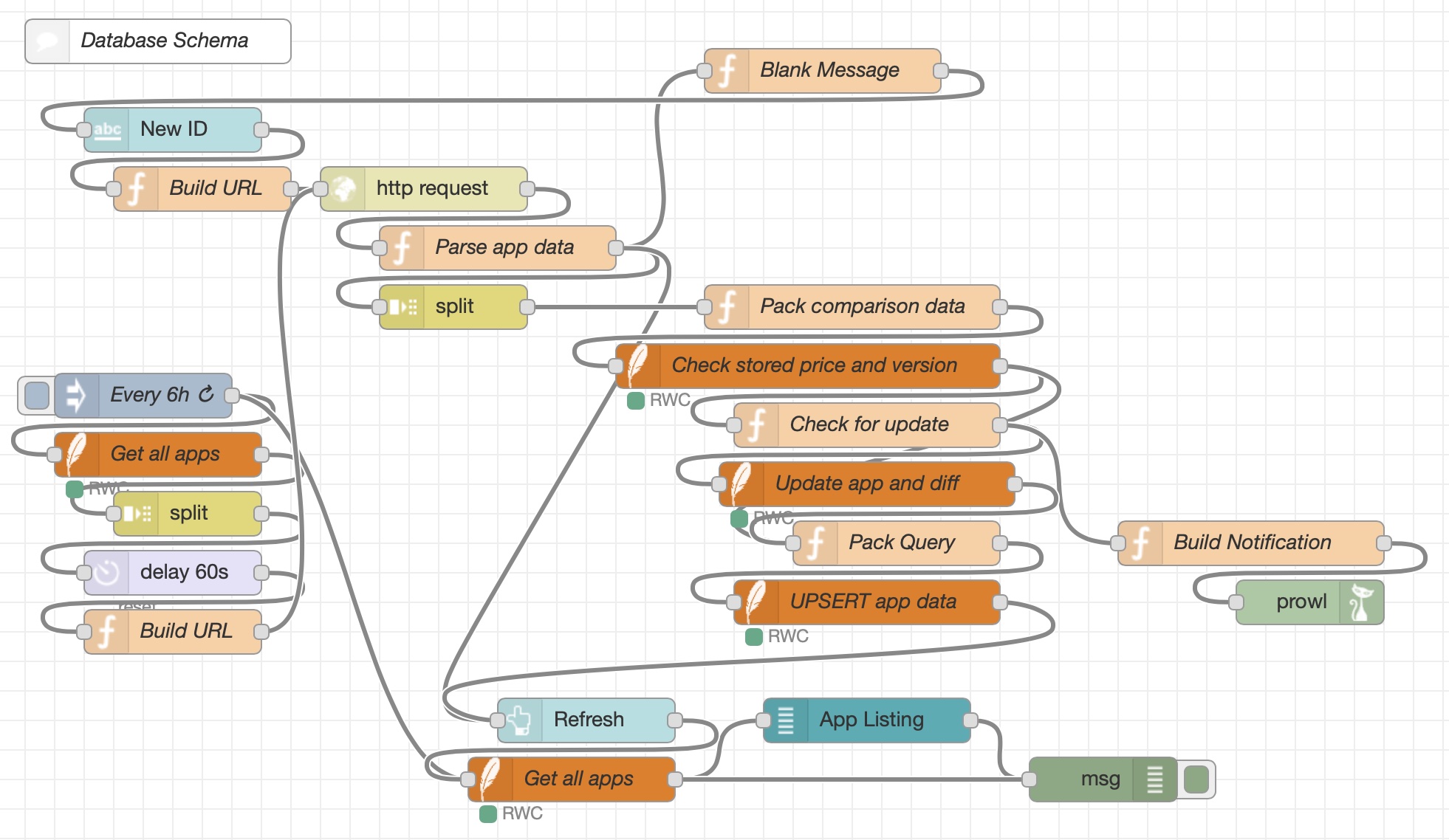

In a nutshell, due to my new music hobby I wanted to keep track of iOS and Mac App Store prices to keep tabs on holiday discounts for a few apps, and started building a small web app to do that until I realized that I could just as well push the envelope a bit and try to hack it together in Node-Red:

A particularly nice trick was splitting a stream of app IDs into lots of 10, querying Apple’s endpoint with one lot a minute, and then splitting out and checking the individual results, which cut down the number of HTTP requests with zero changes in logic (I would have done mostly the same in Python or Clojure, but it was neat nonetheless).
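Outside Node-RED, the same batching trick might look like the Python sketch below. It assumes the iTunes Search API `lookup` endpoint (which accepts a comma-separated list of IDs) and its `trackId`/`trackName`/`price` result fields; the post doesn’t say which Apple endpoint the flow actually calls, and the helper names are hypothetical.

```python
from typing import Iterable, Iterator, List
from urllib.parse import urlencode

# Assumption: the iTunes Search API "lookup" endpoint, which accepts a
# comma-separated list of IDs. The post does not name the endpoint it uses.
LOOKUP_URL = "https://itunes.apple.com/lookup"


def batches(ids: Iterable[int], size: int = 10) -> Iterator[List[int]]:
    """Split a stream of app IDs into lots of `size` (the last may be shorter)."""
    batch: List[int] = []
    for app_id in ids:
        batch.append(app_id)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch


def lookup_url(batch: List[int]) -> str:
    """Build a single request URL that covers a whole batch of IDs."""
    query = urlencode({"id": ",".join(str(i) for i in batch)})
    return f"{LOOKUP_URL}?{query}"


def split_results(response: dict) -> List[dict]:
    """Fan a batched response back out into one record per app."""
    return [
        {"id": r.get("trackId"), "name": r.get("trackName"), "price": r.get("price")}
        for r in response.get("results", [])
    ]
```

Fetching each `lookup_url(batch)` once a minute with any HTTP client and passing the decoded JSON through `split_results` reproduces the split/query/fan-out shape of the flow; the pacing is deliberately left to the caller.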

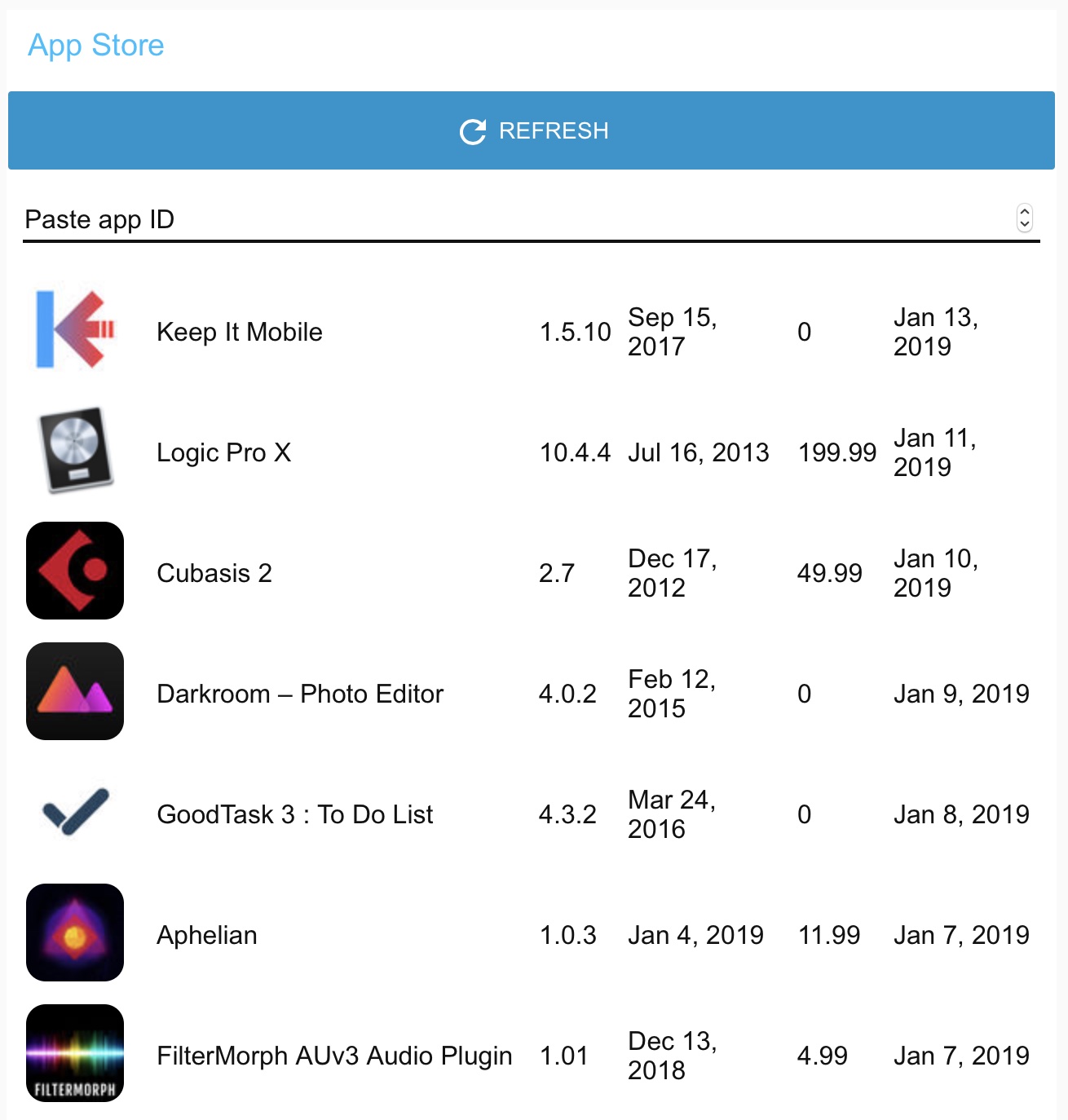

And yes, it works pretty well with the built-in, React-based dashboard UI components (and sends me timely mobile notifications with zero hassle, which is the entire point), even though the UX leaves something to be desired:

The ability to put something like this together and have a simple UI right off the bat (even if only for internal consumption) is pretty useful, and it took me a fraction of the time a “regular” app would, so… I’ll accept that trade-off against limited maintainability, at least for throwaway projects and prototypes.

And as we shall see, it is also more than good enough for low-level automation.

Media Center Automation

Thanks to the homebridge-webos-tv plugin, I can switch my LG Smart TV’s inputs, launch applications, set the volume (indirectly, by using a bogus lamp) and, of course, power it on and off, all of which work fine via Siri. The only thing I’m missing right now on the TV is switching audio to the optical output, which doesn’t appear to be exposed by LG’s webOS APIs.

Ascertaining the power state of other devices, however, is still janky. I can test whether the TV is on by poking TCP port 3000, but set-top boxes are still a pain, and in order to figure out whether the one in the living room is actually in use (and automate it properly), I had to resort to some finer hacking.
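A minimal Python sketch of that port-3000 check follows. It treats “accepts a TCP connection” as a proxy for “powered on”, an assumption that only holds as long as the TV stops listening on that port in standby; `tcp_port_open` and its one-second timeout are illustrative choices, not part of any plugin.

```python
import socket


def tcp_port_open(host: str, port: int = 3000, timeout: float = 1.0) -> bool:
    """Return True if something accepts a TCP connection on host:port.

    Port 3000 is the one mentioned for the TV; a successful connect is
    used here as a rough proxy for the set being awake.
    """
    try:
        # The connection is closed again as soon as it has been established.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts and timeouts.
        return False
```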

Mediaroom U-NOTIFY Packets

Fortunately, Vodafone Portugal uses Mediaroom for its set-top boxes (at least for the non-4K ones), and their firmware sends out UDP multicast announcements on 239.255.255.250:8082 that look something like this:

```
[opaque binary data]
U-NOTIFY *
HTTP/1.1
x-type: dvr
x-filter: a57adb11-167d-4772-b519-[REDACTED]
x-lastUserActivity: 1/5/2019 4:48:58 PM
x-location: http://192.168.1.66:8080/dvrfs/info.xml
x-device: 11811169-2e33-4e5b-b9c0-[REDACTED]
x-debug: http://192.168.1.66:8080
<node count='930795'>
  <activities>
    <p15n stamp='08D59C7E89AA028080B23404B499'/>
    <schedver dver='3' ver='0' pendcap='True' />
    <x/>
    <recordver ver='1' verid='23675' size='297426485248' free='291118252032' />
    <tune src='timeshift:///' pipe='FULLSCREEN' ct='0xdfe48c76827c89be' pil='0x0' rate='0x85aac0' stopped='false'/>
    <x/>
  </activities>
</node>
```

Yes, that is an XML payload. These boxes spray XML across my LAN, likely for the benefit of the remote control app, and the announcements go out whether or not the box is considered to be “on” (since it has local storage for recordings and “catch-up” pause/rewind TV functionality, the box is effectively always on).
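Receiving these announcements outside Node-RED only requires a UDP socket that joins the multicast group. This Python sketch uses the standard `IP_ADD_MEMBERSHIP` socket option; `open_listener` and `membership_request` are hypothetical helper names, and joining on all interfaces (`0.0.0.0`) is an assumption that may need changing on a multi-homed host.

```python
import socket
import struct

GROUP = "239.255.255.250"  # multicast group used by the Mediaroom announcements
PORT = 8082


def membership_request(group: str = GROUP, interface: str = "0.0.0.0") -> bytes:
    """Pack an ip_mreq struct (group address, then local interface address)."""
    return struct.pack("4s4s", socket.inet_aton(group), socket.inet_aton(interface))


def open_listener(group: str = GROUP, port: int = PORT) -> socket.socket:
    """Bind a UDP socket to the announcement port and join the multicast group."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    # Allow other listeners (Node-RED, for instance) to share the port.
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP, membership_request(group))
    return sock
```

Calling `open_listener().recvfrom(65535)` in a loop then yields one raw datagram (binary preamble included) per announcement.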

So in order to figure out whether the STB is in use and allow me to turn it on/off remotely via HomeKit, here’s what I have to do:

- Read a UDP packet from `239.255.255.250:8082` every 2 seconds
- Parse the headers to verify it’s this specific kind of packet
- Parse the XML payload and look for a `tune` node with `stopped` set to `false`
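The two parsing steps can be sketched in Python as follows. The sketch assumes that the text portion of each datagram starts at the `U-NOTIFY` marker after the binary preamble, that headers are `name: value` lines, and that everything from the first `<` onwards is the XML payload; `split_notify` and `stb_in_use` are hypothetical names.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List, Optional, Tuple

MARKER = b"U-NOTIFY"  # start of the textual part, after the binary preamble


def split_notify(datagram: bytes) -> Optional[Tuple[Dict[str, str], str]]:
    """Return (headers, xml_body) for a U-NOTIFY datagram, or None otherwise."""
    start = datagram.find(MARKER)
    if start < 0:
        return None  # some other kind of multicast traffic
    text = datagram[start:].decode("utf-8", errors="replace")
    headers: Dict[str, str] = {}
    body: List[str] = []
    for line in text.splitlines():
        stripped = line.strip()
        if body or stripped.startswith("<"):
            body.append(line)  # from the first tag onwards, it's all XML
        elif ":" in stripped:
            # Split on the first colon only, so URLs in values survive;
            # header names are lowercased for easier lookups.
            name, _, value = stripped.partition(":")
            headers[name.strip().lower()] = value.strip()
    # Defensively drop trailing NULs/whitespace that would upset the XML parser.
    return headers, "\n".join(body).strip("\x00\r\n\t ")


def stb_in_use(datagram: bytes) -> bool:
    """True for a DVR announcement whose <tune> element has stopped='false'."""
    parsed = split_notify(datagram)
    if parsed is None:
        return False
    headers, body = parsed
    if headers.get("x-type") != "dvr" or not body:
        return False
    try:
        root = ET.fromstring(body)
    except ET.ParseError:
        return False
    tune = root.find(".//tune")
    return tune is not None and tune.get("stopped") == "false"
```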

All of the above can be done with a five-step Node-RED flow. Parsing the XML might be a little too CPU-intensive, but the ability to drop events that arrive within a given time interval is built in and works great. It’s just hellishly difficult to debug in JavaScript.
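The “drop events within an interval” behavior (in Node-RED, typically the delay node’s rate-limit mode set to drop intermediate messages) is easy to express on its own. This is a hypothetical `DropWithin` gate with an injectable clock, so it can be exercised without actually waiting:

```python
import time
from typing import Callable, Optional


class DropWithin:
    """Let at most one event through per `interval` seconds; drop the rest."""

    def __init__(self, interval: float, clock: Callable[[], float] = time.monotonic):
        self.interval = interval
        self.clock = clock
        self._last: Optional[float] = None  # time of the last accepted event

    def allow(self) -> bool:
        now = self.clock()
        if self._last is not None and now - self._last < self.interval:
            return False  # too soon after the last accepted event: drop it
        self._last = now
        return True
```

Wrapping the parser as `if gate.allow(): stb_in_use(datagram)` means the XML only gets parsed once per interval, no matter how chatty the box is.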

The `tune` attributes vary and let me check whether the STB is playing live TV, a timeshift stream (such as the above), etc. I can match live TV against Vodafone Portugal’s EPG XML easily enough, but for now I’m OK with just figuring out whether the box is actually in use.

Since HomeKit is apparently in the process of being updated to support media players (and Smart TVs) properly, I intend to revisit this later in the year.

Parenthesis: The HDMI-CEC Anti-Unicorn

There’s a joke running around that people with fully working HDMI-CEC setups are “unicorns” of sorts, but I’m pleased to report that nearly everything I have works fine with HDMI-CEC.

Sadly, like pretty much every other Mediaroom box I’ve used (and I tested quite a few, both when I was at Vodafone doing the RFP for the IPTV service, and later at MEO), the production Vodafone STB firmware has zero useful HDMI-CEC functionality, which means it will remain gleefully “on” and sucking up bandwidth even when the TV is powered off, and it will not honor (or pass on) IR commands from the TV remote.

(It obviously needs to be on standby mode for handling local recordings and various housekeeping duties, but it being a special snowflake annoys me to no end, since I know they can deliver a better user experience than this.)

I can build a ruleset based on TV state and turn the STB “off” when I switch inputs on the TV or power the TV off completely, but that adds an extra control loop and a possible point of failure that I am hesitant to “fix” for Vodafone Portugal.

If you’re reading this, guys, just get it fixed: you’re wasting power and bandwidth whenever someone forgets to explicitly turn off the STB.

Zigbee

I got a dresden elektronik RaspBee board for testing in hopes of using it as my main Zigbee controller, but as it turns out their software is both proprietary and solely focused on REST APIs, which means I can’t integrate it directly with my MQTT-based setup.

It does have a nice GUI that runs on the Raspberry Pi (and it lets me peek and prod at Zigbee gear at a very low level), but it is effectively useless to me at this point save for testing, since I have yet to get it to join my existing Zigbee network as an extra router.

But right now, I have other priorities: coverage and reliability.

Coverage

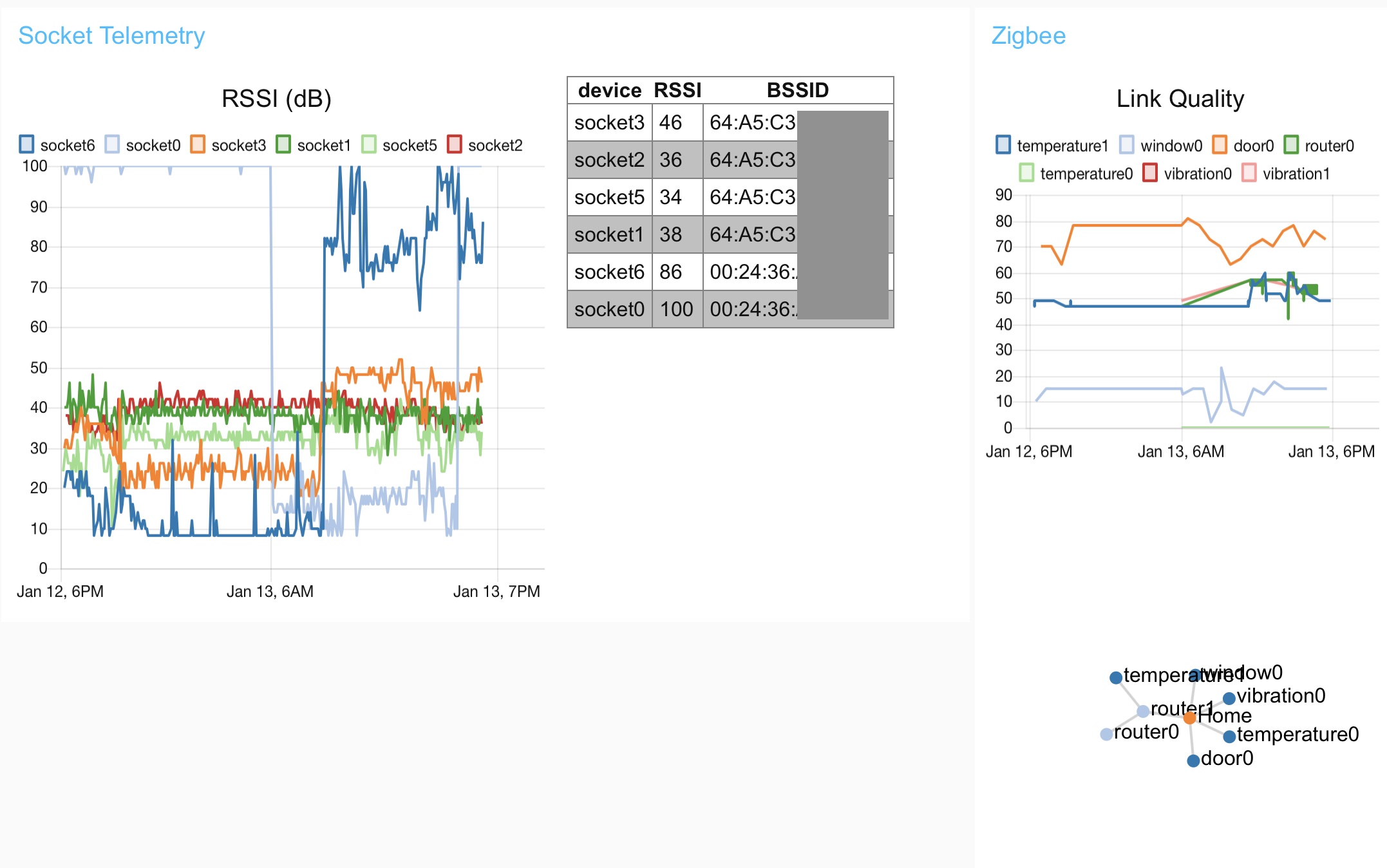

In order to address coverage, I simply bought two new CC2531 dongles, flashed them with the right firmware, and stuck one each on the back of my AirPort Extremes (which are placed in strategic locations around the house) to act as Zigbee routers.

It nominally works, but the mesh topology is still a bit wonky (devices report being attached to one of the routers at first, then appear connected to the coordinator):

I ascribe that partially to bugs in zigbee2mqtt and have rolled my own topology sniffer that picks off router updates on its own, but it is quite annoying not to have a stable way to verify things.

Reliability

As far as reliability is concerned, one of the temperature sensors keeps dropping off the grid for hours (I get that it theoretically should only report when something’s changed, but sometimes I am 100% sure there was more than enough change for it to trigger and yet nothing happens).

It also apparently doesn’t like to connect to any of the routers, although its sibling (and a cousin vibration sensor) has no qualms about it.

Finally, the Zigbee coordinator dongle hangs on occasion after restarting the server (i.e., no full power down), which is annoying (and is yet to be fixed).

But I like the idea of getting as much hardware off my Wi-Fi as possible, and am going to keep adding to my Zigbee setup (I ordered a few new sensors before Christmas) and upgrade the firmware as zigbee2mqtt evolves.

Hopefully things will settle in the meantime.

Different Ways To Handle Accessories

Right now, I have three kinds of HomeKit accessories, and hope to standardize on two:

- Things directly supported as homebridge plugins (my reflashed ESP8266/Tasmota devices, anything IR-based, my LG smart TV)
- “virtual” accessories supported by the homebridge-mqtt plugin that I can create and interact with solely via MQTT from Node-RED, but which are effectively handled by homebridge itself (most of my Zigbee sensors are running like this)
- “native” accessories that run the HomeKit protocol directly inside Node-RED (the Vodafone set-top box and the Zigbee sensors I haven’t migrated yet)

What this means is that I have two HomeKit virtual bridges running: homebridge itself (which handles the first two kinds of devices) and the Node-RED HomeKit “node” (which, despite my having switched to a new version, is still very flaky and tends to crash Node-RED).

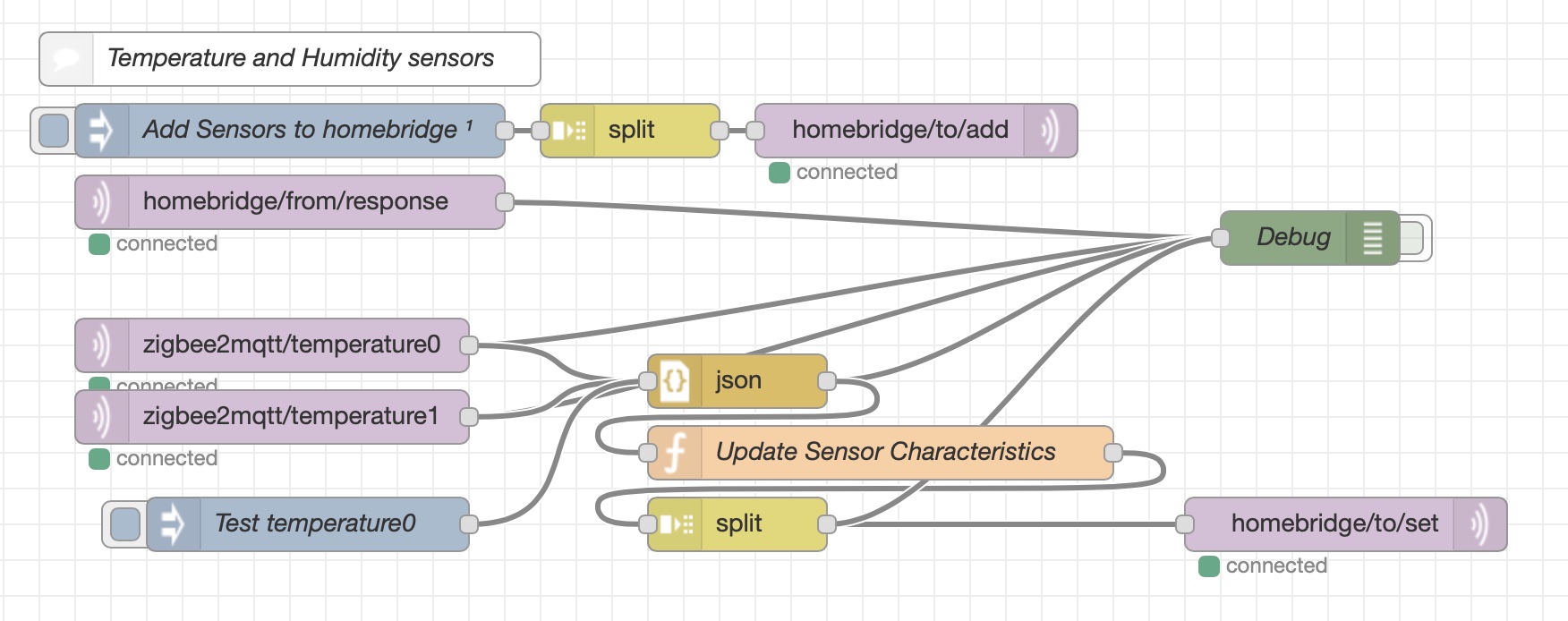

So I’m moving everything to homebridge-mqtt as time permits:

There’s also the advantage of enforcing separation of concerns: homebridge-mqtt lets me move everything related to the HomeKit protocol inside the homebridge process, and I can control the HomeKit shims via MQTT (and bridge them across to other things, like zigbee2mqtt) from Node-RED.
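As a sketch of that split, here is how Node-RED-side glue might turn a zigbee2mqtt state update (zigbee2mqtt publishes device state as JSON on `zigbee2mqtt/<friendly_name>`) into messages for homebridge-mqtt. The `homebridge/to/add` and `homebridge/to/set` topics and the `name`/`service_name`/`service`/`characteristic`/`value` fields follow the plugin’s README as far as I can tell and should be checked against the installed version; the helper names and the temperature-only mapping are illustrative.

```python
import json
from typing import List, Tuple

# Assumed homebridge-mqtt topic layout: verify against the plugin's README.
TO_ADD = "homebridge/to/add"
TO_SET = "homebridge/to/set"

Message = Tuple[str, str]  # (MQTT topic, JSON payload)


def add_temperature_sensor(name: str) -> Message:
    """Declare a virtual temperature sensor for homebridge to expose to HomeKit."""
    payload = {"name": name, "service_name": name, "service": "TemperatureSensor"}
    return TO_ADD, json.dumps(payload)


def from_zigbee2mqtt(name: str, state: dict) -> List[Message]:
    """Translate a zigbee2mqtt state update into homebridge-mqtt 'set' messages."""
    messages: List[Message] = []
    if "temperature" in state:
        payload = {
            "name": name,
            "service_name": name,
            "characteristic": "CurrentTemperature",
            "value": state["temperature"],
        }
        messages.append((TO_SET, json.dumps(payload)))
    # Other fields (battery, linkquality, ...) are ignored in this sketch.
    return messages
```

The point of the design is that nothing here speaks HomeKit: the functions only produce MQTT messages, and homebridge owns the protocol side.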

Next Steps

Since HomeKit has an all-or-nothing approach to access control (you can’t, for instance, specify that your kids can’t mess around with specific devices), and as part of other things I’m doing with bots, speech recognition and virtual agents, I’ve been tinkering with the ReSpeaker 2-Mics Pi HAT and several wake word engines, both to gauge the state of the art and to check whether it’s feasible to have a simple (and, hopefully, kid-friendly) voice assistant in the living room.

I’m not interested in using Google Home or Alexa/Echo (I recommend playing with the Alexa app on iOS and navigating to the recordings section, which is always a sobering reminder of what happens to the audio these things capture), but rather in seeing how far I can go with local speech recognition and (for kicks) in gluing some things to Azure Cognitive Services.

My current Raspberry Pi Zero can barely cope with running a “hot word” detector, so I ordered a couple of new Raspberry Pi 3A+ boards (which are quad-core and have a slimmer profile than the 3B+, although they’re sadly shorter on RAM) to tackle that and another side project of mine.

It’s going to be fun seeing where it all leads.

[^1]: Putting it another way, despite hating the dumpster fire of a language it’s built upon, which causes me no end of trouble whenever I want to do proper error handling and (guess what) data structures…