Pipes has been dead since 2015 or so, but I used it for a long time and it was essential to my daily news intake, so I’ve been building a personal replacement to cater to my specific needs.

The problem statement is simple–I’m looking to rebuild the collection of industry-specific RSS news feeds I follow through Reeder and Feedly on a daily basis, and add more smarts to it (trending topics, for starters, and then a curated feed of relevant news according to five or six topics).

But most of the sites or companies I want to keep track are either too niche or too corporate to bother having proper RSS feeds (or don’t have them for what I want to keep track of). Plus (and this is one of the new twists) some are written in German (which I can read, but not before my morning coffee), Swedish, Finnish, or even Korean.

So just like around a decade ago, I’m building a few custom scrapers and the logic to carve out some meaning from hostile web sites, and trying to do so in the easiest way possible.

Should I Go Web Scale?

I could write it all up in Python or Go again, and if I did I would most likely end up with a similar set of decoupled services:

- Fetchers and parsers communicating via

pubsub. - A writer process that takes the end results and writes them to a central store.

- A set of back-end classifiers and rankers that would also use

pubsubto keep track of changes and update the store with additional metadata. - An HTTP listener with read-only access to the store that just grabs the results and renders them as custom feeds so that Reeder can get at them via Feedly.

But as it turns out, this doesn’t need massive scalability (I’m only planning to track around a hundred or so feeds at most), and it becomes a little tedious to set up and maintain.

So I turned to piku again, and within all of 15 minutes, I had a running single-core VM with a working deployment of Node-RED running behind Cloudflare.

I then set out on a quest for piping data through it.

The New Low-Code Fashion is RED

I’ve been using Node-RED for home automation for quite a few years now (even down to maintaining my own cross-platform Docker images) and it’s been really stable, but I just never bothered with half of the built-ins except for building an app store price scraper a couple of years back.

Most of what I’ve done with it is a weird mix of MQTT and JSON piping with a couple of low-end UDP and multicast sniffing hacks to deal with HomeKit and my set-top-boxes as well as a bunch of quick and dirty dashboards for Zigbee temperature sensors, so this time around I consciously tried for a more modular, generic approach.

I also tried to avoid using third-party nodes as much as possible, since (like the rest of the npm ecosystem) there is a lot of outdated and broken stuff out there.

Fortunately, the core nodes have (so far) been more than enough, and you can go a long way with just four of them:

- The

xmlnode can parse both RSS and Atom trivially, and it takes around 5 lines of JavaScript to grab feed items off the results. - The

htmlnode can takejQuery-like selectors likea.tiles__content--link h4, which means it is able to scrape, slice and dice most web pages just fine (except for SPAs, but I don’t feel like using Puppeteer just yet). - The

httpclient node can now do persistent connections, and is quite fast at grabbing multiple pages off the same web server. - The

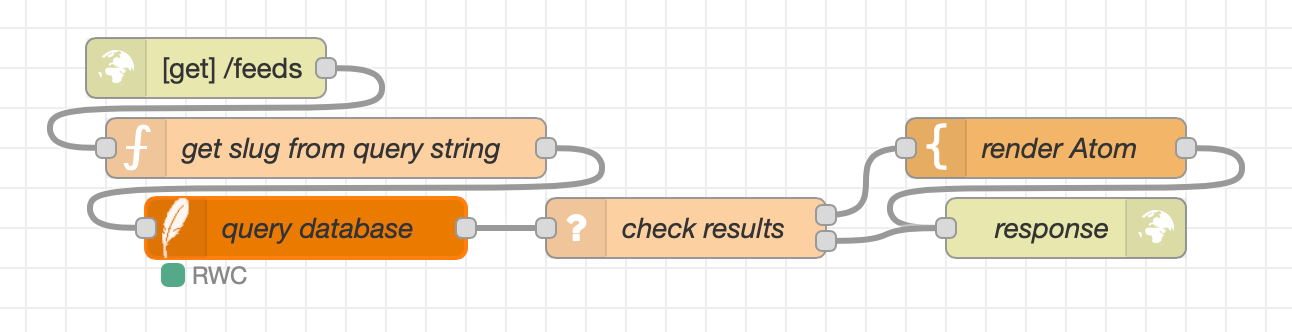

httpserver and response nodes make building an API a breeze:

The database, by the way, is provided by the node-red-contrib-sqlite node and currently has a pretty trivial schema (just feeds, items and topics, with straigthforward relationships) so isn’t exactly rocket science, either.

As an example, here’s the prepared statement for the query above:

SELECT

items.url as url,

items.title as title,

items.body as body,

strftime('%Y-%m-%dT%H:%M:%SZ', items.date, 'unixepoch') as date,

items.date as pubdate,

feeds.url as feed_url,

feeds.title as feed_title

FROM

feeds

JOIN items

ON items.feed_id = feeds.id

WHERE feeds.slug = $slug

ORDER BY pubdate DESC LIMIT 30;

Pushing down some trivial stuff (like date formatting and column renaming) into the engine is also another way to save time and increase responsiveness, so I do it whenever I can.

For instance, I use UNIQUE NOT NULL ON CONFLICT IGNORE on item url fields to simplify batch insertion handling (and implicit duplicate skipping), and also push down full-text searching into SQLite’s FTS engine, so it’s all blazing fast.

It’s all a matter of knowing your tools, really.

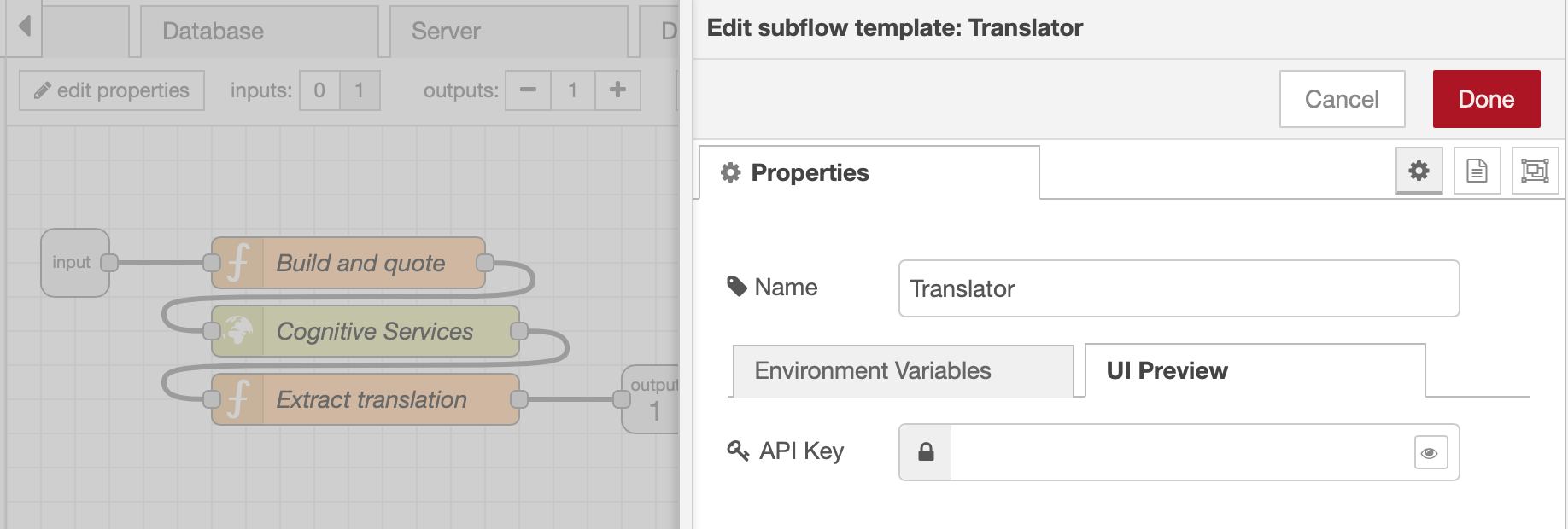

Also, somewhere in the past year or so Node-RED changed its UI for managing sub-flows, which means it’s much easier to have a modular approach with re-usable nodes–want a uniform way to clean up and store items? Just wrap a function node and a SQLite node in a sub-flow, and you’re done. And the same goes for filters, XML transforms, etc.

Build them once, test them, add them to the palette, wire them up in a pipeline, tie pipelines together… You get the idea.

So with some forethought and planning it’s pretty easy to have a Yahoo! Pipes-like experience - you just need to craft the right sub-flows with the right payload conventions, and voilá, you have your own palette of data transforms.

Example: Found In Translation

Translation turned out to be one of the easiest parts, since Node-RED’s HTTP node was also recently revamped to remove some of the weirdness of building custom HTTP requests.

Calling out to Azure Cognitive Services boiled down to building a generic sub-flow containing just three nodes (set up the request payload and custom headers, execute it, and unpack the results) as well as configuring a custom UI pane to paste in the API key:

Like all of my item processing nodes, it expects a consistent set of fields for each feed item, so I can toss in something that looks like:

{

"title": "Veckans nyheter",

"body": "Investeringsbank ..."

}

That will get formatted into an API request to the translation service, sent out, reformatted back into the right fields and output by the node like so:

{

"title": "This week's news",

"body": "Investment Bank ..."

}

…which conforms to the same schema I use across all my pipelines. So as long as I don’t deviate from that schema, I can slot this in anywhere.

Endgame

This means building a scraper for title-only, non-English feeds takes me around zero minutes right now, as all I have to do is drag one of my custom nodes to the canvas and link it to a scheduler that cycles through the URLs to fetch. It actually takes longer for me to subscribe to the new URL in Reeder.

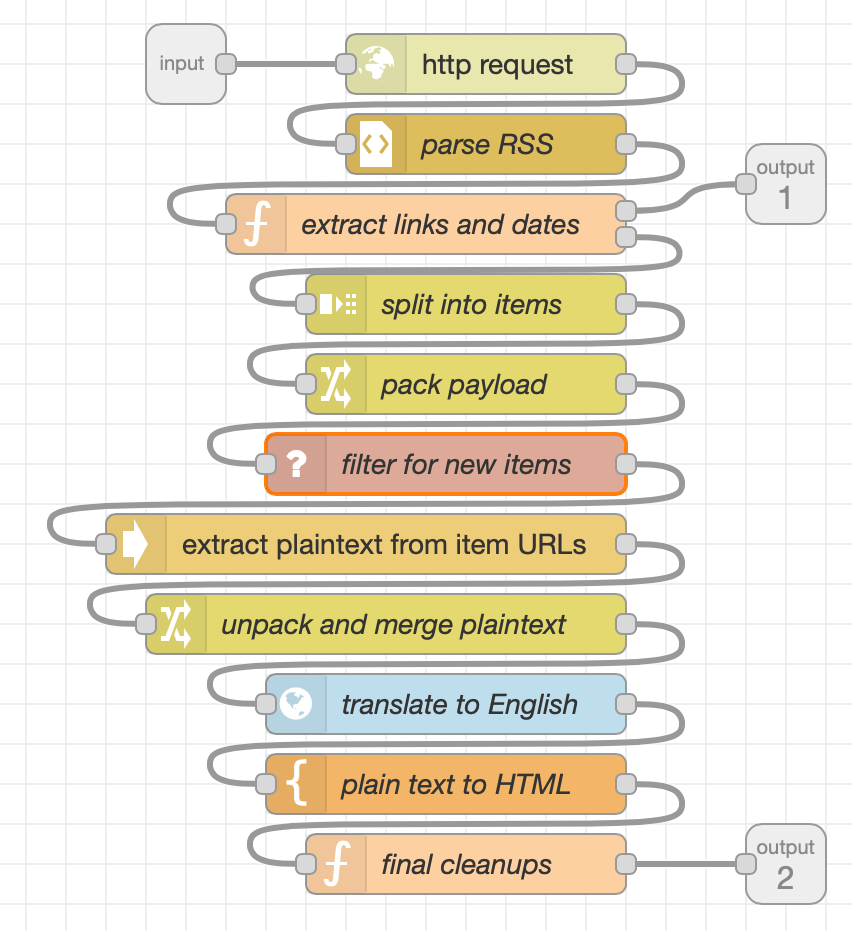

And each scraper follows more or less the same pattern (with minor variations depending on what it needs to do):

The filter, extractor and translator are all custom sub-flows as well, so it’s turtles all the way down.

There are a few other sub-flows for glue logic, but most of those are about scheduling refreshes and extracting topics from what is already in the database–again, it’s all a set of decoupled “processes”, and they could easily be extracted and run on their own compute contexts.

If Node-RED ran on Azure Functions, it would be a poster child for serverless low-code buzzword bingo…

Caveats

The only real issues I have with this setup are:

- Doing data munging in JavaScript is tedious and error-prone. I keep having to toss arrow functions into

Array.forEach, which is annoying. - Function nodes can only have one input, so there’s no easy way to (for instance) only act when two inputs are “finished” and available for processing. It requires some getting used to (and some creative workarounds for complex logic).

- Node-RED is still painful to debug sometimes (nodes have untyped inputs, so it’s easy to inject quasi-invalid data and have it blow up somewhere in the middle of a pipeline).

Other than that, it’s extremely fast, easy to maintain and quite cheap (thanks to piku it’s running on the same single-core VM that renders this site).

Since it’s all asynchronous fetching a few dozen feeds, translating them and writing them to the database takes only a few seconds, and Feedly only checks for the results every half hour or so, so there is no real need for a high-performance pipeline yet (and plenty of headroom).

And sitting behind Cloudflare it can probably even have a few thousand users hammering it, so I’d say it’s pretty fit for purpose and cost effective.

Not as much fun as coding the whole thing from scratch, perhaps, but much less hassle altogether, and frees up some time to do more interesting stuff.