404 Gallbladder Not Found

Putting off health matters is never a good idea, and my gallbladder took the opportunity to painfully remind me of that this past Thursday evening. Excruciatingly so.

The upshot of this was that I spent a few days in the (aptly numbered) room 404 of a nearby hospital recovering from an unscheduled laparoscopy–which, if you haven’t kept up with medical jargon for the past 125 years, is the quaint (and handily reversible) technique of temporarily inflating humans and turning them into bagpipes with the goal of sticking various pointy implements into them and patching up misbehaving bits in the abdominal cavity.

Somewhat like miniature drone warfare, but in squishier, more constrained environs and with a better soundtrack (the playlist they had on in the OR as they put me under was quite cheery and upbeat).

The Key Lesson

Now, I’ve had highly unusual and unscheduled hospital stays before, and this one was comparatively “fine”, even though the hours leading up to it were excruciatingly painful. And serendipitously, I ended up staying in room 404 for extra geek cred.

The key lesson here for those of you following at home is that this was not unexpected–I had plenty of warning, went through a few rounds of additional consultations, and should have planned this out and had it done as overnight/outpatient surgery over a year ago.

But the demise of Azure for Operators, the resulting scramble for a “safer” role and the recurring rounds of layoffs led me to neglect the situation until it was a tad out of control–and this is exactly what I shouldn’t have done. I wasn’t at any truly serious risk, but the operative word here is “truly”–it could have been worse.

The Usual Existential Questions

Do I blame work? Yes.

And the reason why will be familiar to most people working in large corporations–no matter how many internal e-mails you get saying that your health is important and you should take time to take care of yourself, the peer pressure to do other things is always there, and that kind of internal propaganda quickly starts to ring hollow.

That implicit pressure is most apparent if, like me, you work across multiple time zones by default, and it actually got a bit worse in my current role because I occasionally need to coordinate with sales teams (which are chaotic by default and have no notion of planning or schedules) and customers (who are my top priority during delivery but obviously work on their own time). My current manager is an amazing person and told me time and again to take it easy, but there was only so much he could do–I’m the one who should have done more.

Other things like stress, organizational instability, recurring layoffs and constant trampling of any calendar slots I tried to set apart for myself (from having a quiet meal to being able to go out and exercise) were a factor in my letting things slide, yes, but they’re just details–I mostly blame myself for not pushing back.

Doing things as simple as saying no (which is hard for me to do) or walking away from messy projects (which I avoid, since it is kind of anathema to my reputation as a “fixer”) might have helped, yes, but in retrospect I am just not sure–it’s been a crazy couple of years.

What Have I Learned (If Anything)

Although I know I will be back at work the day the doctors cut me loose, I can’t help but remark that spending a weekend in blissful solitude has been… fun: walking nearly a dozen kilometers¹ around the (nice, modern, tidy but expensive) hospital to help my sutures settle (pro tip–walking and taking slow, deep breaths does wonders for relaxing your abdomen), catching up on books and movies, messaging friends and finding overly creative ways to bypass the hospital Wi-Fi (which is OK but sits behind a stupid Fortinet firewall that blocks Tailscale). Fun, although a tad expensive, and unusually concerned with blood and other fluids as hospitality goes.

What these few days did was cement the idea that I would instantly leave my current job if I had a financially viable alternative that allowed me to walk or cycle to work and truly take care of myself instead of, well, just being spammed with e-mail about it (even though I am lucky enough to be working with the best people I have ever met at my current employer).

Not Much Will Change Though

Sadly, relocating to California, London or Zurich is not an option (and would likely not be an immediate improvement in quality of life anyway, regardless of income and personal challenges).

There are no job alternatives for me in Portugal either. As I was discussing with a dear friend of mine last week (who has held several key roles as a headhunter for C-level positions), there are zero equivalent roles out there right now, and Portuguese companies are stupefyingly stingy, backwards and ageist. They tend to hire people based on their public presence and job titles rather than their intrinsic value, skills and ability to deliver (in true banana republic style, you will often find former salespeople or consulting lobbyists with questionable backgrounds leading pretty high-profile technology organizations over here, which should give you an idea).

So as an “architect”, I am invariably cast as a techie rather than someone who actually manages people, resources and a budget with a sizable number of digits, and I’m off most people’s radars.

I’ve sort of learnt to deal with it, but the backwardness of this country can be grating sometimes–we do have excellent surgeons, though.

Conclusion

And this, in a nutshell, is why there haven’t been any geeky updates this weekend. Apologies for the mild ranting, but having at least five holes poked in you does lend itself to coloring one’s mood.

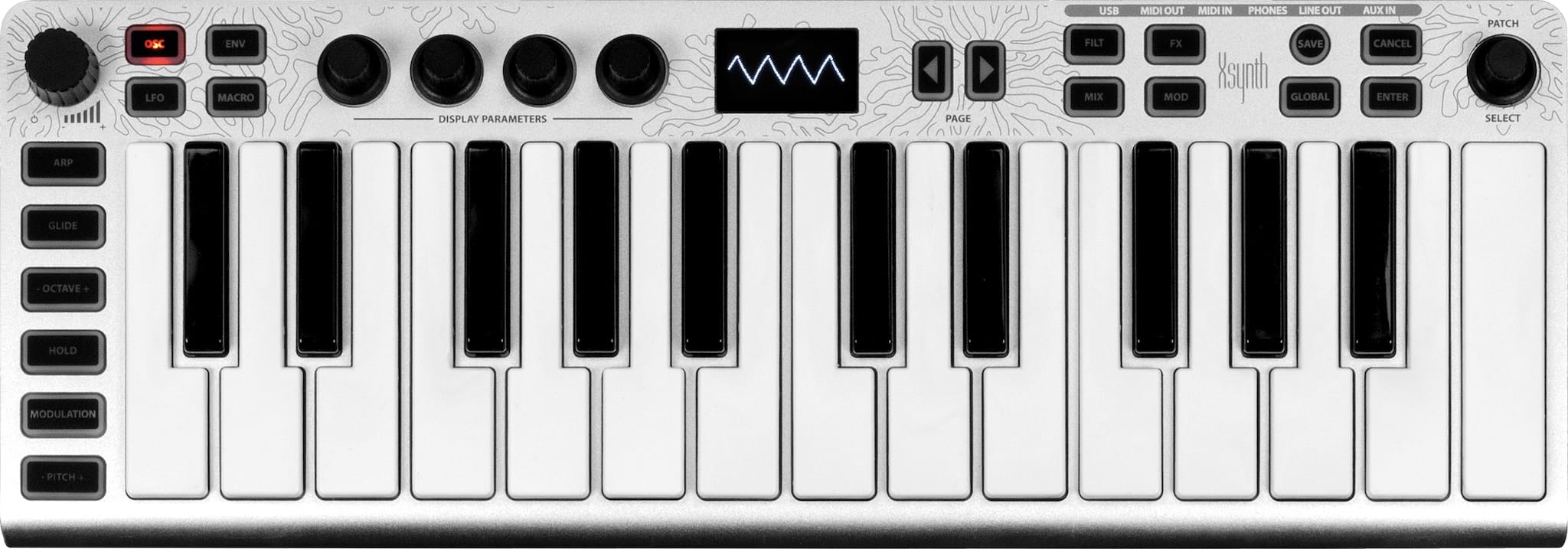

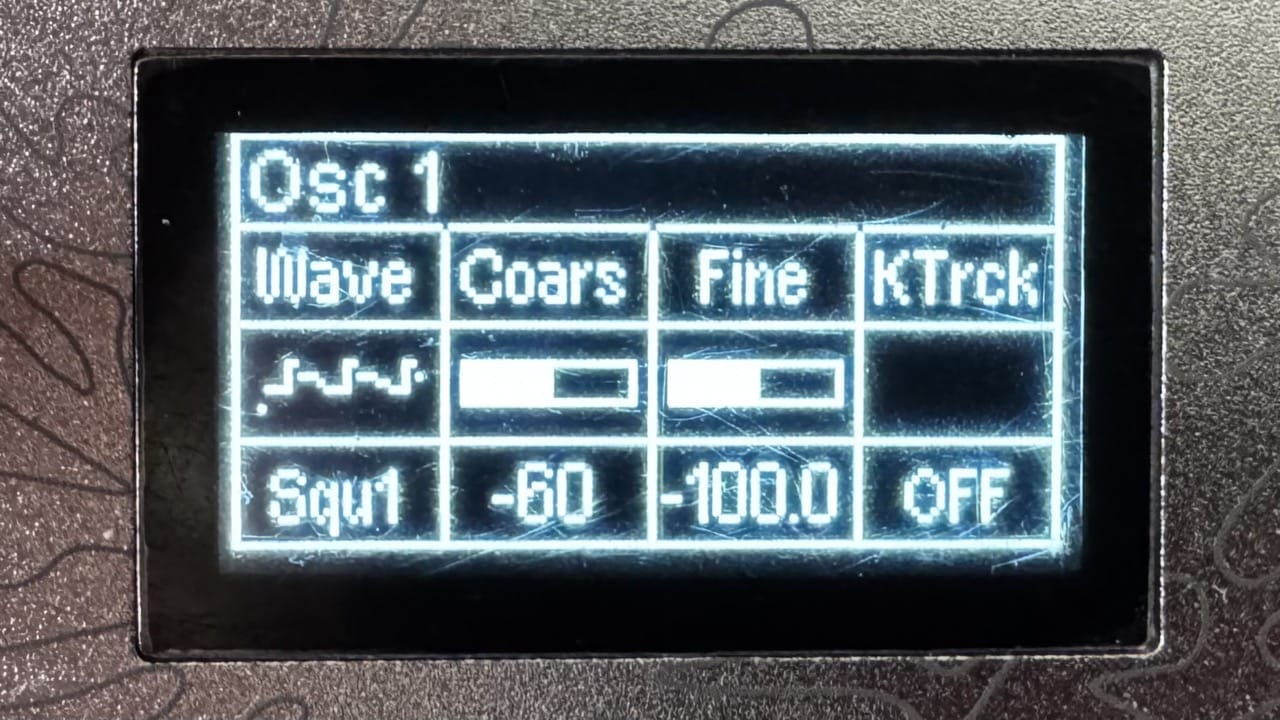

As to the next few posts you’re likely to read, I have a few bits of hardware I’m looking at (and more on the way to review) and I was planning to publish a project last weekend, but for the moment I’ll focus on catching up, adjusting my diet and taking it easy this week.

-

¹ This according to my Apple Watch and my right heel, which now sports a blister that is actually slightly more painful than the largest scar (by the way, surgical glue has come a long way).