Accelerando, But Janky

The past couple of weeks have been sheer madness in the AI hype space, enough that I think it’s worthwhile capturing the moment for posterity.

The Madness

A key realization I had this Thursday is that I regret going back to Twitter/X, because everything there is so loud. I went back to follow Salvatore, Armin and Mario, so my “For You” feed is now all AI, all the time, and although I can tune out the FOMO it induces, the pace is exhausting, particularly around OpenClaw.

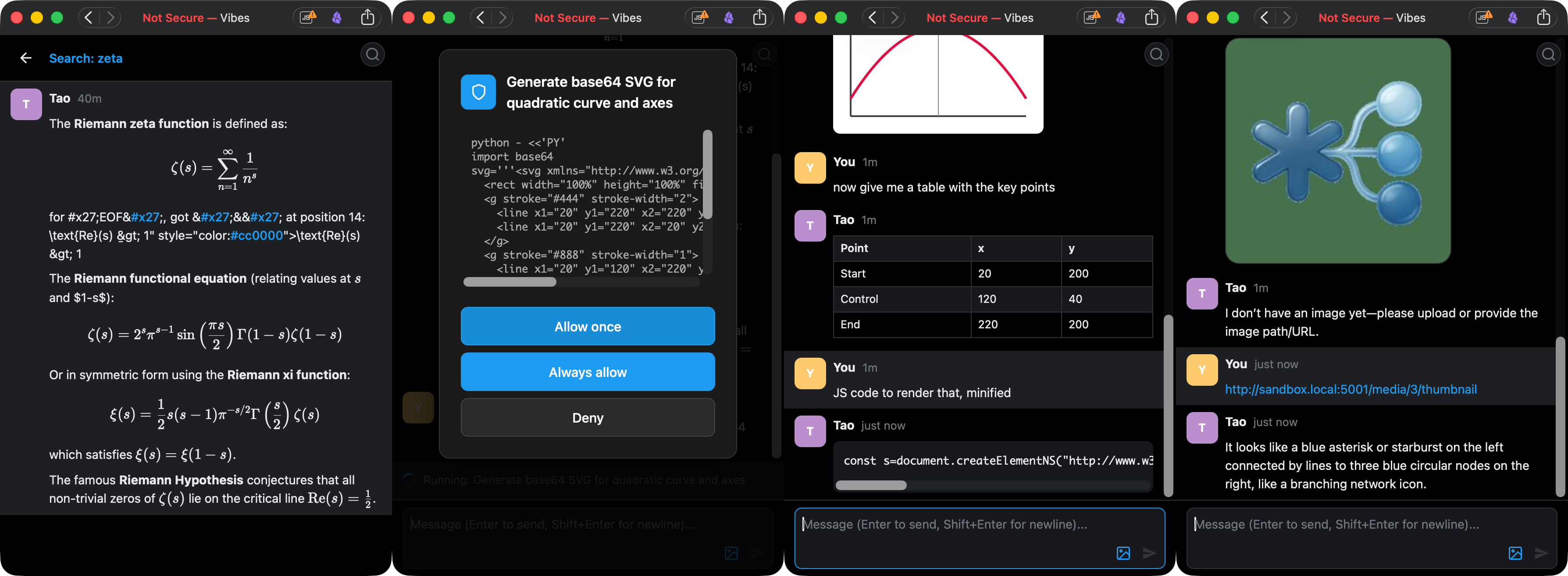

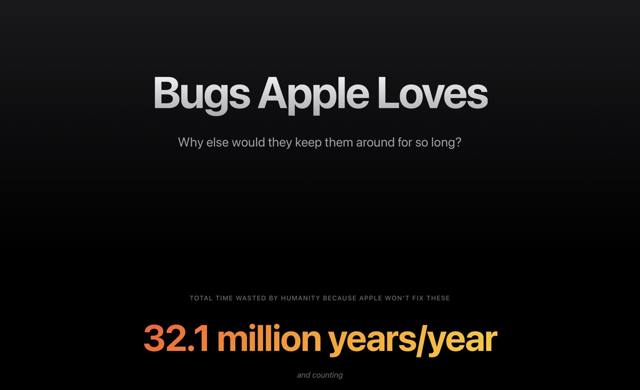

OpenClaw might be a blip, but right now it has spawned another Cambrian explosion of DIY agents, with everyone and their lobster creating minimal/more secure/tailored versions of it. If sandboxing was already high on everyone’s mind, the fact that people are actually giving API keys and “money” to a set of convoluted digital noodles running in a JavaScript runtime with administrative privileges drove everyone over the edge in various ways.

In short, it’s the Wild West, and there are no stable, clear patterns emerging yet.

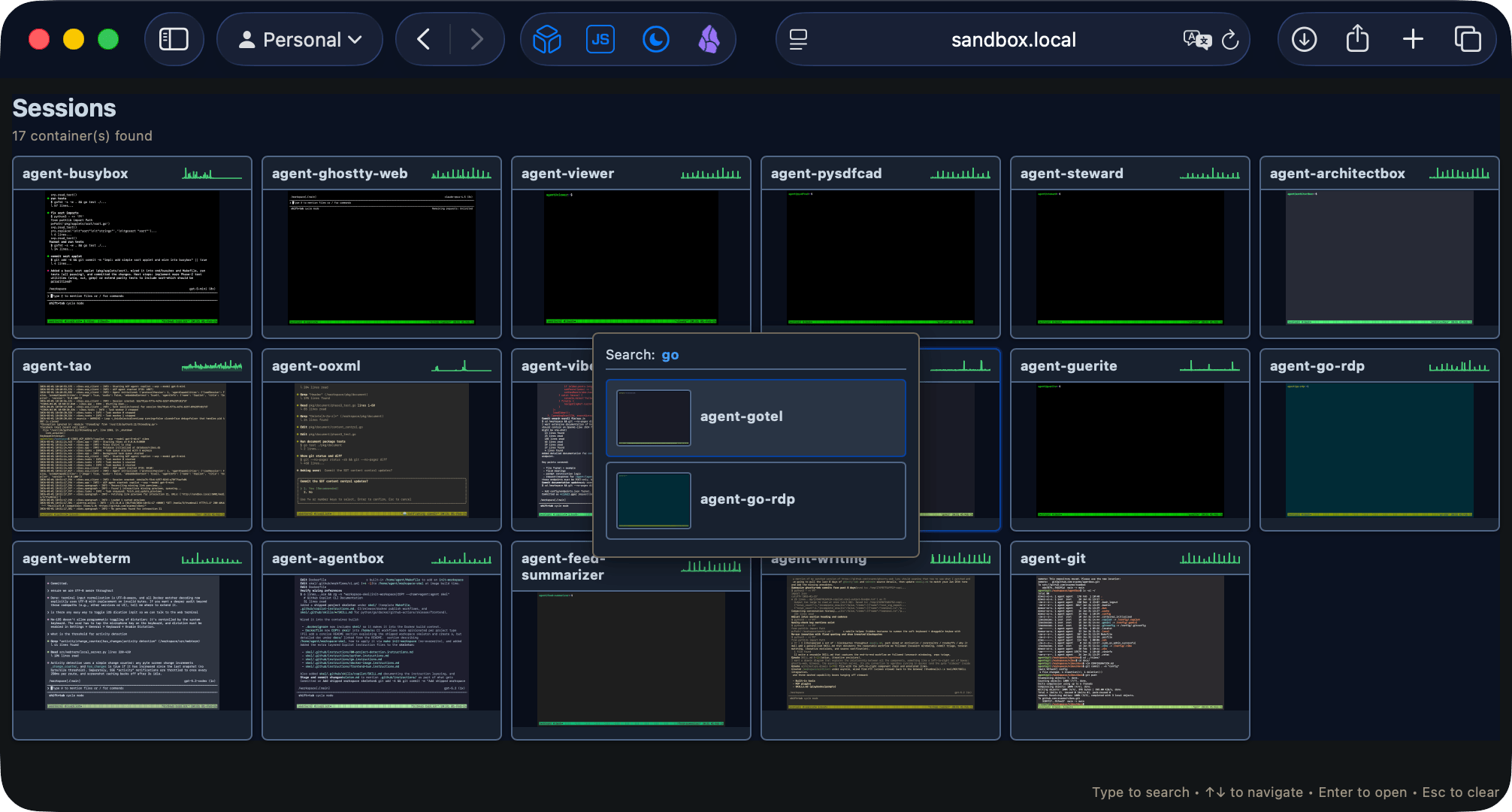

Which is why I’ve decided that my current sandboxing solution is going to stay the way it is for the foreseeable future. I’m still developing a sandboxable/WASM-ready busybox clone, but I’m going to wait it out until proper reusable patterns emerge (spoiler: I don’t think we’ll get to a consensus this year, unless sticking to containers is a consensus).

The Frontier

Both Anthropic and OpenAI launched their incremental updates this week, pretty much on schedule, confirming my years-long assertion that the diminishing-returns phase of LLMs would be compensated for by relentless optimization rather than by amazing breakthroughs.

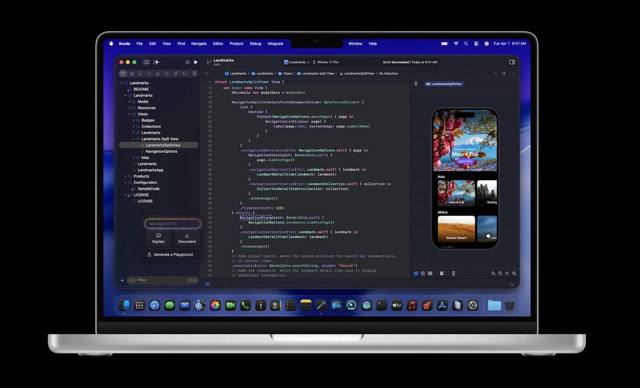

Still, both are pretty decent upgrades. Since I have some fairly big SPEC-driven projects built with their previous iterations, I let both loose on those codebases (I have taken to asking for a code smell/best practices/logic and security audit, including writing fuzzing tests), and both Opus 4.6 and Codex 5.3 spotted a few issues.

What they both lack, sadly, is taste. Claude models tend to be much better at creating UIs than Codex but write absolutely shit tests, while Codex will often design API surfaces that make sense but are cumbersome to use. Neither of those traits (nor the annoying “personality” twists some stupid product managers insist on instilling in the models) was fixed by this week’s updates.

The demos are, of course, amazing, but what should matter at this point is accuracy (against specs), correctness (of code), and speed (which is what Codex 5.3 improved for me). I don’t particularly care about other people’s use cases, and neither should you.

Engineering Skills

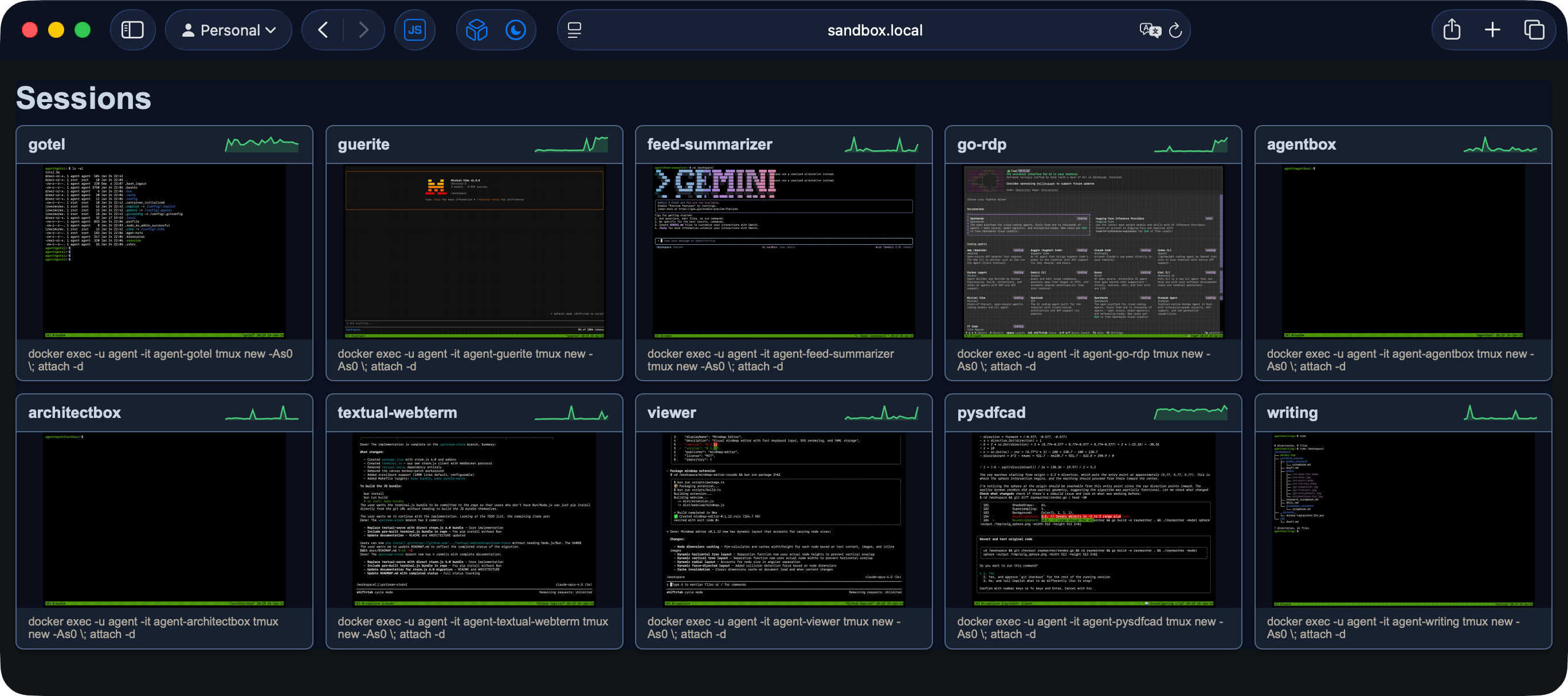

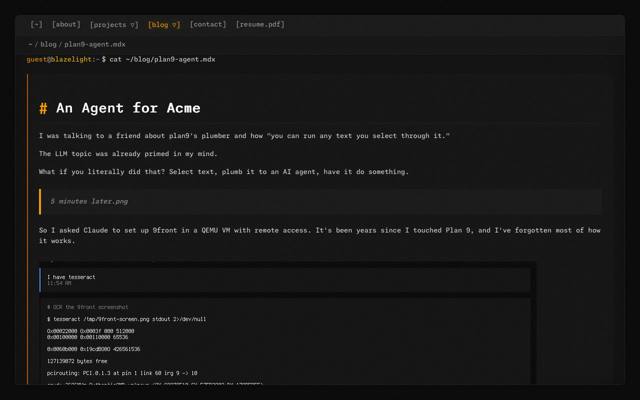

I’ve been sticking to the new GitHub Copilot CLI because I can get any frontier model on it, so I’ve been isolated from the OpenCode/minimal agent hype. I do like Pi and its minimal, shell-like workflow and approach, but I am still convinced that we need higher-level tooling (because, again, my ultimate goal is not to build coding agents).

Regardless of tools, people have finally cottoned on to skills, and although I think there is a relapse into the voodoo/cargo-culting of early prompt engineering, there are a few useful nuggets out there worth collecting and adapting.

My take has been to fold them into a skel folder in agentbox and, for every new project, to ask Copilot to "take the skills, workflows and instructions from this URL and adapt them to the scope of our SPEC.md". Both Codex and Claude are smart enough not to just duplicate the structure but to rewrite the skills to better suit the project, which is delightful.

Then during the project I will often ask Copilot to capture a specific workflow into a new skill (for instance, this week I got some feedback on Swift development, and that is now codified in this file).

And after things stabilize a bit, I can take any truly new skills or useful updates and put them back into my little archive, rather than collecting random stuff off the Internet that might never really suit my workflow and tooling.

Media

One of the few worthwhile outcomes of dipping into Twitter/X is that I can gauge the mass market impact of image and video generation with a broader view than just hanging around r/StableDiffusion, and this week was quite interesting in that regard.

Kling has been seeding social media with pretty amazing (but still detectable) AI shorts. They claim Hollywood is dead, but realistically I’d say video advertising (where impactful short content rules and there is maximal return) is going to be revolutionized, because good creatives will certainly know what to do with it.

That is worrying on several fronts, especially considering that even official sources seem to be using AI-generated media these days.

But I am cautiously optimistic that once visual inconsistencies are sorted out (or at least minimized and papered over by human SFX editors) we might see some actually good content coming out of it.