Gaming hasn’t exactly been one of my most intensive pastimes of late–it has its highs and lows, and typically tends to be more intensive in the cold seasons. But this year, with the public demise of Switch emulation and my interest in preserving a time capsule of my high-performance Zelda setup, I decided to create a VM snapshot of it and (re)document the process.

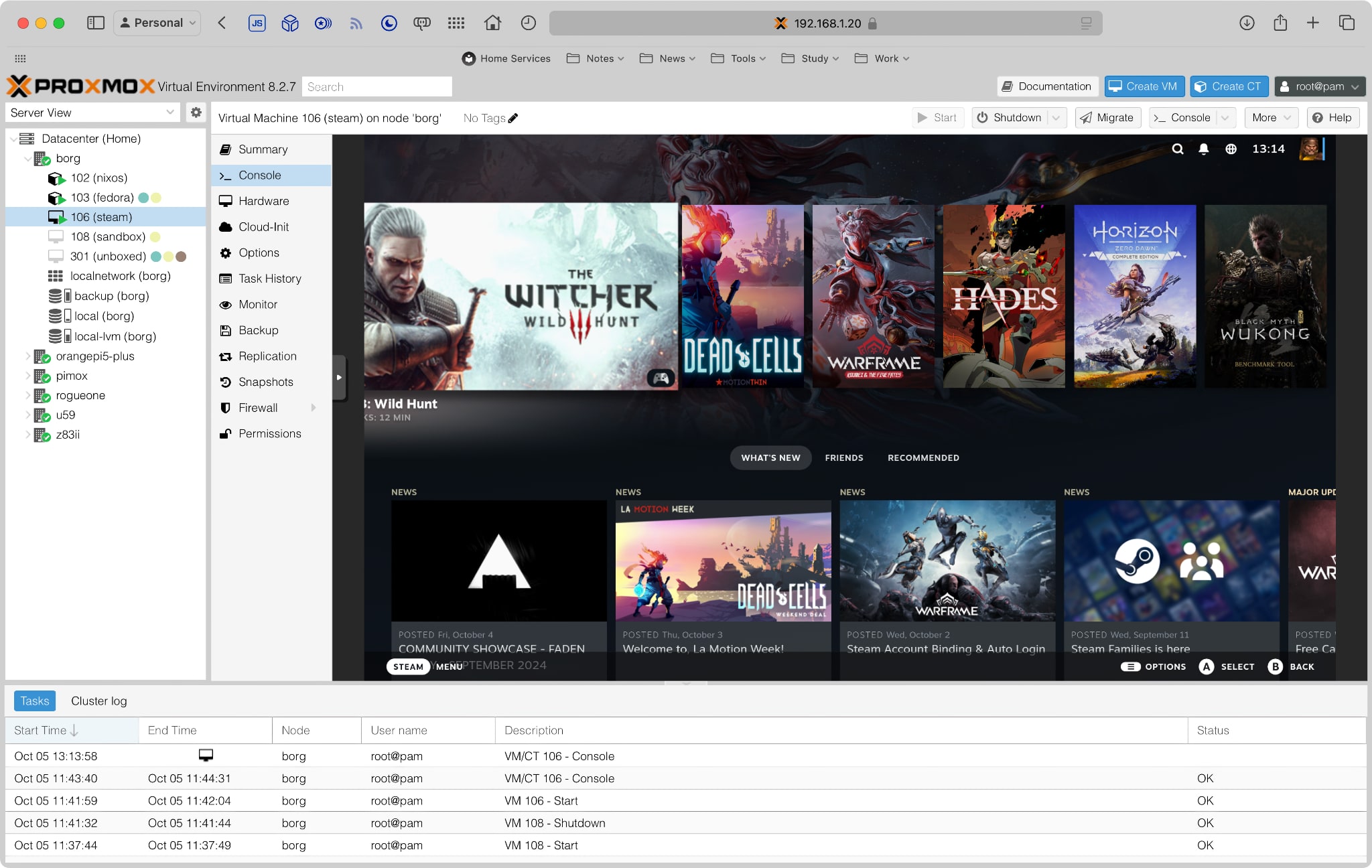

Either way, these steps will yield a usable gaming VM inside Proxmox that you can run either emulation or Steam on and stream games to anything in your home. Whether you actually want to do this is up to you.

Disclaimer: Let me be very clear on one thing: I am doing this because I really like playing some of my older games with massively improved quality[1], and because of the sheer simplicity of the seamless transition between handheld, TV and (occasionally) my desktop Mac, without having to mess around with cartridges or dust off my PlayStation. And also because, to be honest, Steam looks like the only way forward in the long run.

Background

From very early on, I’ve been using Bazzite as a Steam Link server for my Logitech G Cloud and my Apple TV, but I stopped running it on a VM because I needed the NVIDIA RTX 3060 in borg to do AI development, and you can’t really share a single GPU (in a single-slot machine, to boot) across VMs without hard-to-maintain firmware hacks–although I did manage to automate switching VMs on and off using HomeKit.

In short, this isn’t my first rodeo with gaming VMs. It’s not even the tenth.

Bazzite

If you’re new here (and to Bazzite), it’s a Fedora immutable OS that you can actually install on a Steam Deck and many gaming handhelds, and that I’ve been using since its earliest days. I run other Atomic spins (as they are called) on other machines, but Bazzite is literally the simplest way to do Linux gaming, and I actually had a VM set up to do exactly what I’m documenting in this post.

But Bazzite really shines on modern Ryzen mini-PC hardware, so I moved most of my gaming to the AceMagic AM18. The only caveat was that I couldn’t do 4K streaming (which was actually fine, since in Summer I have less tendency to game on a TV).

However, I ended up deleting the Steam VM from borg, and now I had to set it up all over again–and this time I decided to note down most of the steps to save myself time should it happen yet again.

Installation

This part is pretty trivial. To begin with, I’ve found the GNOME image to be much less hassle than the KDE one, so I’ve stuck with it. Also, drivers are a big pain, as usual.

Here’s what I did in Proxmox:

- Download the ISO to your `local` storage–don’t use a remote mount point like I did, it messes with the CD emulation.
- Do not use the default `bazzite-gnome-nvidia-open` image, or if you do, rebase to the normal one with `rpm-ostree rebase ostree-image-signed:docker://ghcr.io/ublue-os/bazzite-gnome-nvidia:stable` as soon as possible–the difference in frame rates and overall snappiness is simply massive.

A key thing to get Steam to use the NVIDIA GPU inside the VM (besides passing through the PCI device) is to get rid of any other display adapters.

- You can boot and install the Bazzite ISO with a standard VirtIO display adapter, but make sure you switch that to a SPICE display later, which will allow you to have control over the console resolution.

- Hit `Esc` or `F2` when booting the virtual machine, go to `Device Manager > OVMF Platform Configuration` and set the console resolution to 1920x1080 upon boot. This will make a lot of things easier, since otherwise Steam Link will be cramped and blurry (and also confused as to what resolutions to use).
- Install `qemu-guest-agent` with `rpm-ostree install qemu-guest-agent --allow-inactive` so that you can actually have ACPI-like control of the VM and better monitoring (including retrieving the VM’s IP address via the Proxmox APIs for automation).
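With the guest agent running, `qm guest cmd 106 network-get-interfaces` on the Proxmox host returns the VM's interfaces as JSON. Here is a minimal sketch of pulling the first usable IPv4 address out of that reply. The function name and the sample payload (including its addresses and interface name) are made up for illustration, and the field names are the ones the QEMU guest agent normally reports, so check them against your own output.

```python
import json
from ipaddress import ip_address


def first_ipv4(interfaces):
    """Return the first non-loopback IPv4 address in a guest agent reply, or None."""
    for iface in interfaces:
        for addr in iface.get("ip-addresses", []):
            if addr.get("ip-address-type") != "ipv4":
                continue
            ip = ip_address(addr["ip-address"])
            if not ip.is_loopback:
                return str(ip)
    return None


# Hypothetical payload shaped like a guest agent reply (addresses are invented)
AGENT_SAMPLE = json.loads("""
[
  {"name": "lo",
   "ip-addresses": [{"ip-address-type": "ipv4", "ip-address": "127.0.0.1", "prefix": 8}]},
  {"name": "enp6s18",
   "ip-addresses": [
     {"ip-address-type": "ipv6", "ip-address": "fe80::be24:11ff:fe88:a424", "prefix": 64},
     {"ip-address-type": "ipv4", "ip-address": "192.168.1.106", "prefix": 24}]}
]
""")

if __name__ == "__main__":
    print(first_ipv4(AGENT_SAMPLE))
```

Skipping loopback and IPv6 entries keeps the result usable as a Steam Link or RDP target without further filtering.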

This is my current config:

# cat /etc/pve/qemu-server/106.conf
meta: creation-qemu=9.0.2,ctime=1727998661
vmgenid: a6b5e5a1-cee3-4581-a75a-15ec79badb2e
name: steam
memory: 32768
cpu: host
cores: 6
sockets: 1
numa: 0
ostype: l26
agent: 1
bios: ovmf
boot: order=sata0;ide2;net0
smbios1: uuid=cca9442e-f3e1-4d75-b812-ebb6fcddf3b4
efidisk0: local-lvm:vm-106-disk-2,efitype=4m,pre-enrolled-keys=1,size=4M
sata0: local-lvm:vm-106-disk-1,discard=on,size=512G,ssd=1
scsihw: virtio-scsi-single
ide2: none,media=cdrom
net0: virtio=BC:24:11:88:A4:24,bridge=vmbr0,firewall=1
audio0: device=ich9-intel-hda,driver=none
rng0: source=/dev/urandom
tablet: 1
hostpci0: 0000:01:00,x-vga=1
vga: qxl
usb0: spice

I have bumped it up to 10 cores to run beefier PC titles, but for emulation and ML/AI work I’ve found 6 to be more than enough on the 12th gen i7 I’m using.
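Since the config is just `key: value` lines, it is easy to check from a script. The sketch below parses a config like the one above and summarises the settings that matter for GPU passthrough. The function names are hypothetical, and it only handles the flat part of the file, stopping at the first `[snapshot]` section.

```python
def parse_pve_config(text):
    """Parse 'key: value' lines from a Proxmox VM config into a dict."""
    config = {}
    for line in text.splitlines():
        line = line.strip()
        if line.startswith("["):
            break  # snapshot sections follow the live config; ignore them
        if not line or line.startswith("#"):
            continue
        # Split on the first colon only, since values contain colons too
        key, _, value = line.partition(":")
        config[key.strip()] = value.strip()
    return config


def passthrough_summary(config):
    """Collect the settings relevant to a GPU passthrough VM."""
    return {
        "cores": int(config.get("cores", 1)),
        "memory_mib": int(config.get("memory", 0)),
        "display": config.get("vga"),
        "hostpci": {k: v for k, v in config.items() if k.startswith("hostpci")},
    }


# A subset of the config shown above
CONFIG_SAMPLE = """\
name: steam
memory: 32768
cores: 6
bios: ovmf
net0: virtio=BC:24:11:88:A4:24,bridge=vmbr0,firewall=1
hostpci0: 0000:01:00,x-vga=1
vga: qxl
"""

if __name__ == "__main__":
    print(passthrough_summary(parse_pve_config(CONFIG_SAMPLE)))
```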

Post-Boot

Bazzite will walk you through installing all sorts of gaming utilities upon startup. That includes RetroDECK, all the emulators you could possibly want (at least while they’re available), and (in my case) SyncThing to automatically replicate my emulator game saves to my NAS.

Again, this is a good time to install qemu-guest-agent and any other packages you might need.

What you should do is to use one of the many pre-provided ujust targets to test the NVIDIA drivers (and nvidia-smi) in a terminal:

+-----------------------------------------------------------------------------------------+
| NVIDIA-SMI 560.35.03              Driver Version: 560.35.03      CUDA Version: 12.6     |
|-----------------------------------------+------------------------+----------------------+
| GPU  Name                 Persistence-M | Bus-Id          Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |           Memory-Usage | GPU-Util  Compute M. |
|                                         |                        |               MIG M. |
|=========================================+========================+======================|
|   0  NVIDIA GeForce RTX 3060        On  |   00000000:00:10.0 Off |                  N/A |
|  0%   45C    P8              5W /  170W |     164MiB /  12288MiB |      0%      Default |
|                                         |                        |                  N/A |
+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+
| Processes:                                                                              |
|  GPU   GI   CI        PID   Type   Process name                              GPU Memory |
|        ID   ID                                                               Usage      |
|=========================================================================================|
|    0   N/A  N/A      4801      G   /usr/libexec/Xorg                               4MiB |
|    0   N/A  N/A      5085    C+G   ...libexec/gnome-remote-desktop-daemon        104MiB |
+-----------------------------------------------------------------------------------------+

There’s a reason gnome-remote-desktop-daemon is running–we’ll get to that.
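If you want the same check in a script (for instance, to confirm the passthrough GPU is visible after a reboot), `nvidia-smi` has a CSV query mode that is easier to parse than the table. This is a rough sketch: the function names are mine, and the sample line is what I would expect for the card above rather than captured output.

```python
import subprocess

# Fields understood by nvidia-smi --query-gpu
QUERY = "name,driver_version,memory.used,memory.total"


def parse_gpu_csv(text):
    """Turn 'csv,noheader,nounits' output into a list of dicts, one per GPU."""
    gpus = []
    for line in text.strip().splitlines():
        name, driver, used, total = [field.strip() for field in line.split(",")]
        gpus.append({
            "name": name,
            "driver": driver,
            "memory_used_mib": int(used),
            "memory_total_mib": int(total),
        })
    return gpus


def query_gpus():
    """Run nvidia-smi and return the parsed result (raises if it is missing or fails)."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    # Illustrative line, so the parser can be tried on a machine without a GPU
    print(parse_gpu_csv("NVIDIA GeForce RTX 3060, 560.35.03, 164, 12288\n"))
```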

GNOME Setup

Bazzite already adds Steam to Startup Applications after you log in (and you’ll want to either replace that with RetroDECK or set Steam to start in Big Picture mode), but to run this fully headless there’s a bit of tinkering you need to do in Settings.

- Turn on auto-login (`System > Users`)
- Turn off screen lock (it’s currently under `Privacy and Security`)
- Open Extensions (you’ll notice that Bazzite already has Caffeine installed to turn off auto suspend), go to `Just Perfection > Behavior > Startup Status` and make sure it is set to `Desktop` so that you don’t land into overview mode upon auto-login (it should, but if it doesn’t, you now know where to force it).

Remote Desktop

Also, you absolutely need an alternative way to get at the console besides Proxmox. Partly this is because you’ll need to do a fair bit of manual tweaking on some emulator front-ends, but mostly it is because you’ll want to input Steam Link pairing codes without jumping through all the hoops involved in logging into Proxmox and getting to the console–and because Steam turns off Steam Link if you switch accounts, which is just… stupid.

So I decided to rely on GNOME’s nice new Remote Desktop support, which is actually over RDP (and with audio properly set up). This makes it trivial to access the console via my phone with a couple of taps, but there are a few kinks.

For starters, to get GNOME Remote Desktop to work reliably with auto-login (since that is what unlocks the keychain), you actually have to disable encryption for that credential. That entails installing Passwords and Keys and setting the Default Keyring password to nothing.

Yes, this causes GNOME application passwords to be stored in plaintext–but you will not be using anything that relies on the GNOME keychain.

Once you’re done, it’s a matter of snapshotting the VM and storing the image safely.

Bugs

This setup has a critical usability bug, which is that I cannot get Steam Input to work with RetroDECK–I’ve yet to track down exactly why since Steam Input works on PC games and the virtual controllers show up in SDL tests (they just don’t provide inputs), but I have gotten around it using Moonlight (which is another kettle of fish entirely).

I could try to run all of this on Windows, but… I don’t think so. The exact same setup works perfectly on the AM18, so I just need to track down what is different inside the VM.

Steam Shortcuts

I’ve since hacked my own little script to add shortcuts to Steam for the emulated games I have installed. This assumes you have a SteamGridDB API key and requires you to set that and your Steam ID as environment variables (STEAMGRIDDB_API_KEY and STEAM_ID). It is a bit rough around the edges, but it works for me:

#!/usr/bin/env python3

from logging import getLogger, basicConfig, INFO
from os import environ
from os.path import join, exists, splitext
from pathlib import Path
from shlex import quote
from shutil import copyfile
from zlib import crc32

from click import group, option, argument, secho as echo, STRING
from requests import Session
from vdf import binary_load, binary_dump, dump, parse

basicConfig(level=INFO, format='%(asctime)s %(levelname)s %(message)s')
log = getLogger(__name__)

# Settings come from the environment, with fallback defaults
STEAM_ID = environ.get("STEAM_ID", "901194859")
STEAM_PATH = environ.get("STEAM_PATH", "/home/me/.steam/steam")
STEAM_USER_DATA_PATH = join(STEAM_PATH, "userdata", STEAM_ID, "config")
STEAMGRIDDB_API_KEY = environ.get("STEAMGRIDDB_API_KEY", "dc5b9820c178614336d7659eb7b64453")
GRID_FOLDER = join(STEAM_USER_DATA_PATH, 'grid')
CACHE_PATH = join(environ.get("HOME"), ".cache", "steamgriddb")
SHORTCUTS_FILE = join(STEAM_USER_DATA_PATH, 'shortcuts.vdf')
LOCALCONFIG_FILE = join(STEAM_USER_DATA_PATH, 'localconfig.vdf')

# Make sure the artwork and cache folders exist
for f in [GRID_FOLDER, CACHE_PATH]:
    Path(f).mkdir(parents=True, exist_ok=True)


def print_table(headers, rows):
    """Print rows as a plain-text table with columns sized to their content"""
    # Building a list first keeps max() working when there are no rows
    widths = [max([len(h)] + [len(str(row[i])) for row in rows])
              for i, h in enumerate(headers)]
    row_format = " | ".join(f"{{:{w}}}" for w in widths)
    echo(row_format.format(*headers))
    echo("-+-".join('-' * w for w in widths))
    for row in rows:
        echo(row_format.format(*(str(cell) for cell in row)))


def shortcut_names():
    """Map appid -> name for every entry in shortcuts.vdf"""
    with open(SHORTCUTS_FILE, "rb") as h:
        data = binary_load(h)['shortcuts']
    # Tolerate entries written with lowercase keys
    return {str(v['appid']): v.get('AppName', v.get('appname', '(Unknown)'))
            for v in data.values()}


@group()
def tool():
    """A simple CLI utility to manage Steam shortcuts for Linux emulators"""
    pass


@tool.command()
def list_shortcuts():
    """List all existing shortcuts"""
    with open(SHORTCUTS_FILE, "rb") as h:
        data = binary_load(h)['shortcuts']
    headers = "appid AppName Exe LaunchOptions LastPlayTime".split()
    rows = [[entry.get(k, "") for k in headers] for entry in data.values()]
    print_table(headers, rows)


@tool.command()
def list_steam_input():
    """List Steam Input settings"""
    names = shortcut_names()
    with open(LOCALCONFIG_FILE, "r", encoding="utf8") as h:
        data = parse(h)
    if 'UserLocalConfigStore' not in data:
        return
    apps = data['UserLocalConfigStore']['apps']
    headers = "appid AppName OverlayAppEnable UseSteamControllerConfig SteamControllerRumble SteamControllerRumbleIntensity".split()
    rows = []
    for appid, settings in apps.items():
        settings = dict(settings, appid=appid, AppName=names.get(appid, "(Unknown)"))
        rows.append([settings.get(k, "") for k in headers])
    print_table(headers, rows)


@tool.command()
@argument('appid', type=STRING)
def disable_steam_input(appid):
    """Disable Steam Input for an appid"""
    if appid not in shortcut_names():
        log.error(f"invalid appid {appid}")
        return
    with open(LOCALCONFIG_FILE, "r", encoding="utf8") as h:
        data = parse(h)
    apps = data.setdefault('UserLocalConfigStore', {}).setdefault('apps', {})
    settings = apps.setdefault(appid, {})
    settings["OverlayAppEnable"] = "1"
    settings["UseSteamControllerConfig"] = "0"
    settings["SteamControllerRumble"] = "-1"
    settings["SteamControllerRumbleIntensity"] = "320"
    with open(LOCALCONFIG_FILE, "w", encoding="utf8") as h:
        dump(data, h)


@tool.command()
@argument('grid_id')
def fetch(grid_id):
    """Fetch media for GRID_ID from SteamGridDB"""
    fetch_images(grid_id)


def find_id(name):
    """Return the SteamGridDB ID of the first search result for name, or None"""
    headers = {'Authorization': f'Bearer {STEAMGRIDDB_API_KEY}'}
    with Session() as s:
        response = s.get(f'https://www.steamgriddb.com/api/v2/search/autocomplete/{name}',
                         headers=headers)
        log.info(f"{name}:{response.status_code}")
        if response.status_code == 200:
            data = response.json()
            if data['success'] and data['data']:
                return data['data'][0]['id']  # take first result only
    return None


def fetch_images(game_id):
    """Download any missing artwork for game_id into the local cache"""
    headers = {'Authorization': f'Bearer {STEAMGRIDDB_API_KEY}'}
    with Session() as s:
        for image_type in ['banner', 'grid', 'hero', 'logo']:
            local_path = join(CACHE_PATH, f"{game_id}.{image_type}")
            # Skip anything already cached, whatever the extension
            if any(exists(f"{local_path}{ext}") for ext in [".jpg", ".png"]):
                continue
            if image_type == 'hero':
                base_url = f'https://www.steamgriddb.com/api/v2/heroes/game/{game_id}'
            elif image_type == 'banner':
                base_url = f'https://www.steamgriddb.com/api/v2/grids/game/{game_id}?dimensions=920x430,460x215'
            else:
                base_url = f'https://www.steamgriddb.com/api/v2/{image_type}s/game/{game_id}'
            response = s.get(base_url, headers=headers)
            log.info(f"{game_id},{image_type},{response.status_code}")
            if response.status_code == 200:
                data = response.json()
                if data['success'] and data['data']:
                    image_url = data['data'][0]['url']
                    ext = splitext(image_url)[1]
                    response = s.get(image_url)
                    if response.status_code == 200:
                        with open(f"{local_path}{ext}", 'wb') as f:
                            f.write(response.content)
                        log.info(f"{image_url}->{local_path}")


@tool.command()
@option("-e", "--emulator", default="org.retrodeck.RetroDECK", help="flatpak identifier")
@option("-a", "--args", multiple=True, help="arguments")
@option("-n", "--name", default="RetroDECK", help="shortcut name")
@option("-i", "--dbid", help="SteamGridDB ID to get images from")
def create(emulator, args, name, dbid):
    """Create a new shortcut entry"""
    if not dbid:
        dbid = find_id(name)
    if dbid:
        fetch_images(dbid)
    else:
        log.warning(f"no SteamGridDB match for {name}, skipping artwork")

    if exists(SHORTCUTS_FILE):
        with open(SHORTCUTS_FILE, 'rb') as f:
            shortcuts = binary_load(f)
    else:
        shortcuts = {'shortcuts': {}}

    appid = str(crc32(name.encode('utf-8')) | 0x80000000)
    # Joined outside the f-string: nested double quotes fail before Python 3.12
    launch_args = quote(" ".join(args))
    new_entry = {
        "appid": appid,
        # Same key casing the list commands read back
        "AppName": name,
        "Exe": "flatpak",
        "StartDir": "",
        "LaunchOptions": f"run {emulator} {launch_args}",
        "IsHidden": 0,
        "AllowDesktopConfig": 1,
        "OpenVR": 0,
        "Devkit": 0,
        "DevkitGameID": "shortcuts",
        "LastPlayTime": 0,
        "tags": {}
    }
    log.debug(new_entry)
    shortcuts['shortcuts'][str(len(shortcuts['shortcuts']))] = new_entry
    log.info(f"added {appid} {name}")

    # Copy cached artwork into Steam's grid folder under the names it expects
    suffixes = {'grid': 'p', 'hero': '_hero', 'logo': '_logo', 'banner': ''}
    for image_type, suffix in suffixes.items():
        local_path = join(CACHE_PATH, f"{dbid}.{image_type}")
        for ext in [".jpg", ".png"]:
            if exists(local_path + ext):
                dest_path = join(GRID_FOLDER, f"{appid}{suffix}{ext}")
                log.debug(f"{local_path + ext}->{dest_path}")
                copyfile(local_path + ext, dest_path)

    with open(SHORTCUTS_FILE, 'wb') as f:
        binary_dump(shortcuts, f)
    log.info("shortcuts updated.")


if __name__ == '__main__':
    tool()
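The part of the script that is easiest to get wrong is the naming: the shortcut ID is derived from the shortcut name, and the artwork files in the `grid` folder are named after that ID. The standalone sketch below isolates just that scheme as the script uses it. The helper names are mine, and I am not claiming this is how Steam itself generates IDs, only that it mirrors the script above.

```python
from zlib import crc32


def shortcut_appid(name):
    """Derive the shortcut ID the same way the script does: CRC32 with the top bit set."""
    return str(crc32(name.encode("utf-8")) | 0x80000000)


def artwork_names(appid, ext=".png"):
    """File names the script writes into the grid folder for each artwork type."""
    return {
        "grid": f"{appid}p{ext}",
        "hero": f"{appid}_hero{ext}",
        "logo": f"{appid}_logo{ext}",
        "banner": f"{appid}{ext}",
    }


if __name__ == "__main__":
    appid = shortcut_appid("RetroDECK")
    for kind, filename in artwork_names(appid).items():
        print(kind, filename)
```

Because the ID depends only on the name, re-running `create` with the same name reuses the same artwork files.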

Shoehorning In an AI Sandbox

One of the side-quests I wanted to complete alongside this was to try to find a way to use ollama, PyTorch and whatnot on borg without alternating VMs.

As it turns out, Bazzite ships with distrobox (which I can use to run an Ubuntu userland with access to the GPU) and podman (which I can theoretically use to replicate my local AI app stack).

This would ideally make it trivial to have just one VM hold all the GPU-dependent services and save me the hassle of automating Proxmox to switch VMs between gaming and AI stuff.

(Again, I’ve automated that already, but it’s a bit too fiddly.)

However, trying to run an AI-intensive workload with Steam open has already hard locked the Proxmox host, so I don’t think I can actually make it work with the current NVIDIA drivers in Bazzite.

Still, I got tantalizingly close.

Ubuntu Userland

The first part was almost trivial–setting up an Ubuntu userland with access to the NVIDIA GPU and all the mainstream stuff I need to run AI workloads.

# Create an Ubuntu sandbox
❯ distrobox create ubuntu --image docker.io/library/ubuntu:24.04

# Set up the NVIDIA userland
❯ distrobox enter ubuntu
📦[me@ubuntu ~]$ curl -fSsL https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2404/x86_64/3bf863cc.pub | sudo gpg --dearmor | sudo tee /usr/share/keyrings/nvidia-drivers.gpg > /dev/null 2>&1
📦[me@ubuntu ~]$ echo 'deb [signed-by=/usr/share/keyrings/nvidia-drivers.gpg] https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2404/x86_64/ /' | sudo tee /etc/apt/sources.list.d/nvidia-drivers.list

# Install the matching NVIDIA utilities to what Bazzite currently ships
📦[me@ubuntu ~]$ sudo apt install nvidia-utils-560

# Check that the drivers are working
📦[me@ubuntu ~]$ nvidia-smi
Sat Oct 5 11:26:30 2024
+-----------------------------------------------------------------------------------------+
| NVIDIA-SMI 560.35.03              Driver Version: 560.35.03      CUDA Version: 12.6     |
|-----------------------------------------+------------------------+----------------------+
| GPU  Name                 Persistence-M | Bus-Id          Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |           Memory-Usage | GPU-Util  Compute M. |
|                                         |                        |               MIG M. |
|=========================================+========================+======================|
|   0  NVIDIA GeForce RTX 3060        On  |   00000000:00:10.0 Off |                  N/A |
|  0%   43C    P8              5W /  170W |     164MiB /  12288MiB |      0%      Default |
|                                         |                        |                  N/A |
+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+
| Processes:                                                                              |
|  GPU   GI   CI        PID   Type   Process name                              GPU Memory |
|        ID   ID                                                               Usage      |
|=========================================================================================|
|    0   N/A  N/A      4801      G   /usr/libexec/Xorg                               4MiB |
|    0   N/A  N/A      5085    C+G   ...libexec/gnome-remote-desktop-daemon        104MiB |
+-----------------------------------------------------------------------------------------+

From here on out, Python and everything else just worked.
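A quick way to confirm that "just worked" from inside the distrobox is to ask PyTorch whether it can see the GPU. This sketch assumes PyTorch has been installed in the Ubuntu userland (the steps above do not cover that), and it degrades to a plain report if it has not; the function name is hypothetical.

```python
def gpu_report():
    """Report whether PyTorch is installed and can see a CUDA device."""
    try:
        import torch  # assumption: installed separately inside the distrobox
    except ImportError:
        return {"torch_installed": False, "cuda_available": False, "device": None}
    available = bool(torch.cuda.is_available())
    return {
        "torch_installed": True,
        "cuda_available": available,
        # Only query the device name when CUDA is actually usable
        "device": torch.cuda.get_device_name(0) if available else None,
    }


if __name__ == "__main__":
    print(gpu_report())
```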

Portainer, Podman and Stacks

This is where things started to go very wrong–there’s no nvidia-docker equivalent for podman, so you have to use CDI.

Just migrating my Portainer stacks across wouldn’t work, since the options for GPU access are fundamentally different.

But I figured out how to get portainer/agent running:

# Get the RPMs to overlay onto Bazzite
❯ curl -s -L https://nvidia.github.io/libnvidia-container/stable/rpm/nvidia-container-toolkit.repo | \
    sudo tee /etc/yum.repos.d/nvidia-container-toolkit.repo
❯ rpm-ostree install nvidia-container-toolkit-base --allow-inactive

# create the CDI configuration
❯ sudo nvidia-ctk cdi generate --output=/etc/cdi/nvidia.yaml
❯ nvidia-ctk cdi list
INFO[0000] Found 3 CDI devices
nvidia.com/gpu=0
nvidia.com/gpu=GPU-cb9db783-7465-139d-900c-bc8d6b6ced7c
nvidia.com/gpu=all

# Make sure we have a podman socket
❯ sudo systemctl enable --now podman

# start the portainer agent
❯ sudo podman run -d \
    -p 9001:9001 \
    --name portainer_agent \
    --restart=always \
    --privileged \
    -v /run/podman/podman.sock:/var/run/docker.sock:Z \
    --device nvidia.com/gpu=all \
    --security-opt=label=disable \
    portainer/agent:2.21.2

# ensure system containers are restarted upon reboot
❯ sudo systemctl enable --now podman-restart

The above took a little while to figure out, and yes, Portainer was able to manage stacks and individual containers–but, again, it couldn’t set the required extra flags for podman to talk to the GPU.
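Since every GPU container depends on the CDI devices being registered, it can be worth checking for them before starting anything. The sketch below parses the output of `nvidia-ctk cdi list` as shown above; the function name is hypothetical and the sample text is copied from that listing.

```python
def cdi_devices(output):
    """Extract the CDI device names from 'nvidia-ctk cdi list' output."""
    return [
        line.strip()
        for line in output.splitlines()
        if line.strip().startswith("nvidia.com/gpu=")
    ]


# Output copied from the listing above
CDI_SAMPLE = """\
INFO[0000] Found 3 CDI devices
nvidia.com/gpu=0
nvidia.com/gpu=GPU-cb9db783-7465-139d-900c-bc8d6b6ced7c
nvidia.com/gpu=all
"""

if __name__ == "__main__":
    devices = cdi_devices(CDI_SAMPLE)
    if "nvidia.com/gpu=all" in devices:
        print("CDI is set up:", ", ".join(devices))
    else:
        print("No CDI devices found, regenerate /etc/cdi/nvidia.yaml")
```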

But I could start them manually, and they’d be manageable. Here are three examples:

# ollama
❯ podman run -d --replace \
    --name ollama \
    --hostname ollama \
    --restart=unless-stopped \
    -p 11434:11434 \
    -v /mnt/data/ollama:/root/.ollama:Z \
    --device nvidia.com/gpu=all \
    --security-opt label=disable \
    ollama/ollama:latest

# Jupyter
❯ podman run -d --replace \
    --name jupyter \
    --hostname jupyter \
    --restart=unless-stopped \
    -p 8888:8888 \
    --group-add=users \
    -v /mnt/data/jupyter:/home/jovyan:Z \
    --device nvidia.com/gpu=all \
    --security-opt label=disable \
    quay.io/jupyter/pytorch-notebook:cuda12-python-3.11

# InvokeAI (for load testing)
❯ podman run -d --replace \
    --name invokeai \
    --hostname invokeai \
    --restart=unless-stopped \
    -p 9090:9090 \
    -v /mnt/data/invokeai:/mnt/data:Z \
    -e INVOKEAI_ROOT=/mnt/data \
    -e HUGGING_FACE_HUB_TOKEN=<token> \
    --device nvidia.com/gpu=all \
    --security-opt label=disable \
    ghcr.io/invoke-ai/invokeai
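All three commands share the same skeleton, and the only GPU-specific parts are the `--device nvidia.com/gpu=all` and `--security-opt label=disable` flags. As a sketch of how that could be scripted while Portainer can't set those flags, the hypothetical helper below builds the argument list for such a container. It only assembles the command; running it is left to the caller.

```python
import shlex

# The two flags every GPU container above needs
GPU_FLAGS = ["--device", "nvidia.com/gpu=all", "--security-opt", "label=disable"]


def podman_gpu_run(name, image, ports=(), volumes=(), env=None):
    """Build a 'podman run' argument list for a detached GPU container."""
    argv = ["podman", "run", "-d", "--replace",
            "--name", name, "--hostname", name,
            "--restart=unless-stopped"]
    for port in ports:
        argv += ["-p", port]
    for volume in volumes:
        argv += ["-v", volume]
    for key, value in (env or {}).items():
        argv += ["-e", f"{key}={value}"]
    return argv + GPU_FLAGS + [image]


if __name__ == "__main__":
    cmd = podman_gpu_run(
        "ollama", "ollama/ollama:latest",
        ports=["11434:11434"],
        volumes=["/mnt/data/ollama:/root/.ollama:Z"],
    )
    print(shlex.join(cmd))
```

Returning a list rather than a string means it can be handed straight to `subprocess.run` without any shell quoting concerns.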

However, I kept running into weird permission issues, non-starting containers and the occasional hard lock, so I decided to shelve this for now.

Pitfalls

Besides stability, I’m not sold on podman at all–at least not for setting up “permanent” services. Part of it is because making sure things are automatically restarted upon reboot is fiddly (and, judging from my tests, somewhat error-prone, since a couple of containers would sometimes fail to start up for no apparent reason), and partly because CDI feels like a stopgap solution.

Conclusion

This was a fun exercise, and now I have both a usable snapshot of the stuff I want and detailed enough notes on the setup to quickly reproduce it again in the future.

However, besides workload coexistence on the GPU, this still doesn’t make a lot of sense in practice and I seem to be doomed to automate shutting down Steam or RetroDECK when I want to work–it’s just simpler to improve the scripting to alternate between VMs, which has the additional benefit of resetting the GPU state between workloads.

Well, either that or doing some kind of hardware upgrade…
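For reference, the VM-alternating approach boils down to two `qm` calls on the Proxmox host: shut one guest down cleanly, then start the other once the GPU is free. This is only a sketch of that idea, not my actual automation. VM 106 is the Steam VM from the config above, while 107 is a made-up ID standing in for the AI VM, and the function names are hypothetical.

```python
import subprocess


def switch_commands(stop_vmid, start_vmid, timeout=120):
    """Commands to hand the GPU from one VM to another, in order."""
    return [
        # Clean shutdown; qm waits up to 'timeout' seconds for the guest to stop
        ["qm", "shutdown", str(stop_vmid), "--timeout", str(timeout)],
        ["qm", "start", str(start_vmid)],
    ]


def switch(stop_vmid, start_vmid, dry_run=True):
    """Print the commands (dry run) or execute them on the Proxmox host."""
    commands = switch_commands(stop_vmid, start_vmid)
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            # check=True aborts before starting the second VM if shutdown fails
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    switch(106, 107)  # 107 is a hypothetical AI VM
```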

[1] And, also, because it’s taken me years to almost finish Breath of the Wild, given that I very much enjoy traipsing around Hyrule just to see the sights.