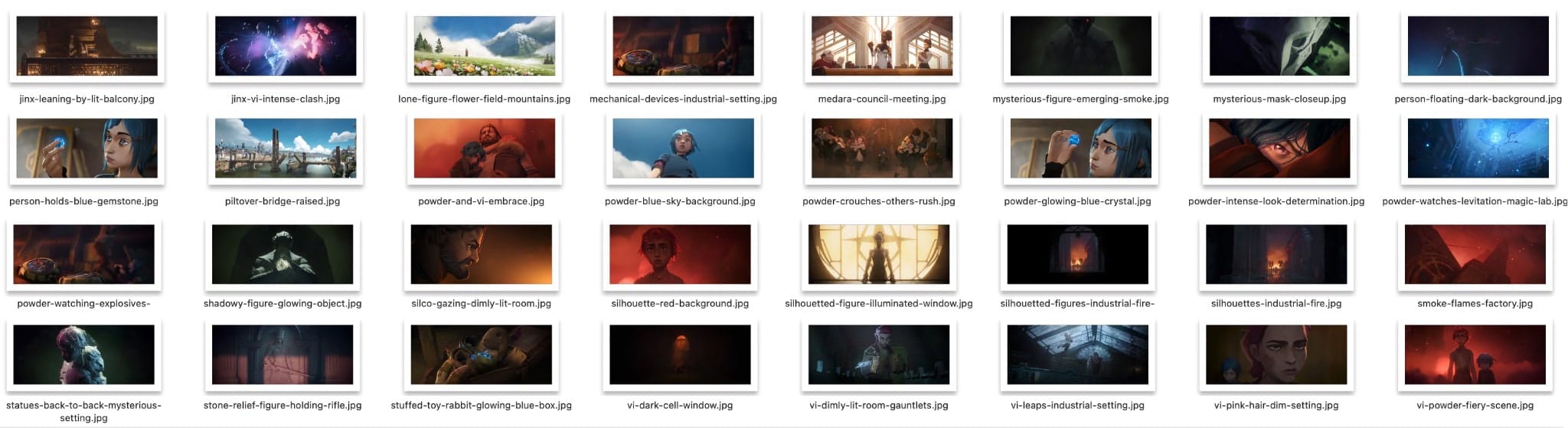

To make a long story short, Arcane ended yesterday, and during some idle browsing I came across a pretty amazing set of wallpapers that someone generated by taking 4K frames and upscaling them to 8K using 4x_BSRGAN, so I promptly downloaded them and converted them to optimized JPEGs.

But the filenames were all wonky, so I decided to whip up a small script to rename and annotate them using gpt-4o’s multimodal capabilities. And to kill not two, but three birds with one stone, I also decided to do it using aiohttp, since I wanted to understand how to submit images directly via REST (I am not a fan of the openai Python package, and will eventually need to do this in Go as well).

Results

As it turns out, and even allowing for the character descriptions I provided in the prompt, gpt-4o seems to understand the Arcane universe well enough to identify characters, locations, and even use specific terms like “hextech”. In fact, the results are pretty decent, and any deviations are usually fixed by re-classifying the image.

There were a few screenshots that triggered the content filters for no apparent reason, but all in all the thing just soldiered through.
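For what it's worth, filtered requests can be told apart from ordinary failures. Here is a minimal sketch, assuming the service reports prompt-side filtering as an error object with the code `content_filter` and completion-side filtering as a choice whose `finish_reason` is `content_filter`. The helper name `was_filtered` is hypothetical and not part of the script below:

```python
def was_filtered(response: dict) -> bool:
    """Return True if a chat completions response looks like a content-filter hit.

    Assumption: filtered prompts come back as {"error": {"code": "content_filter", ...}}
    and filtered completions as a choice with finish_reason == "content_filter".
    """
    # Prompt-side filtering: the whole request is rejected with an error object.
    error = response.get("error") or {}
    if error.get("code") == "content_filter":
        return True
    # Completion-side filtering: the answer is cut short and flagged per choice.
    return any(
        choice.get("finish_reason") == "content_filter"
        for choice in response.get("choices", [])
    )
```

Something like this would make it easy to log the filtered files separately and retry them later instead of just printing the raw response.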

Code and Outputs

Here’s my somewhat hacky first pass:

    from base64 import b64encode
    from aiohttp import ClientSession
    from asyncio import run, sleep
    from PIL import Image
    from io import BytesIO
    from json import loads
    from os import environ, rename, walk
    from subprocess import run as subprocess_run, CalledProcessError
    from os.path import join, dirname
    from sys import argv

    AZURE_OPENAI_KEY = environ.get("AZURE_OPENAI_KEY")
    AZURE_OPENAI_ENDPOINT = environ.get("AZURE_OPENAI_ENDPOINT")
    AZURE_OPENAI_MODEL = environ.get("AZURE_OPENAI_MODEL")
    AZURE_OPENAI_VERSION = environ.get("AZURE_OPENAI_VERSION")
    THUMBNAIL_WIDTH = int(environ.get("THUMBNAIL_WIDTH", 800))
    THUMBNAIL_HEIGHT = int(environ.get("THUMBNAIL_HEIGHT", 600))

    async def get_thumbnail(filename: str, maxwidth: int, maxheight: int) -> str:
        """Get thumbnail for image"""
        with open(filename, "rb") as f:
            image = Image.open(f)
            # thumbnail() resizes in place and preserves the aspect ratio
            image.thumbnail((maxwidth, maxheight))
            buffer = BytesIO()
            image.save(buffer, "JPEG")
            # return base64 text, ready to embed in a data: URL
            return b64encode(buffer.getvalue()).decode("utf-8")

    async def classify(thumbnail_data: str) -> dict:
        """Create request for Azure OpenAI API"""
        prompt = """
        You are an expert on League of Legends and the TV Series Arcane.
        You will be given a screenshot from the TV Series Arcane and you have to provide a descriptive caption.
        The caption should identify any characters by name, the setting, and action in the image.
        Remember that:
        - Jinx has blue hair and tattoos on her arms, and typically holds a weapon.
        - Powder is a young girl with blue hair.
        - Vi has pink hair and a tattoo on her face, and typically wears a decorated jacket and mechanical gauntlets.
        - Jayce has brown hair, a white jacket and sometimes a beard.
        - Caitlyn has dark blue hair, and typically carries a rifle.
        - Viktor has long hair.
        - Medara has dark skin, hair in a bun and golden makeup.
        - Silco has a scar on his face and an eye with a different color.
        - Vander has a beard and is built like a tank.
        - Ekko has dark skin and white hair, and sometimes wears a hood and rides a hoverboard.
        DO NOT use the words "Arcane" or "League of Legends" in your caption or tags.
        Make the caption as short as possible.
        Suggest a filename for the image based on your caption.
        Suggest tags for the image based on your caption and any other relevant information.
        Include character names, locations, and actions in your caption and filename.
        Use characters' names, locations, and actions as tags.
        Do not use Markdown code blocks or any other formatting.
        Use the following JSON schema to provide your response:
        {
            "caption": "Your caption here.",
            "filename": "Your suggested filename here, without the file extension. Use dashes instead of spaces or underscores.",
            "tags": ["tag1", "tag2", "tag3", "tag4", "tag5"]
        }
        """
        url = f"https://{AZURE_OPENAI_ENDPOINT}/openai/deployments/{AZURE_OPENAI_MODEL}/chat/completions?api-version={AZURE_OPENAI_VERSION}"
        headers = {"api-key": AZURE_OPENAI_KEY, "content-type": "application/json"}
        async with ClientSession() as session:
            async with session.post(
                url,
                json={
                    "messages": [
                        {
                            "role": "system",
                            "content": prompt,
                        },
                        {
                            "role": "user",
                            "content": [
                                {
                                    "type": "text",
                                    "text": "What is happening in this image?",
                                },
                                {
                                    "type": "image_url",
                                    "image_url": {
                                        "url": f"data:image/jpeg;base64,{thumbnail_data}"
                                    },
                                },
                            ],
                        },
                    ],
                    "response_format": {"type": "json_object"},
                },
                headers=headers,
            ) as response:
                return await response.json()

    def modify_file(filename: str, data: dict) -> None:
        """Clear and inject metadata into file, renaming it after"""
        # first pass: strip all existing metadata
        cmd = ["exiftool", "-all=", "-overwrite_original", filename]
        try:
            subprocess_run(cmd, check=True)
        except CalledProcessError as e:
            print(f"An error occurred: {e}")
        # second pass: write the caption and one keyword per tag
        cmd = ["exiftool", "-overwrite_original", f'-ImageDescription={data["caption"]}']
        for tag in data["tags"]:
            cmd.append(f"-Keywords+={tag}")
        cmd.append(filename)
        try:
            subprocess_run(cmd, check=True)
        except CalledProcessError as e:
            print(f"An error occurred: {e}")
        # keep the original extension and directory, swap in the suggested name
        ext = filename.split(".")[-1]
        new_filename = f"{data['filename']}.{ext}"
        print(f"Renaming {filename} to {new_filename}: {data['caption']} {data['tags']}")
        rename(filename, join(dirname(filename), new_filename))

    async def main(argv):
        for path in argv:
            # find all jpg files in path
            for root, _, files in walk(path):
                for filename in files:
                    if filename.lower().endswith(".jpg"):
                        full_path = join(root, filename)
                        thumbnail_data = await get_thumbnail(
                            full_path, THUMBNAIL_WIDTH, THUMBNAIL_HEIGHT
                        )
                        response = await classify(thumbnail_data)
                        try:
                            data = loads(response["choices"][0]["message"]["content"])
                        except Exception as e:
                            print(response)
                            continue
                        modify_file(full_path, data)
                        await sleep(1)

    if __name__ == "__main__":
        run(main(["/Users/rcarmo/Pictures/test"]))
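One thing this first pass does not guard against is two images getting the same suggested filename: on macOS and Linux, os.rename silently replaces an existing file, so a collision would lose a wallpaper. A minimal sketch of a guard follows. The helper name `unique_target` and the numeric-suffix scheme are assumptions for illustration, not something the script above does:

```python
from pathlib import Path


def unique_target(directory: str, stem: str, ext: str) -> Path:
    """Return a path inside `directory` that does not exist yet.

    Hypothetical helper: tries stem.ext first, then stem-2.ext, stem-3.ext, ...
    """
    candidate = Path(directory) / f"{stem}.{ext}"
    counter = 2
    while candidate.exists():
        candidate = Path(directory) / f"{stem}-{counter}.{ext}"
        counter += 1
    return candidate
```

In modify_file, the final rename could then target `unique_target(dirname(filename), data["filename"], ext)` instead of the joined path.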

The raw output (somewhat redacted for legibility) gives an idea of what kind of captions it generated:

    Renaming <X>.jpg to tower-blue-light-night.jpg: A tall tower emitting a blue light beam at night. ['tower', 'blue light', 'night', 'beam', 'cityscape']
    Renaming <X>.jpg to wooden-sign-boom.jpg: A wooden sign with 'Boom' painted in blue. ['sign', 'boom', 'wooden', 'blue', 'painted']
    Renaming <X>.jpg to jinx-sits-luxurious-chair.jpg: Jinx sits confidently in a luxurious chair, holding a weapon. ['Jinx', 'luxurious chair', 'weapon', 'relaxed pose', 'interior']
    Renaming <X>.jpg to silco-overlooks-undercity.jpg: Silco overlooking the Undercity from a high vantage point. ['Silco', 'Undercity', 'vantage point', 'overlook', 'scene']
    Renaming <X>.jpg to ekko-room-graffiti-mechanical-object.jpg: Ekko in a room with graffiti and mechanical object. ['Ekko', 'room', 'graffiti', 'mechanical', 'object']
    Renaming <X>.jpg to jinx-lair-candles.jpg: Jinx sits in her lair with her feet on a pink box, surrounded by candles. ['Jinx', 'lair', 'candles', 'box', 'neon lights']
    Renaming <X>.jpg to character-peeking-through-hole.jpg: Character peeking through a hole in the wall. ['character', 'hole', 'wall', 'peeking']
    Renaming <X>.jpg to jinx-raising-hand-with-glow.jpg: Jinx raising her hand with glowing lights in the air. ['Jinx', 'action', 'glow', 'lights', 'motion']
    Renaming <X>.jpg to industrial-landscape-of-zaun.jpg: The industrial landscape of Zaun with pipes and overgrown vegetation. ['Zaun', 'industrial', 'landscape', 'pipes', 'vegetation']
    Renaming <X>.jpg to jinx-walking-bridge-red-moon.jpg: Jinx walks on a bridge under a red moon, carrying her weapon. ['Jinx', 'bridge', 'red moon', 'walking', 'weapon']
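The suggested filenames above are all well-behaved, but they come straight from the model, so it may be worth normalizing them before they reach rename. A minimal sketch, assuming lowercase ASCII names with dashes are acceptable. The helper name `safe_stem` and its fallback value are hypothetical:

```python
import re


def safe_stem(suggested: str, fallback: str = "untitled") -> str:
    """Turn a model-suggested filename into a lowercase, dash-separated stem."""
    stem = suggested.strip().lower()
    # drop an image extension in case the model added one despite the prompt
    stem = re.sub(r"\.(jpe?g|png)$", "", stem)
    # collapse anything that is not a letter or digit (spaces, slashes, dots) into dashes
    stem = re.sub(r"[^a-z0-9]+", "-", stem).strip("-")
    return stem or fallback
```

For example, `safe_stem("Jinx Lair/Candles.jpg")` yields `jinx-lair-candles`, and a suggestion made up only of punctuation falls back to `untitled`. Note that this also discards any non-ASCII characters.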

Conclusion

So, was this amazingly useful? Well, not really, but it’s a decent start for the actual image classification I want to do in RSS feeds.

Was it reliable? Well, mostly. I had to re-classify maybe 25% of the files once or twice to deal with some glaring errors, but it eventually got things right. The nice thing about using gpt-4o is that Arcane was clearly part of its training data, since it added enough flavor to the captions to make them actually useful (and, of course, I can now search for them through Finder metadata).

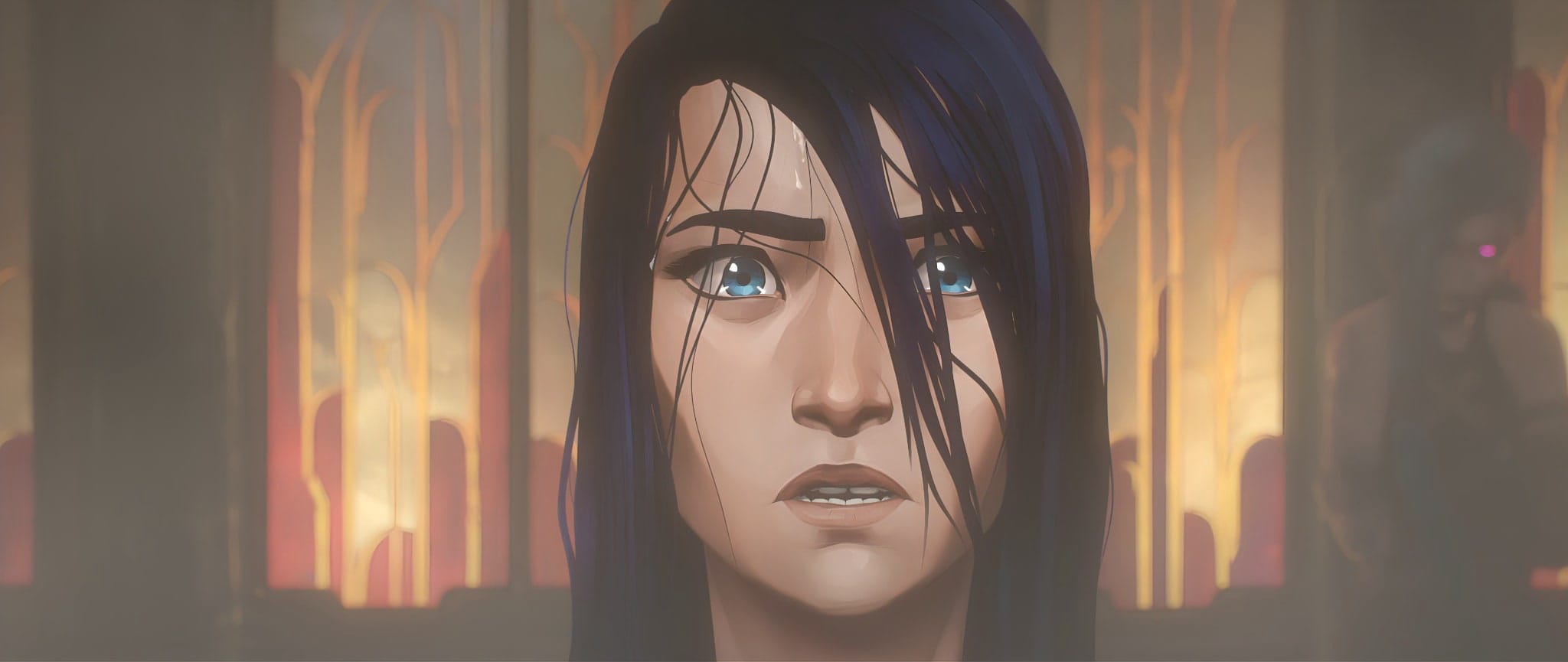

And there were one or two pretty amazing captions, like it consistently identifying Jinx lurking in the background in this one (not just once, but twice, since I ran it again without noticing it myself):

Not bad for an hour of hacking around, I’d say.

Update, a few days later

I was curious as to how I could do the screen captures myself, so I went and figured out how to get quality snapshots out of HDR footage. The simplest way is to just use mpv and tweak the config so that the , and . keys let you seek frame by frame:

    ❯ cat .config/mpv/mpv.conf
    hr-seek=yes
    screenshot-format=png

    ❯ cat .config/mpv/input.conf
    RIGHT seek 1
    LEFT seek -1

Then, in case you need to trim black borders:

❯ magick mogrify -fuzz 1% -bordercolor black -border 1 -trim +repage -format png *.png

As to upscaling, I simply resorted to Real-ESRGAN:

❯ find ~/Pictures/raw/*.png | xargs -I{} ./realesrgan-ncnn-vulkan -s 2 -i {} -o {}
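Note that the command above passes the same path to -i and -o, so each upscaled image replaces its source. A minimal sketch of building the same invocations with a separate output directory follows. The helper name `upscale_commands` and the directory layout are assumptions, and only the -s, -i and -o flags from the command above are used:

```python
from pathlib import Path


def upscale_commands(src_dir, dst_dir, binary="./realesrgan-ncnn-vulkan", scale=2):
    """Build one command line per PNG in src_dir, writing results into dst_dir.

    Hypothetical helper: it only builds argument lists and does not run anything.
    """
    src, dst = Path(src_dir), Path(dst_dir)
    return [
        [binary, "-s", str(scale), "-i", str(png), "-o", str(dst / png.name)]
        for png in sorted(src.glob("*.png"))
    ]
```

Each resulting list can be handed to subprocess.run(cmd, check=True) once the output directory exists.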

This yielded some pretty good results, and I was able to generate a few more wallpapers from similar material.