I’ve been a Mathematica fan on and off throughout the years, and this week’s announcement of version 11 made me a little wistful, so I felt the need to kick the tires a bit on my Raspberry Pi (which can run a free license of version 10.3). As it would happen, it does in fact allow me to have partial access to most of the new features (yeah, including the Pokémon database…), so that’s been fun.

But after playing with it for a while under Raspbian, I realized that it also supports remote Mathematica kernels (a feature I used infrequently, but with great results many years ago), so I started wondering if I could run it alongside Spark and Jupyter on my little home cluster, which sports 5 Pi2 boards and a total of 20 CPU cores.

However, my cluster runs Ubuntu 16.04 (Xenial), and I’m not going to throw away vastly improved packages and a working setup to return to Raspbian (not to mention the work of having to reflash all the SD cards again).

So the stage was set for another of my little challenges – figuring out how to get the Raspbian binaries to run on Ubuntu, and then how to get Mathematica to run parallel computations on my cluster.

Installing Mathematica on Ubuntu 16.04 armhf

As it turns out, everything was fairly easy to figure out. Assuming you’re running Ubuntu on your Pi already via one of the official Ubuntu images, to get things started you want to grab the latest wolfram-engine package from the Raspberry Foundation’s repository:

wget http://archive.raspberrypi.org/debian/pool/main/w/wolfram-engine/wolfram-engine_10.3.1+2016012407_armhf.deb

Then, since libffi5 no longer exists in Ubuntu (it’s been replaced with libffi6), we need to get an equivalent armhf package from the Debian repositories:

wget http://ftp.us.debian.org/debian/pool/main/libf/libffi/libffi5_3.0.10-3+b1_armhf.deb

Then we can install the dependencies that are directly replaceable with standard Ubuntu packages:

sudo apt-get install libxmu6 libxt6 libice6 libsm6 libxrandr2 libxfixes3\

libxcursor1 libpango1.0-0 libhunspell-1.3-0 libcairo2\

libportaudio2 libjack-jackd2-0 libsamplerate0

…and install the .deb files we got:

sudo dpkg -i libffi5_3.0.10-3+b1_armhf.deb

sudo dpkg -i wolfram-engine_10.3.1+2016012407_armhf.deb

And presto, we have Mathematica installed in /opt/Wolfram/WolframEngine:

$ /opt/Wolfram/WolframEngine/10.3/Executables/math

Wolfram Language (Raspberry Pi Pilot Release)

Copyright 1988-2016 Wolfram Research

Information & help: wolfram.com/raspi

Mathematica cannot find a valid password.

For automatic Web Activation enter your activation key

(enter return to skip Web Activation):

^C

Getting it to Run Consistently

As far as I could tell, in order to validate that it’s running on the Raspberry Pi Mathematica requires access to the Pi’s hardware (namely /dev/fb0 and /dev/vchiq), and the best way to do that is make sure the user you’re running it under is a member of the video group.

The framebuffer device already has the right permissions, but /dev/vchiq doesn’t:

$ ls -al /dev/fb0

crw-rw---- 1 root video 29, 0 Feb 11 16:28 /dev/fb0

$ ls -al /dev/vchiq

crw------- 1 root root 245, 0 Feb 11 16:28 /dev/vchiq

So let’s make things right for a quick test:

sudo chown root:video /dev/vchiq

sudo chmod g+r+w /dev/vchiq

sudo usermod -G video username

And now, it works – temporarily:

$ /opt/Wolfram/WolframEngine/10.3/Executables/math

Wolfram Language (Raspberry Pi Pilot Release)

Copyright 1988-2016 Wolfram Research

Information & help: wolfram.com/raspi

In[1]:=

But once you reboot, /dev/vchiq will revert to its default permissions.

So we need a udev rule to make the change permanent:

echo 'ACTION=="add", KERNEL=="vchiq", GROUP="video"' | sudo tee /etc/udev/rules.d/91-mathematica.rules

The Mathematics of Clustering

So how do we run Mathematica on a Raspberry Pi cluster?

Well, Mathematica understands about RemoteKernels, so this is fairly easy to do – although a bit fiddly compared to the Lightweight Grid Manager that I played around with some time ago.

Assuming you’ve already set up passwordless SSH between nodes (which I won’t go into here), the first thing you need is to define a function to generate a remote kernel configuration (in essence a command line and a kernel count):

Needs["SubKernels`RemoteKernels`"]

Parallel`Settings`$MathLinkTimeout = 100;

ssh = "export LD_LIBRARY_PATH=;ssh"; (* avoid using Wolfram's bundled libraries *)

kernels = 4; (* for each node *)

math = "/opt/Wolfram/WolframEngine/10.3/Executables/math" <> " -wstp -linkmode Connect `4` -linkname `2` -subkernel -noinit > /dev/null 2>&1 &";

configureKernel[host_] :=

SubKernels`RemoteKernels`RemoteMachine[host,

ssh <> " " <> "ubuntu" <> "@" <> host <> " \"" <> math <> "\"", kernels];

With that done, all you need to do is to actually launch the kernels:

In[2]:= LaunchKernels[Map[configureKernel, {"node1", "node2", "node3", "node4"}]]

Out[2]= {"KernelObject"[1, "node1"], "KernelObject"[2, "node1"],

"KernelObject"[3, "node1"], "KernelObject"[4, "node1"],

"KernelObject"[5, "node2"], "KernelObject"[6, "node2"],

"KernelObject"[7, "node2"], "KernelObject"[8, "node2"],

"KernelObject"[9, "node3"], "KernelObject"[10, "node3"],

"KernelObject"[11, "node3"], "KernelObject"[12, "node3"],

"KernelObject"[13, "node4"], "KernelObject"[14, "node4"],

"KernelObject"[15, "node4"], "KernelObject"[16, "node4"]}

You should keep in mind that remote kernels are higher-priority by default for parallelizing operations but not for regular computations. To use them indiscriminately (if you really want to, since it might not be that efficient) you need to add them to the $ConfiguredKernels variable:

(* Don't do this indiscriminately *)

$ConfiguredKernels = Join[$ConfiguredKernels,

LaunchKernels[Map[configureKernel, {"node1", "node2", "node3", "node4"}]]];

So, How Fast Is It?

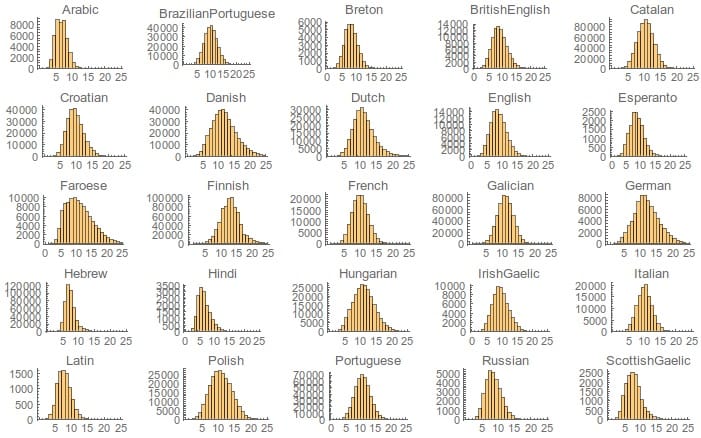

For parallelizable workloads, it can be surprisingly quick. On my Raspberry Pi 2 cluster, running the front-end on the master node via X11 and computing word frequency histograms of 25 languages on the four worker nodes is ten times faster in parallel mode.

In[3]:= languages = DictionaryLookup[All]; Length[languages]

Out[3]= 27

In[4]:= Timing[ (* local kernel *)

hist = Table[

Histogram[StringLength /@ DictionaryLookup[{l, All}], {1, 25, 1},

PlotLabel -> l, PlotRange -> {{0, 25}, All},

PerformanceGoal -> "Speed"], {l, languages}];]

Out[4]= {72.324, Null]

Here’s the same run atop those 16 kernels we created above, and without adding them to $ConfiguredKernels:

In[5]:= Timing[ (* 16 remote kernels *)

hist = ParallelTable[

Histogram[StringLength /@ DictionaryLookup[{l, All}], {1, 25, 1},

PlotLabel -> l, PlotRange -> {{0, 25}, All},

PerformanceGoal -> "Speed"], {l, languages}];]

Out[5]= {7.544, Null}

Seven and a half seconds, not bad. Around twice the time the front-end took to render this on the GUI:

In[6]:= GraphicsGrid[Partition[hist, 5], ImageSize -> 700]

Out[6]=

Conclusion

You’re probably asking yourself if this is actually useful in real life, and I’d have to say that using Mathematica in this way, without a really nice (i.e., native Mac) front-end and with the slight slowness that the Raspberry Pi always has, leaves something to be desired. Even the quad-core Pi 2 boards I’m using right now (which I haven’t overclocked past 900MHz for stability) have occasional trouble rendering the UI, and although my little cluster runs Spark and plenty of other things without a hitch, they tend to be far less demanding in nature.

But for educational purposes, this is an amazing setup, and I’m pretty sure we’ll see other people doing the same (hopefully without unleashing Wolfram’s wrath – remember they provided a special license to the Raspberry Pi Foundation out of goodwill, and we shouldn’t abuse that).

As to myself, I will be using this setup (and Wolfram’s web-based service) for playing around with their new machine learning features over my summer vacation. I fervently hope the remote kernel support isn’t removed in the next Raspberry Pi edition, because it’s such an amazingly neat thing to finally be able to run Mathematica across 20 kernels – a dream I never believed possible when I bought this book 25 years ago, way back in 1991.

Kind of amazing to think that a single of these boards is more powerful than the VAX we ran our whole class on.