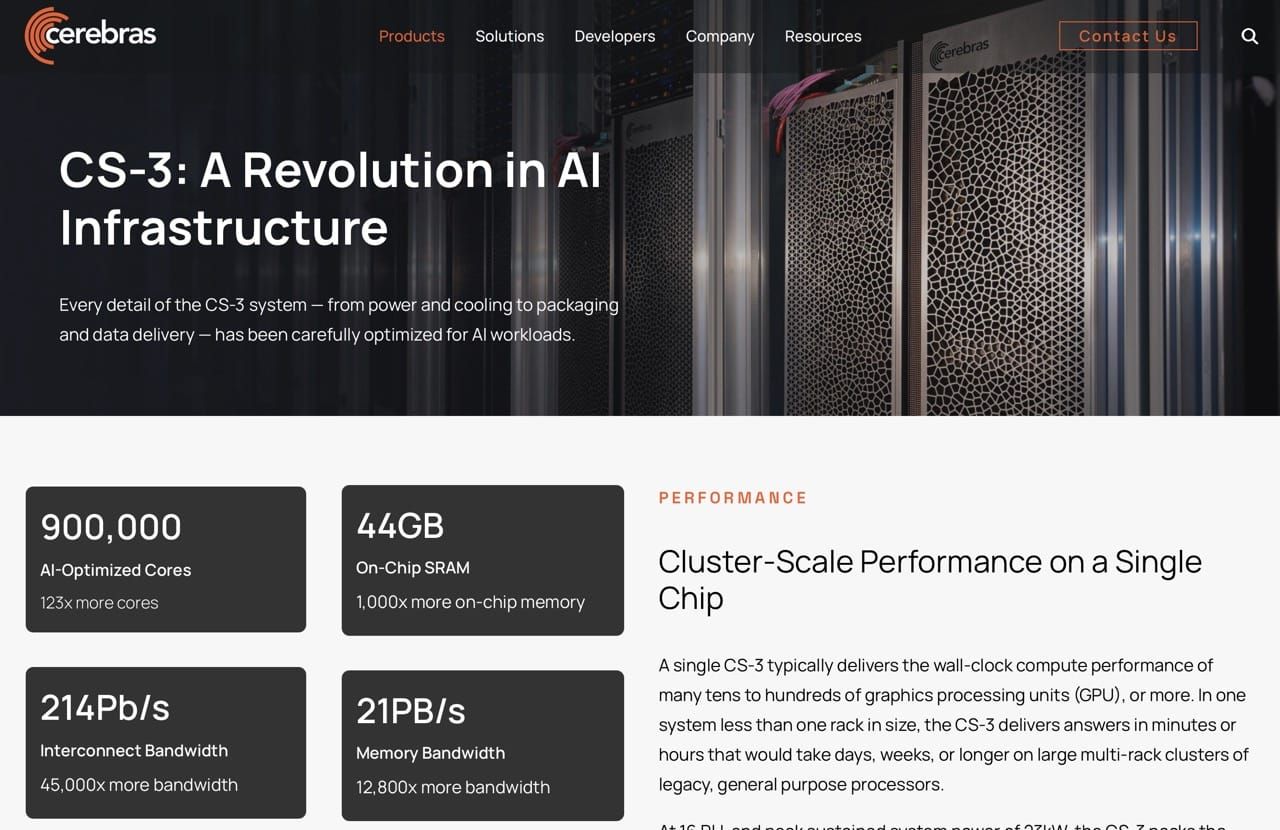

Cerebras’ CS-3 aims to cram cluster-level AI performance into a dorm-sized fridge. It does this by building on a single wafer-scale chip that carries its own on-chip memory and interconnect, which makes it a self-contained AI supercomputer that makes NVIDIA GPUs look like toys (especially considering that you can “stack”, or interconnect, multiple wafers).

In practice, this means it can run inference with almost negligible interconnect overhead, and that still holds if you split a model’s layers across wafers, which is a neat trick. It is also the kind of paradigm shift I had in mind in my predictions for the year, although I wasn’t expecting it to come from the hardware side.

But I’m pretty sure it won’t be the only one.