Like I wrote a few weeks back:

> like this past couple of weeks’ spate of news around llama.cpp proves, there is a lot of low hanging fruit where it regards optimizing running the models, and at multiple levels

And, apparently, I was right. And I’m likely also right on tailored models and the value proposition of running them on-premises, or, at the very least, without needing to rely on a gigantic corporation.

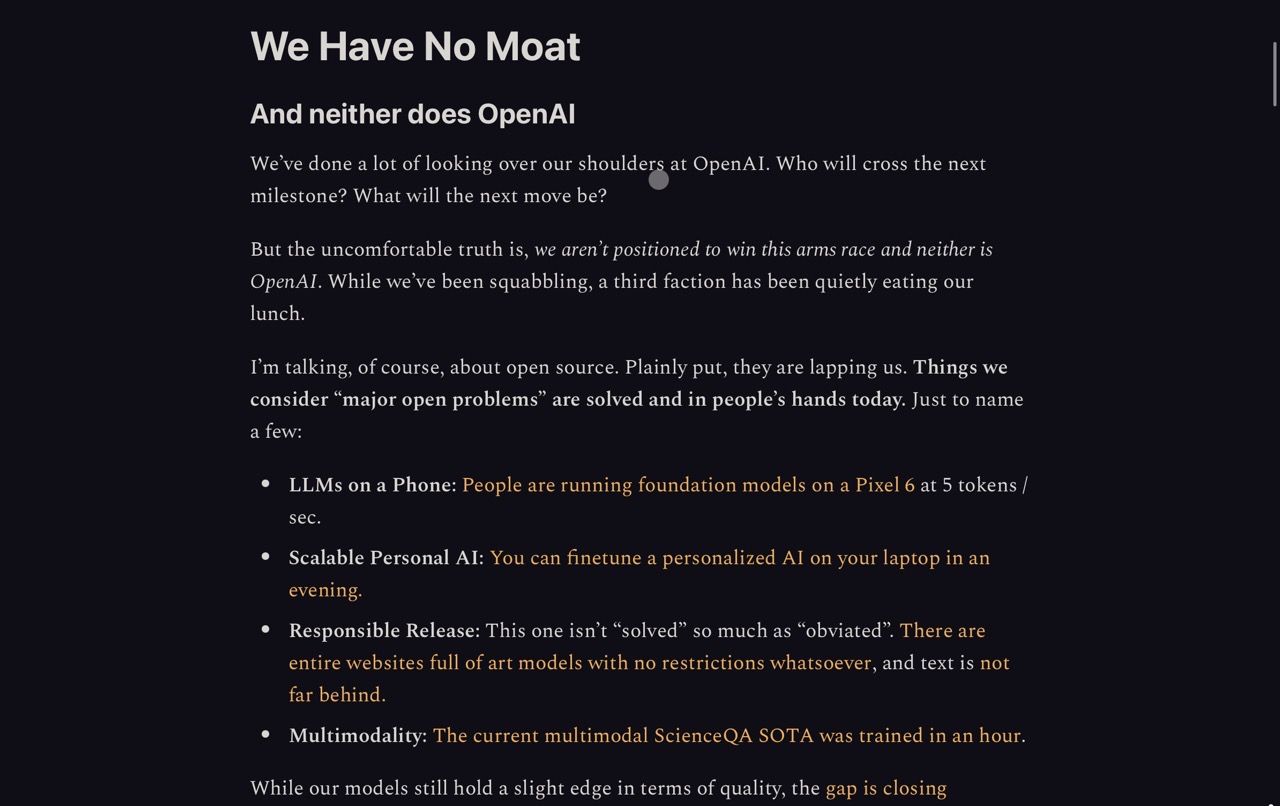

Whether this is really a leaked Google memo or not (and I suspect it is), I’m positive about two things: the genie has escaped the bottle, and nobody has a moat, yes.

But, also, nobody has that big of a treasure inside their castle either (the industry keeps trying to create walled gardens of various kinds…).