Update: I’ve since moved from VirtualBox to Parallels, and updated this accordingly. Also, vagrant-lxc has now been upgraded to 0.4.0, which means there are some (very minor) differences.

After fooling around with Vagrant and LXC for a few days (thanks to Fabio Rehm’s awesome plug-in), I decided to pull everything together into a working, reproducible setup that allows me to develop and test on four different Linux distributions, as well as prepare Puppet scripts for production deployments – and all without the hassle of running multiple VirtualBox instances on my Mac.

If you’re running Linux natively, you can skip ahead to the last few sections, since most of this is about setting up a suitable Linux VM to host all the containers on the Mac.

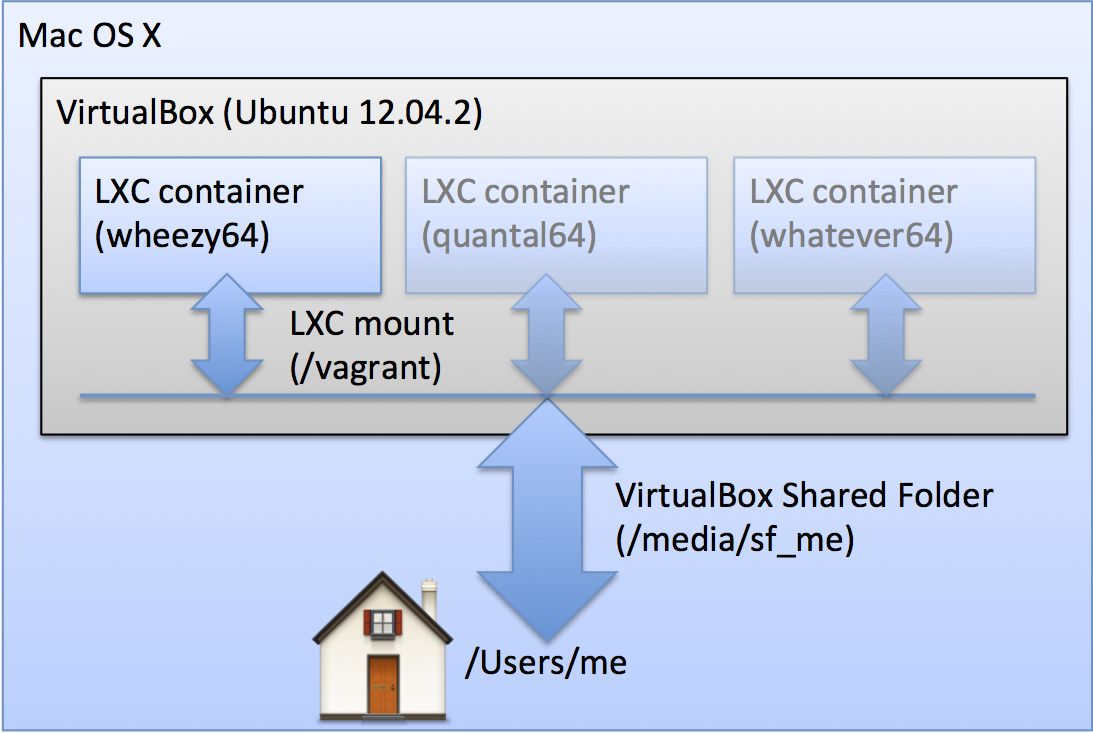

By using LXC inside one “host” VM, this setup uses far fewer resources – and with a few tweaks, it also lets me access my Mac’s file system from both inside the parent VM and from the Vagrant environments running as containers inside that VM.

In a nutshell, the advantages are:

- Run everything on a single VM – as many different Linux distros as you want

- Run multiple machines simultaneously with nearly zero overhead

- Significantly less clutter on your hard disk (boxes stay inside the VM)

- No need to install Vagrant directly on your Mac

- Your Mac’s filesystem is transparently mounted inside each environment with zero hassle

Assuming you have VirtualBox or Parallels set up already, here’s how to go about reproducing this:

Set up a suitable “parasite” host

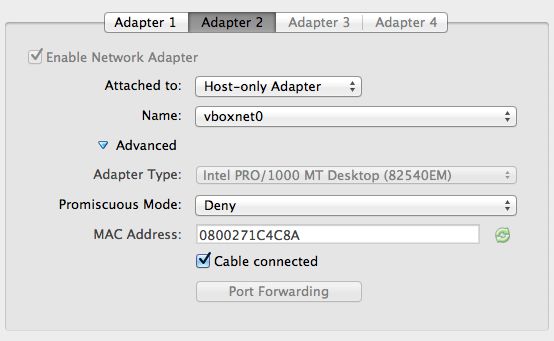

The first step is to set up an Ubuntu 64-bit VM with an extra host-only network interface. Here’s the relevant screen for VirtualBox:

This uses VirtualBox’s built-in DHCP server and will be the interface through which you’ll connect to the VM.

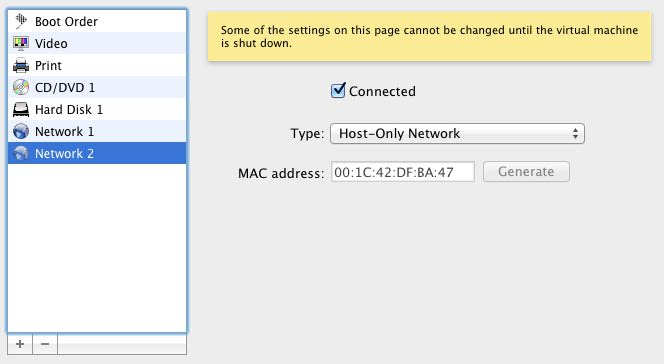

And here’s the equivalent in Parallels:

The reason I prefer setting up a second interface is that I’d rather use this than fool around with port mapping in the default interface (which I use to NAT out of my laptop), and it mirrors around 99% of my production setups (i.e., a dedicated network interface for management).

Now grab the Ubuntu MinimalCD image and do a bare minimum install, setting the host name to something like containers and skipping everything else (i.e., choose only OpenSSH Server near the end).

(For the purposes of this HOWTO, I’m going to assume you created a local user called… user – replace as applicable)

I recommend that you don’t set up LVM (it’s somewhat pointless in a VM, really), don’t mess with the defaults, and go with 13.04 or above (I started out using this with 12.04 LTS and had a few issues with 13.04, but those seem to have been mostly fixed in the latest updates).

Required Packages

Once you’re done installing, it’s package setup time:

sudo apt-get update

sudo apt-get dist-upgrade

# grab the basics we need to get stuff done

sudo apt-get install vim tmux htop ufw denyhosts build-essential

# grab vagrant-lxc dependencies

sudo apt-get install lxc redir

# the easy way to ssh in

sudo apt-get install avahi-daemon

Once that’s done, install the VirtualBox/Parallels guest software in the usual way (i.e., use the relevant menu option to mount the CD image, run the installer as root, reboot the VM, etc.)

Networking tweaks

Now for a few networking changes that make it simpler (and faster) to access your new VM.

First off, disable DNS reverse lookups in sshd by adding the following line to /etc/ssh/sshd_config (they’re pointless in a local VM and cause unnecessary connection delay):

UseDNS=no
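If you’d rather script that change than edit the file by hand, here is a minimal sketch. The function name disable_sshd_dns is made up for illustration, and it writes the whitespace-separated form (UseDNS no) that the sshd_config man page documents. It replaces an existing uncommented UseDNS line, or appends one if there is none:

```shell
# Ensure an sshd_config-style file contains "UseDNS no".
# $1 is the path to the file; a .bak copy is left behind when a line is replaced.
disable_sshd_dns() {
  conf="$1"
  if grep -q '^[[:space:]]*UseDNS' "$conf"; then
    # An uncommented UseDNS line exists: rewrite it in place.
    sed -i.bak 's/^[[:space:]]*UseDNS.*/UseDNS no/' "$conf"
  else
    # No active setting (commented-out lines are ignored): append one.
    printf 'UseDNS no\n' >> "$conf"
  fi
}
```

Run it from a root shell as disable_sshd_dns /etc/ssh/sshd_config, then restart the daemon with sudo service ssh restart so the change takes effect.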

Then set up eth1 by editing /etc/network/interfaces to look like so:

auto lo

iface lo inet loopback

auto eth0

iface eth0 inet dhcp

auto eth1

iface eth1 inet dhcp

The Easy Way In

Now we’ll set up avahi so that your VM announces its SSH service to your Mac using Bonjour – to do that, create /etc/avahi/services/ssh.service and toss in this bit of XML:

<?xml version="1.0" standalone='no'?><!--*-nxml-*-->

<!DOCTYPE service-group SYSTEM "avahi-service.dtd">

<service-group>

<name replace-wildcards="yes">%h</name>

<service>

<type>_ssh._tcp</type>

<port>22</port>

</service>

</service-group>

And, if you’re planning on doing web development, I also suggest setting up /etc/avahi/services/http.service with something like:

<?xml version="1.0" standalone='no'?><!--*-nxml-*-->

<!DOCTYPE service-group SYSTEM "avahi-service.dtd">

<service-group>

<name replace-wildcards="yes">%h</name>

<service>

<type>_http._tcp</type>

<port>80</port>

</service>

<service>

<type>_http._tcp</type>

<port>8080</port>

</service>

</service-group>

To get avahi to start in a headless machine, you need to disable dbus support in /etc/avahi/avahi-daemon.conf – which, incidentally, is also the right place to ensure Bonjour advertisements only go out via eth1.

So you need to add these two lines to that file:

allow-interfaces=eth1

enable-dbus=no
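Both options belong in the [server] section of that file. As a rough sketch (the helper name add_server_options is hypothetical), this prints a copy of the config with the two lines added right after the [server] header, so you can review the result before replacing the original. It does not check whether either option is already set further down, so look for duplicates:

```shell
# Print a copy of an avahi-daemon.conf-style file with the two options
# inserted immediately after the [server] section header.
# $1 is the path to the existing config; output goes to stdout.
add_server_options() {
  awk '
    { print }
    /^\[server\]/ {
      print "allow-interfaces=eth1"
      print "enable-dbus=no"
    }
  ' "$1"
}
```

For example, add_server_options /etc/avahi/avahi-daemon.conf > /tmp/avahi-daemon.conf, check the output, then copy it back into place with sudo.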

Now you’re ready to enable the network interface:

sudo ifup eth1

sudo service avahi-daemon restart

If you use ufw, you must open all traffic to lxcbr0:

sudo ufw allow in on lxcbr0

sudo ufw allow out on lxcbr0

…and change DEFAULT_FORWARD_POLICY to "ACCEPT" in /etc/default/ufw - otherwise your host system won’t be able to talk to your containers, and they won’t be able to reach the outside.
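The policy change can be scripted as well. This is only a sketch, with a made-up function name, and it assumes the file already contains a DEFAULT_FORWARD_POLICY= line (as the stock Ubuntu file does):

```shell
# Set DEFAULT_FORWARD_POLICY to "ACCEPT" in a ufw defaults file.
# $1 is the path to the file; the previous version is kept as <file>.bak.
accept_forwarding() {
  sed -i.bak 's/^DEFAULT_FORWARD_POLICY=.*/DEFAULT_FORWARD_POLICY="ACCEPT"/' "$1"
}
```

Run it as root against /etc/default/ufw, then apply the new policy with sudo ufw reload.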

The First Neat Bit

You now have a nice, clean way to log in to the machine – you can always connect via ssh user@containers.local, regardless of which address your virtualizer’s DHCP server grants the VM, and in case you ever have to put the VM on a LAN to work from another machine, you can bridge eth1 and things will just work™.

Housekeeping

If you’re going to be using the VM for development alone, it’s rather convenient to set up password-less sudo by tweaking /etc/sudoers to read:

%sudo ALL=(ALL:ALL) NOPASSWD: ALL

Also, since we’re going to rely on VirtualBox’s shared folders, we need to change the vboxsf group’s GID to 1000 and add your user (user in this example) to it in /etc/group:

vboxsf:x:1000:user

This doesn’t seem necessary for Parallels, but I recommend you check the permissions on /media/psf regardless.

This is key to getting shared folders working nicely with Vagrant, because VirtualBox adds the vboxsf group with the next available GID on the VM (which is 1001 under Ubuntu on a fresh install), but the vagrant UID/GID is typically 1000 (since it’s the first user created in a guest system).

So you need to make sure these match, or else you’ll have all sorts of problems with file permissions on shared filesystems.
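A quick way to confirm the IDs line up is to read the GID straight out of the group file. This is a minimal sketch; group_gid is a hypothetical helper that just prints the third colon-separated field for the named group:

```shell
# Print the numeric GID of a group from a group(5)-format file.
# $1 is the group name, $2 the file to read (defaults to /etc/group).
group_gid() {
  awk -F: -v name="$1" '$1 == name { print $3 }' "${2:-/etc/group}"
}
```

On the VM, group_gid vboxsf should print 1000 after the edit, and id -u user shows the UID to compare it against.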

You should also tweak /etc/hosts and /etc/hostname accordingly:

$ cat /etc/hosts | head -2

127.0.0.1 localhost

127.0.1.1 containers.yourdomain.com containers

$ cat /etc/hostname

containers

…and, finally, place your SSH key into the user’s authorized_keys file (manually, since the Mac, alas, doesn’t ship with ssh-copy-id):

me@mac:~$ cat ~/.ssh/id_rsa.pub | pbcopy

me@mac:~$ ssh user@containers.local

Password:

Last login: Tue May 28 17:28:32 2013 from 192.168.56.1

user@containers:~$ mkdir .ssh; tee .ssh/authorized_keys

# Cmd+V - Ctrl+D

user@containers:~$ chmod 400 .ssh/*

Installing Vagrant with LXC support

After this is done, you should be able to fire up your VM and SSH to it by typing:

ssh user@containers.local

And be magically there. So now you can install Vagrant and Fabio Rehm’s awesome plug-in by doing something like:

wget http://files.vagrantup.com/packages/0219bb87725aac28a97c0e924c310cc97831fd9d/vagrant_1.2.4_x86_64.deb

sudo dpkg -i vagrant_1.2.4_x86_64.deb

vagrant plugin install vagrant-lxc

…which is all you need to get started. To get suitable boxes and start working, check out the plug-in README, and this article on building your own base boxes.

The Second Neat Bit

Now set up a VirtualBox shared folder pointing to your home directory (Parallels does this by default) – it will show up as a /media/sf_username mount inside the VM.

The really neat bit is that if you cd into that, set up a Vagrantfile, do a vagrant up --provider=lxc and then vagrant ssh into the LXC container, you’ll be able to access your Mac’s file system via /vagrant from inside the container.
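If you don’t have a Vagrantfile yet, here is a minimal sketch of one, written out from the shell. The function name write_vagrantfile and the box name quantal64 are placeholders for illustration; use the name of whichever LXC box you actually added:

```shell
# Write a minimal Vagrantfile into a directory (default: the current one).
# "quantal64" is a placeholder box name, not something this setup provides.
write_vagrantfile() {
  dir="${1:-.}"
  cat > "$dir/Vagrantfile" <<'EOF'
Vagrant.configure("2") do |config|
  config.vm.box = "quantal64"
end
EOF
}
```

From a project directory under the shared folder, run write_vagrantfile, then vagrant up --provider=lxc followed by vagrant ssh.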

Wrap your head around that for a second – you can develop on your Mac and test your code on a set of different Linux environments without ferrying copies across. And, of course, you can use Puppet to provision those environments automatically for you.

Port Forwarding

Finally, a small fix - to be able to access forwarded ports from your containers on versions 0.3.4 and 0.4.0 of the plug-in, you need to tweak it a bit:

cd ~/.vagrant.d/gems/gems/vagrant-lxc-0.4.0/lib/vagrant-lxc/action

vi forward_ports.rb

# now edit the redirect_port function to remove the 127.0.0.1 binding like so:

def redirect_port(host, guest)

#redir_cmd = "sudo redir --laddr=127.0.0.1 --lport=#{host} --cport=#{guest} --caddr=#{@container_ip} 2>/dev/null"

redir_cmd = "sudo redir --lport=#{host} --cport=#{guest} --caddr=#{@container_ip} 2>/dev/null"

@logger.debug "Forwarding port with `#{redir_cmd}`"

spawn redir_cmd

end
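The same edit can be made without opening an editor. This sketch (unbind_redir is a made-up name) simply strips the --laddr=127.0.0.1 argument wherever it appears in the file and keeps a .bak copy of the original. It assumes the line still looks like the one shown above, and the change will be lost whenever the plug-in is updated:

```shell
# Remove the loopback-only binding from the redir command line in a file.
# $1 is the path to forward_ports.rb; the original is saved as <file>.bak.
unbind_redir() {
  sed -i.bak 's/ --laddr=127\.0\.0\.1//' "$1"
}
```

Call it as unbind_redir forward_ports.rb from the directory shown above, then reload the container so the forwards are set up again.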

And that’s it, you’re done. Enjoy.

Here Be Dragons

Another thing I’m experimenting with is using btrfs - Ubuntu 13.04 ships with a version of LXC that is btrfs-aware and significantly further reduces the amount of disk space required (lxc-clone actually performs a filesystem snapshot, which is terrific). That’s a little beyond the scope of this HOWTO, but it’s worth outlining for ease of reference.

Setting up Ubuntu with btrfs

If you really want to try that, here’s a short list of the steps I’ve followed so far:

- Create a new VM with 512MB of RAM, two network interfaces (as above) and three separate VDI volumes:

  - 2GB for /

  - 512MB for swap

  - 8GB for /var

- Install from the Ubuntu 13.04 Server ISO

- Set up the partitions using btrfs for both data volumes (/ and /var), with noatime set (that’s about the only useful flag you can set inside the installer)

- Once the partitioner sets up your volumes, switch to a new console and remount them with:

mount -o remount,compress=lzo /target

mount -o remount,compress=lzo /target/var

- Once you’ve installed the system and before rebooting, go back to the alternate console and edit /etc/fstab to have noatime,ssd,compress=lzo,space_cache,autodefrag as options (you can use compress-force=lzo if you want everything to be compressed).

- To get rid of the “Sparse file not allowed” message upon booting, comment out the following line in /etc/grub.d/00_header and run update-grub:

if [ -n "\${have_grubenv}" ]; then if [ -z "\${boot_once}" ]; then save_env recordfail; fi; fi

- Proceed as above, and hike up the RAM on the VM to your liking. If all goes well, you’ll have a much smaller VM footprint due to the use of lzo compression, a relatively small swapfile and copy-on-write support.

There are only three caveats:

- You need to do this for the moment (should be fixed in updated boxes after 0.3.4)

- The VM will be a little more CPU-intensive when doing disk-intensive tasks (I find it a sensible trade-off)

- The usual tricks for shrinking disk files on either VirtualBox or Parallels no longer apply to btrfs volumes (so far even GParted Live hasn’t really worked for me), so it’s probably best to save a copy of the VM somewhere.