This week’s notes come a little earlier, partly because of an upcoming long weekend and partly because I’ve been mulling over the LLM space again now that llama3 and phi-3 have been released so close together.

Thanks to my recent AMD iGPU tinkering I’ve been spending a fair bit of time seeing how feasible it is to run these “small” models on a slow(ish), low-power consumer-grade GPU (as well as on more ARM hardware that I will write about later), and I think we’re now at a point where these things are borderline usable for some tasks and the tooling is finally becoming truly polished.

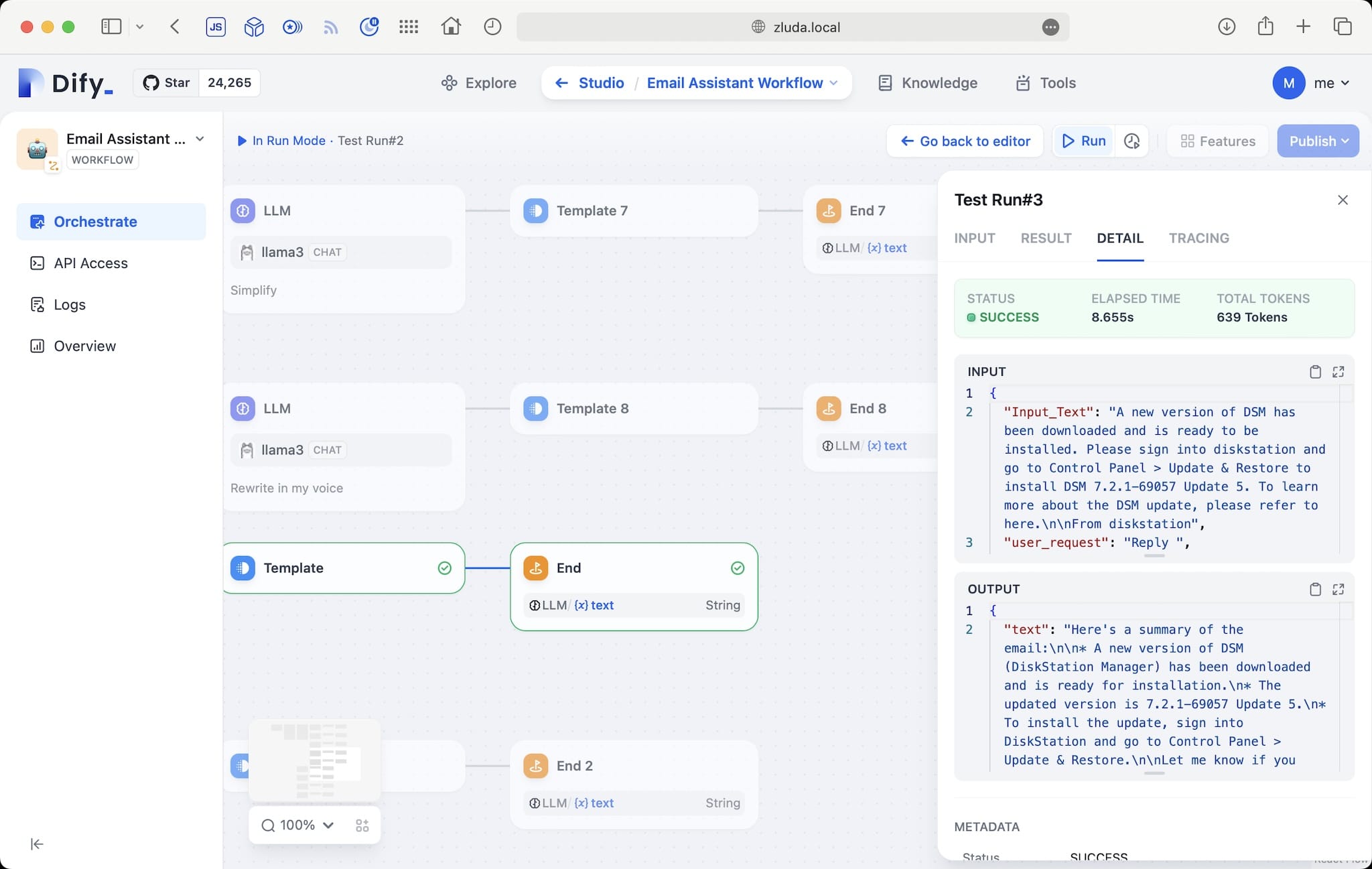

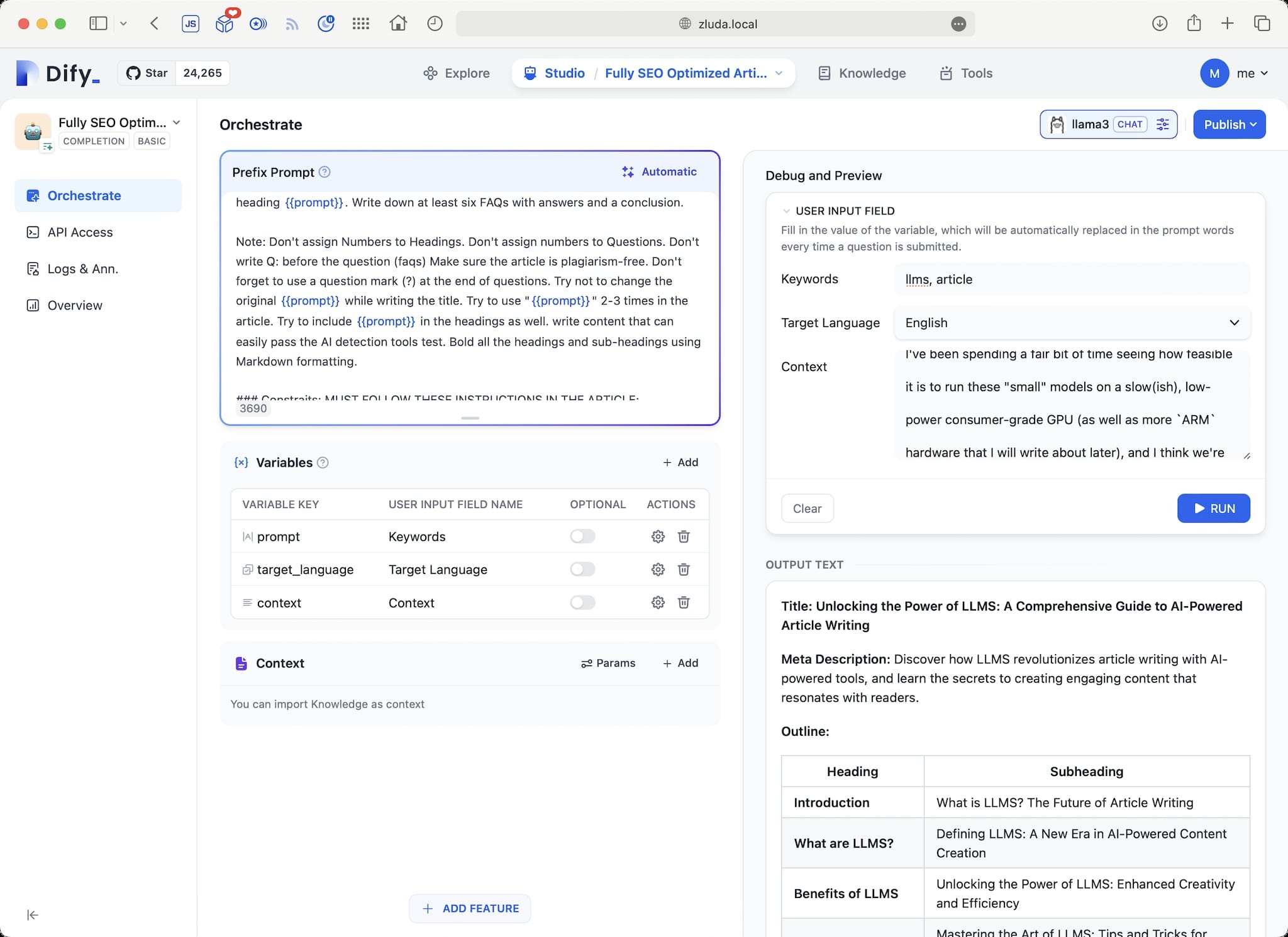

As an example, I’ve been playing around with dify for a few days. In a nutshell, it is a node-based visual environment for defining chatbots, workflows and agents that can run locally against llama3 or phi-3 (or any other LLM, for that matter), and it makes it reasonably easy to define and test new “applications”.

It’s pretty great in the sense that it is a docker-compose invocation away and the choice of components is sane, but it is, like all modern solutions, just a trifle too busy:

    $ docker ps
    CONTAINER ID   IMAGE                              COMMAND                  CREATED        STATUS                  PORTS                               NAMES
    592917c4d941   nginx:latest                       "/docker-entrypoint.…"   36 hours ago   Up 36 hours             0.0.0.0:80->80/tcp, :::80->80/tcp   me_nginx_1
    2942ff1a3983   langgenius/dify-api:0.6.4          "/bin/bash /entrypoi…"   36 hours ago   Up 36 hours             5001/tcp                            me_worker_1
    bc1319008a7a   langgenius/dify-api:0.6.4          "/bin/bash /entrypoi…"   36 hours ago   Up 36 hours             5001/tcp                            me_api_1
    2265bb3bc546   langgenius/dify-sandbox:latest     "/main"                  36 hours ago   Up 36 hours                                                 me_sandbox_1
    aed35da737f9   langgenius/dify-web:0.6.4          "/bin/sh ./entrypoin…"   36 hours ago   Up 36 hours             3000/tcp                            me_web_1
    824dfb63f2d0   postgres:15-alpine                 "docker-entrypoint.s…"   36 hours ago   Up 36 hours (healthy)   5432/tcp                            me_db_1
    bbf486e08182   redis:6-alpine                     "docker-entrypoint.s…"   36 hours ago   Up 36 hours (healthy)   6379/tcp                            me_redis_1
    b4280691205d   semitechnologies/weaviate:1.19.0   "/bin/weaviate --hos…"   36 hours ago   Up 36 hours                                                 me_weaviate_1

It has most of what you’d need to do RAG or ReAct, except for things like database and filesystem indexing, and it is sophisticated to the point where you can not just provide your models with a plethora of baked-in tools (for function calling) but also define your own tools and API endpoints to call. It is pretty neat, really, and probably the most well-rounded, self-hostable graphical environment I’ve come across so far.
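To make “define your own tools” a little more concrete, here is a minimal sketch of what function calling tends to boil down to: a plain function, a JSON-schema-style description the model gets to see, and a dispatcher that runs whatever the model asks for. This is not dify’s API. The `TOOLS` registry, the `tool` decorator, `word_count` and the shape of the model’s reply are all assumptions made up for illustration, and the model’s reply is simulated.

```python
import json
from typing import Any, Callable

# Hypothetical registry: tool name -> (callable, JSON-schema-style description).
TOOLS: dict[str, tuple[Callable[..., Any], dict]] = {}


def tool(description: str, parameters: dict):
    """Register a plain function as a tool the model may ask to call."""
    def register(func):
        TOOLS[func.__name__] = (func, {
            "name": func.__name__,
            "description": description,
            "parameters": parameters,
        })
        return func
    return register


@tool(
    description="Count the words in a piece of text.",
    parameters={
        "type": "object",
        "properties": {"text": {"type": "string"}},
        "required": ["text"],
    },
)
def word_count(text: str) -> int:
    return len(text.split())


def tool_specs() -> list[dict]:
    """Descriptions to send along with the prompt so the model knows what exists."""
    return [spec for _, spec in TOOLS.values()]


def dispatch(model_output: str) -> Any:
    """Run the tool named in a JSON reply shaped like {"name": ..., "arguments": {...}}."""
    call = json.loads(model_output)
    func, _ = TOOLS[call["name"]]
    return func(**call["arguments"])


if __name__ == "__main__":
    # Simulated model reply; no real LLM is involved here.
    reply = '{"name": "word_count", "arguments": {"text": "small models are borderline usable"}}'
    print(dispatch(reply))
```

The sketch deliberately leaves out the parts that are hard in practice: validating arguments against the schema and coping with a model that returns malformed JSON or asks for a tool that does not exist.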

But I have trouble building actually useful applications with it, because none of the data I want to use is accessible to it, and it can’t automate the things I want to automate because it doesn’t have the right hooks. By shifting everything to the web, we’ve forgone the ability to, for instance, index a local filesystem or e-mail archive, interact with desktop applications, or even create local files (like, say, a PDF).

And all the stuff I want to do with local models is, well, local. And still relies on things like Mail.app and actual documents. They might be in the cloud, but they are neither in the same cloud nor are they accessible via uniform APIs (and, let’s face it, I don’t want them to be).

This may be an unpopular opinion in these days of cloud-first everything, but the truth is that I don’t want to have to centralize all my data or deal with the hassle of multiple cloud integrations just to be able to automate it. I want to be able to run models locally, and I want to be able to run them against my own data without having to jump through hoops.

On the other hand, I am moderately concerned with control over the tooling and code that runs these agents and workflows. I’ve had great success in using Node-RED to summarize my RSS feeds (partly because it can be done “upstream” without need for any local data), and there’s probably nothing I can do in dify that I can’t do in Node-RED, but building a bunch of custom nodes for this would take time.
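For a sense of why the RSS case is the easy one, the “upstream” half of it can be sketched in a few lines of standard-library Python: parse the feed, then assemble a summarization prompt. This is not the Node-RED flow mentioned above, just the same idea under stated assumptions. `SAMPLE_FEED`, `feed_items` and `summary_prompt` are hypothetical names, the feed is inline sample data, and the step that actually sends the prompt to a model is left out.

```python
import xml.etree.ElementTree as ET

# Inline sample data standing in for a fetched RSS 2.0 feed (an assumption).
SAMPLE_FEED = """<rss version="2.0"><channel>
  <title>Example feed</title>
  <item><title>First post</title><description>Some text about GPUs.</description></item>
  <item><title>Second post</title><description>Some text about ARM boards.</description></item>
</channel></rss>"""


def feed_items(rss_xml: str) -> list[dict]:
    """Extract the title and description of every <item> in an RSS document."""
    root = ET.fromstring(rss_xml)
    return [
        {
            "title": item.findtext("title", default="").strip(),
            "description": item.findtext("description", default="").strip(),
        }
        for item in root.iter("item")
    ]


def summary_prompt(items: list[dict], max_items: int = 20) -> str:
    """Build a plain-text prompt asking a model to summarize the given items."""
    lines = [f"- {i['title']}: {i['description']}" for i in items[:max_items]]
    return "Summarize the following feed items in three bullet points:\n" + "\n".join(lines)


if __name__ == "__main__":
    print(summary_prompt(feed_items(SAMPLE_FEED)))
```

Nothing here touches local data, which is exactly what makes this workflow easy to host anywhere.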

Dropping back to actual code, a cursory review of the current state of the art around langchain and all sorts of other LLM-related projects shows that code quality still leaves a lot to be desired¹, to the point where it’s just easier (and more reliable) to write some things from scratch.

But the key thing for me is that creating something genuinely useful and reliable that does more than just spew text is still… hard. And I really don’t like that the current state of the art is still so focused on content generation.

It’s not the prompting, or the endless looping and filtering, or even the fact that many agent frameworks actually generate their own prompts to a degree where they’re impossible to debug–it’s the fact that the actual useful stuff is still hard to do, and LLMs are still a long way from being able to do it.

They do make for amazing Marketing demos and autocomplete engines, though.

-

¹ I recently came across a project that was so bad that it just had to be AI-generated. The giveaway was the complete comment coverage of things that were essentially re-implementations of stuff in the Python standard library, written in the typical “Java cosplay” style of object-oriented code that is so prevalent in the LLM space. It was that bad.