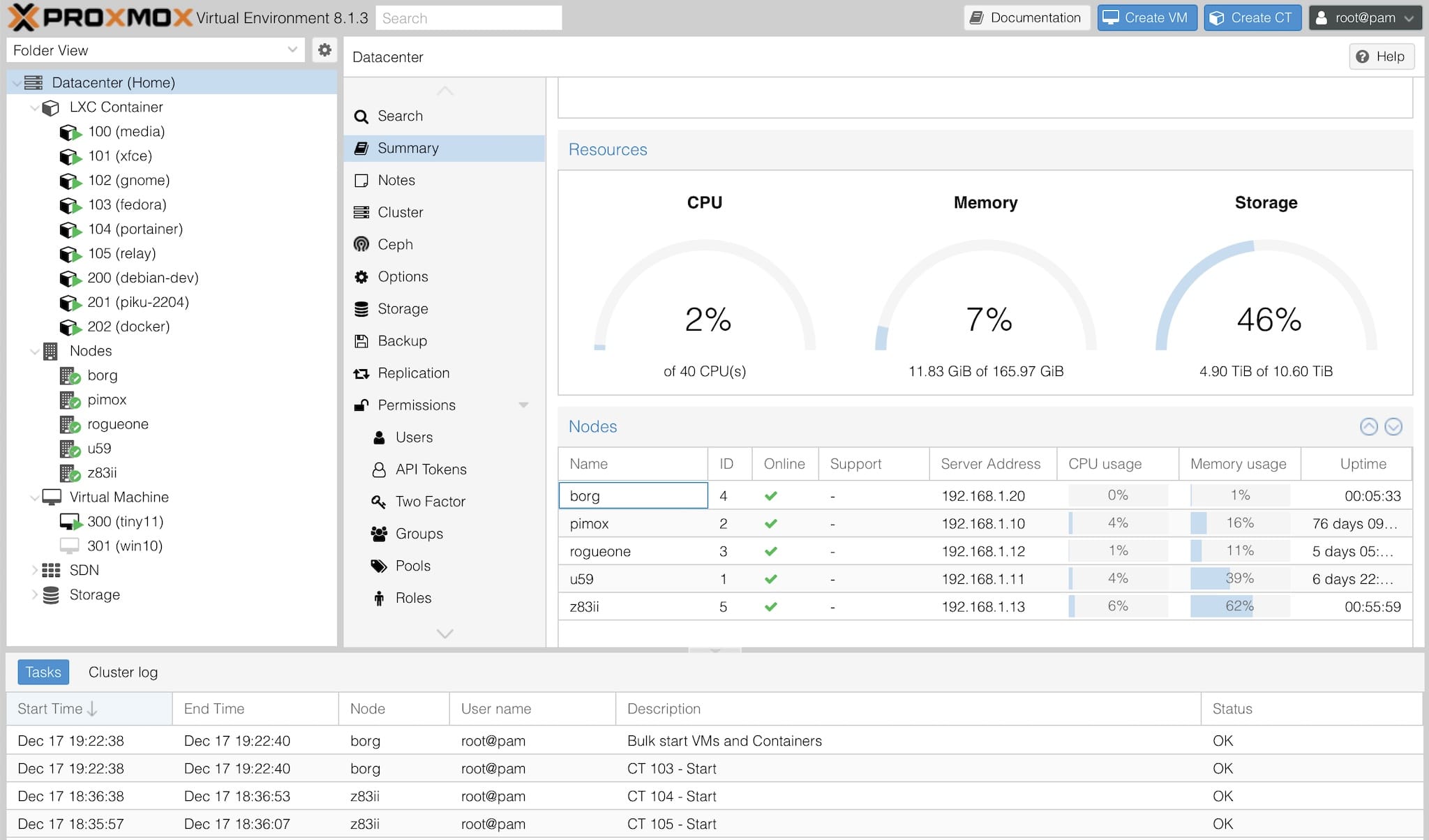

My homelab consolidation is finally complete–after migrating my media services to a Beelink U59 running Proxmox, I went through all my machines and assimilated them into a single cluster:

borg was already running Proxmox, so I went ahead and migrated the LXD stack on rogueone by converting each container’s rootfs as before, which was easy enough, if slow–all my Fedora sandboxes were up and running again in a couple of hours.

I was a bit sad about decommissioning the z83ii and realized I needed a small Intel machine to be a guinea pig for eventually migrating over my home automation stack, so I went ahead and installed Proxmox on it too… Except I had to do it the hard way.

The Proxmox installer didn’t like the z83ii’s eMMC storage, so I installed a very minimal Debian 12 system and automated the rest. The result is a little cramped RAM-wise, but I have another z83ii someplace and will eventually repeat the process so I can use both to test disk replication and fail-over[1].

One notable thing I had to do (so as to get Tailscale running inside a new, unprivileged LXC) was to map the tun/tap devices into the containers:

    # cat /etc/pve/lxc/105.conf | grep dev
    lxc.cgroup2.devices.allow: c 10:200 rwm
    lxc.mount.entry: /dev/net/tun dev/net/tun none bind,create=file
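If you want to script that step, a minimal Python sketch of an idempotent way to add those two entries could look like this. The function name `ensure_tun_lines` is hypothetical, it operates on the config text rather than on `/etc/pve` directly, and it assumes the container has no snapshot sections (`[name]` blocks) in its config, since lines appended after one would belong to that snapshot:

```python
# The two entries shown above, copied verbatim from the container config.
TUN_LINES = [
    "lxc.cgroup2.devices.allow: c 10:200 rwm",
    "lxc.mount.entry: /dev/net/tun dev/net/tun none bind,create=file",
]


def ensure_tun_lines(config_text: str) -> str:
    """Return config_text with the tun/tap entries appended if missing.

    Assumption: the config has no snapshot sections, so appending at the
    end of the file still lands in the main container section.
    """
    lines = config_text.splitlines()
    existing = {line.strip() for line in lines}
    for entry in TUN_LINES:
        if entry not in existing:
            lines.append(entry)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical sample config, for illustration only.
    sample = "arch: amd64\nhostname: tailscale\nunprivileged: 1\n"
    print(ensure_tun_lines(sample), end="")
```

Running it twice over the same text leaves the result unchanged, so it is safe to call from a provisioning script. The container needs a restart to pick up the new entries.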

The Good Bits

The main reason I finally did this was that Proxmox takes away a bunch of the hassle of running my local machines and makes it just a little bit closer to my day-to-day experience on Azure.

The things I like the most about my current setup are being able to:

- Keep tabs on all resources at a glance (CPU, RAM, disk, etc.) across multiple hosts.

- Snapshot any VM/LXC and return them to a “known good” state with a couple of clicks (thanks to LVM).

- Back up any of my systems to my NAS from any host with a single click (any node added to the cluster inherits those settings).

- Migrate VMs or LXC containers to any other host (as long as there are enough resources) also with a single click (I haven’t tried volume replication and “live” migration yet, but fully intend to).

- Add/remove/migrate storage volumes and images with ease (as well as manage mount points, to a degree).

- Manipulate container configurations via the CLI, and according to standard Linux primitives.

- Have full control of networking down to the VLAN level[2].
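The snapshot, rollback and migrate actions in the list above also have CLI equivalents through `pct`, the container management tool that ships with Proxmox. Here is a minimal Python sketch that only builds and prints the commands (a dry run). The helper `pct_command` and the snapshot name `known_good` are hypothetical; the container ID 105 and the node name borg are taken from earlier in this post:

```python
import shlex


def pct_command(action: str, vmid: int, arg: str) -> list:
    """Build a `pct` argument list for snapshot, rollback, or migrate.

    `arg` is the snapshot name for snapshot/rollback and the target
    node name for migrate.
    """
    if action not in {"snapshot", "rollback", "migrate"}:
        raise ValueError(f"unsupported action: {action}")
    return ["pct", action, str(vmid), arg]


if __name__ == "__main__":
    # Dry run: print the commands instead of executing them.
    for cmd in (
        pct_command("snapshot", 105, "known_good"),
        pct_command("rollback", 105, "known_good"),
        pct_command("migrate", 105, "borg"),
    ):
        print(shlex.join(cmd))
```

Passing one of the returned lists to `subprocess.run(cmd, check=True)` on a Proxmox node would execute it; VMs use `qm` rather than `pct`.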

However, I can’t:

- Monitor non-Proxmox machines (would be nice).

- Use SMB/CIFS filesystems inside unprivileged LXC containers trivially[3].

- Play with distributed storage (I just don’t have the right hardware).

- Keep track of Docker containers (or open ports for running services).

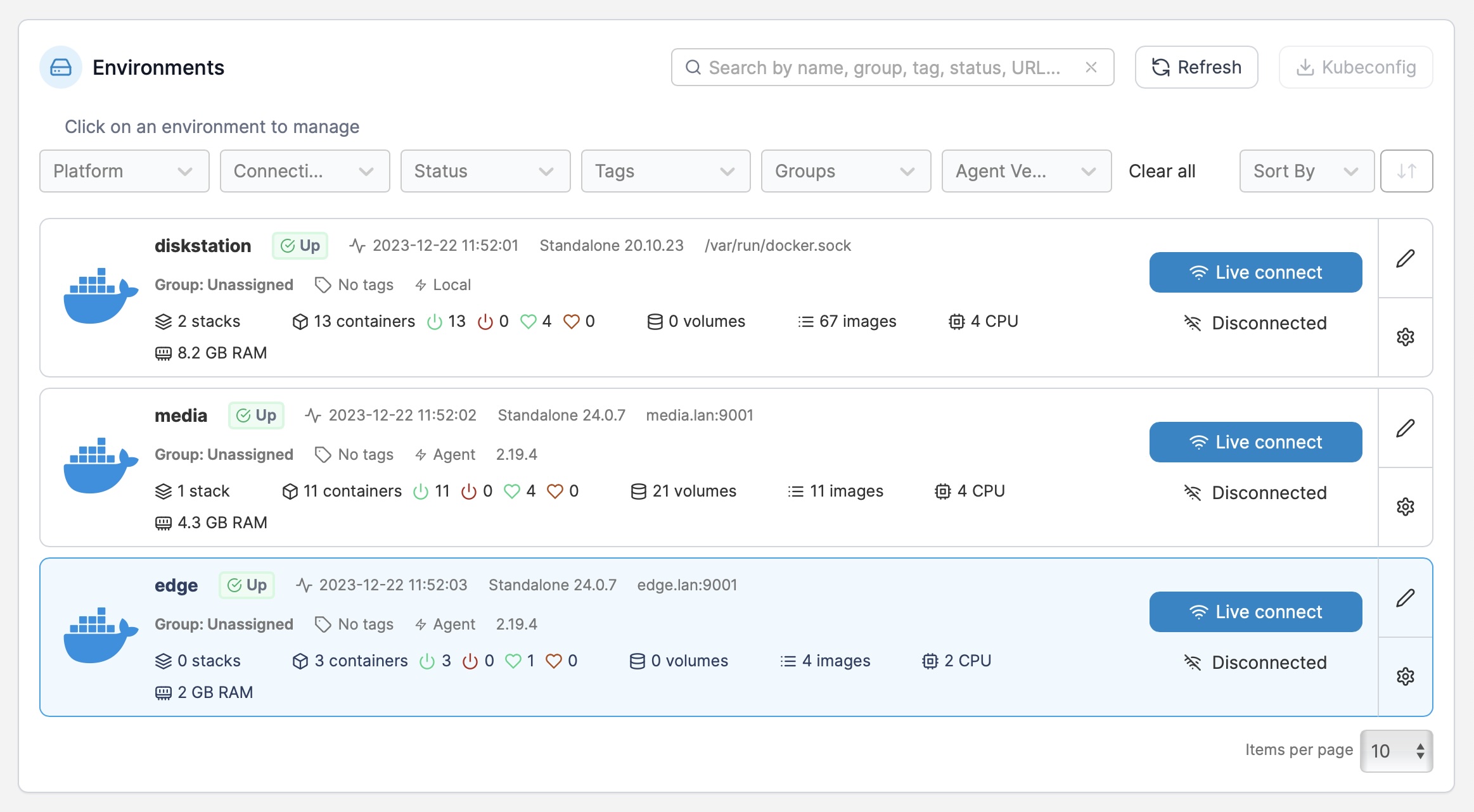

Another Layer–Portainer

As it happens I got a few notes regarding my last homelab post, and a couple of people asked me why I wasn’t using Portainer. Well, I am, but not for infrastructure management:

Besides the fact that I need to run actual VMs (for Windows, at least), there’s the simple fact that I prefer to just use docker-compose and have a trivial way to version things.

Portainer is very nice if you manage a mix of standard containers or a Kubernetes cluster, but it has a few things it can’t do in that scope, and the killer ones for me are:

- Export things to a docker-compose file instead of a JSON dump.

- Version configurations easily.

- Migrate stacks between hosts.

- Back up or snapshot stateful volumes.

You can “migrate” the stacks, but all the stateful volumes are left behind. I get that this is because stateful storage is supposed to be in Kubernetes persistent volume claims these days, but for simple docker-compose stacks, it’s a big flaw.
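One common stopgap for the volumes that get left behind is to archive each named volume with a throwaway container before moving a stack. This is a general Docker pattern, not a Portainer feature. A minimal Python sketch that builds such a command follows. The helper name `volume_backup_command`, the volume name `terminal_data` and the backup path `/mnt/nas/backups` are all hypothetical:

```python
import shlex


def volume_backup_command(volume: str, backup_dir: str, image: str = "alpine") -> list:
    """Build a `docker run` command that tars a named volume into backup_dir.

    The volume is mounted read-only at /data and the archive is written
    to /backup inside a container that is removed when it exits.
    """
    return [
        "docker", "run", "--rm",
        "-v", f"{volume}:/data:ro",
        "-v", f"{backup_dir}:/backup",
        image,
        "tar", "czf", f"/backup/{volume}.tar.gz", "-C", "/data", ".",
    ]


if __name__ == "__main__":
    # Dry run: print the command instead of executing it.
    print(shlex.join(volume_backup_command("terminal_data", "/mnt/nas/backups")))
```

Restoring on the target host is the same idea in reverse: mount an empty volume and extract the archive into it before bringing the stack up.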

On the other hand, it is very nice to use to look at Docker logs, pop open a shell, etc.–I’ve been using it on my Synology NAS alongside the built-in Container Manager, and also added a couple of remote agents to it to manage stacks running inside VM/LXC hosts this week.

A minor gripe with Portainer environments

One thing I did come across was that even with “volumeless” containers, Portainer currently fails to create containers on agent-managed hosts, which is inexcusable.

For instance, I can’t start this trivial web terminal sandbox on a connected host environment, because the agent cannot build the container locally:

    services:
      terminal:
        hostname: terminal
        ports:
          - 7681:7681
        build:
          context: .
          dockerfile_inline: |
            FROM alpine:latest
            RUN apk add --no-cache tini ttyd socat openssl openssh ca-certificates vim tmux htop mc unzip && \
                echo "export PS1='\[\033[01;32m\]\u@\h\[\033[00m\]:\[\033[01;34m\]\w\[\033[00m\]\$ '" >> /etc/profile && \
                # Fake poweroff
                echo "alias poweroff='kill 1'" >> /etc/profile
            ENV TINI_KILL_PROCESS_GROUP=1
            EXPOSE 7681
            ENTRYPOINT ["/sbin/tini", "--"]
            CMD [ "ttyd", "--writable", "-s", "3", "-t", "titleFixed=/bin/sh", "-t", "rendererType=webgl", "-t", "disableLeaveAlert=true", "/bin/sh", "-i", "-l"]

But those things will eventually get fixed. And now I have a (nominally) stable infrastructure to tide me over during the holidays, leaving me (hopefully) free to do some coding and flesh out a couple of prototypes. And who knows, I might even add k3s to the mix as well… I miss it.

1. The z83ii boxes I have are limited to 2GB RAM and a 32GB eMMC (and Proxmox takes up 1GB of RAM idle on it), but their 4 cores and an attached USB 3 HDD make them pretty useful.

2. I haven’t had time to delve into this yet–but I fully intend to now that most of my LAN switches have been swapped out for managed ones.

3. This is a bit of a pet peeve, since I’ve been fighting with smbnetfs and mc for a couple of hours now–the former chokes on large file moves and the latter shows FUSE directories as empty, which is just sad in 2023.