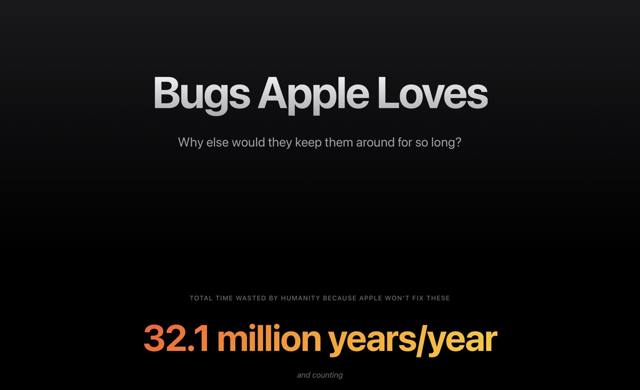

macOS Tahoe 26.3 is Broken

I have no idea what is happening, since I can’t even find any decent logs in Console.app, but it seems that the latest update to macOS Tahoe (26.3) has a serious bug.

My desktop (a Mac Mini M2 Pro) has been crashing repeatedly since the update, and the symptom is always the same: it suddenly becomes sluggish (the mouse cursor slows down, then freezes completely), and then after a minute both my displays flash purple and then go black as the machine reboots.

For a machine that used to be on 24/7 with zero issues, this is a definite regression.

I’ve tried keeping Activity Monitor open to see if I can spot any process that is consuming too much CPU or memory (the obvious suspect would be WindowServer), but everything looks normal until the crash happens, and there is only so much attention one can spare while, you know, doing productive stuff.
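Since watching Activity Monitor by hand doesn’t scale, one option is to log the busiest processes to a file every few seconds, so the last samples before a freeze survive the reboot. What follows is a minimal sketch of that idea, not something used in the troubleshooting described here. It assumes the stock `ps` command and its `pid`, `pcpu`, `pmem` and `comm` columns, and the function names, log file name and 10-second interval are all made up for illustration.

```python
import os
import subprocess
import time
from datetime import datetime


def parse_ps(output):
    """Parse `ps -Ao pid,pcpu,pmem,comm` output into (pid, cpu, mem, command) tuples."""
    rows = []
    for line in output.strip().splitlines()[1:]:  # skip the header row
        parts = line.split(None, 3)  # the command may contain spaces
        if len(parts) < 4:
            continue
        pid, cpu, mem, comm = parts
        try:
            # Some locales print decimals with a comma, so normalise first.
            rows.append((int(pid),
                         float(cpu.replace(",", ".")),
                         float(mem.replace(",", ".")),
                         comm.strip()))
        except ValueError:
            continue  # ignore lines that do not look like process rows
    return rows


def top_by_cpu(rows, n=5):
    """Return the n rows with the highest CPU percentage."""
    return sorted(rows, key=lambda r: r[1], reverse=True)[:n]


def sample(n=5):
    """Take one snapshot of the busiest processes."""
    out = subprocess.run(["ps", "-Ao", "pid,pcpu,pmem,comm"],
                         capture_output=True, text=True, check=True).stdout
    return top_by_cpu(parse_ps(out), n)


def watch(path="process-samples.log", interval=10):
    """Append a snapshot to `path` every `interval` seconds until interrupted."""
    with open(path, "a") as f:
        while True:
            stamp = datetime.now().isoformat(timespec="seconds")
            for pid, cpu, mem, comm in sample():
                f.write(f"{stamp}\t{pid}\t{cpu}\t{mem}\t{comm}\n")
            # Ask the OS to write the data out so a hard crash is less
            # likely to lose the most recent samples.
            f.flush()
            os.fsync(f.fileno())
            time.sleep(interval)
```

Calling `watch()` from a terminal leaves a tab-separated log behind. After a crash, the last few timestamps show what was using the most CPU just before the machine froze, provided the culprit is a user-visible process at all.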

I have a sneaking suspicion that it might be Apple’s container framework, but I have no way to confirm that other than (I guess) stop using it for a few days.

But, again, the fact that I can’t find any logs or error messages in Console.app and that searching for anything inside it is still a nightmare is a testament to Apple’s general software quality and ability to troubleshoot anything these days…

I filed it as FB21983519 in case anyone cares.

Update, a few hours later: It’s not the `container` framework. I just came back to my machine after lunch and it had crashed again, so I guess it’s something else. Again, nothing shows up in the console logs other than the `BOOT TIME` entries for the reboot. There were no hardware changes, no new peripherals, and I’ve removed most of my login items (not that I have that many, and none of them was recently updated).

Update, a day later: The crashes continue. My MacBook Pro, also running 26.3, mysteriously crashed while asleep, the first time this has happened to me in recent memory. I have no idea if it’s related, but it seems to be a similar issue. Again, no logs or error messages in `Console.app` other than the `BOOT TIME` entries.

Update, two days later: A few people have suggested this might be a buggy interaction between `container` and Time Machine, which would be serendipitous given my recent problems with it. I did remove `~/Library/Application Support/com.apple.container` from my desktop early on and still had a crash, and my MacBook Pro never ran `container` in the first place, so I don’t think that’s the issue. And somehow, Touch ID stopped working on my MacBook Pro, which is just the cherry on top of this whole mess.

Update, a week later: I reinstalled macOS (sadly, Tahoe again) on my desktop and removed an errant sparse disk image from my laptop. Apparently Time Machine was indeed the problem on the laptop at least: even though I wasn’t running `container` on it, I did have a personal encrypted volume that 26.3 objected to. I now suspect that both 26.2 and 26.3 broke Time Machine in different ways.